The world needs reliable AI detection tools; however, no AI detection tool is ever going to be 100% accurate.

It’s important to understand their limitations so that you can use them responsibly.

What does this mean for developers of AI detectors? They should be as transparent as possible about the capabilities and limitations of their detectors.

At Originality.ai, we believe that transparency is a top priority.

So, below we’ve included our analysis of Originality.ai’s AI detector efficacy, including accuracy data and false positive rates.

Then, to review third-party data on Originality.ai's AI detector accuracy, see this meta-analysis of multiple academic studies on AI text detection.

Try the patented Originality.ai AI Detector for free today!

We are proposing a standard for testing AI detector effectiveness and AI detector accuracy, along with the release of an Open Source tool to help increase the transparency and accountability of all AI content detectors.

We hope to achieve this idealistic goal by…

Interested in evaluating an AI content detector's potential use case for your organization? Then, this article is for you.

Have a question, suggestion, research question, or commercial use case? Please contact us.

Across all tests, Originality.ai has increased its accuracy, further establishing Originality.ai as the most accurate AI checker.

Originality.ai offers the most accurate AI detector — so what?

Before diving into our accuracy rates, let’s first review why AI detectors are important — or rather essential — in 2025, starting with a challenge to OpenAI’s stance on AI detection.

In July 2023, when OpenAI shut down its own AI detection tool, its announcement suggested that AI detectors don’t work.

So, do AI detectors work? OpenAI says no.

However, oversimplified views that “AI detectors are perfect” or “AI detectors don't work” are equally problematic.

We still have a standing offer to OpenAI (or anyone willing to take us up on it) to back up the claim that AI detectors don't work, with proceeds sent to charity. Learn more here.

AI content detectors need to be part of the solution to undetectable AI-generated content.

Current unsupported AI detection accuracy claims, and the research papers that have tackled this problem so far, are simply not good enough given the societal risks that LLM-generated content poses.

Here are some real-life scenarios when AI can pose significant problems:

Multiple third-party studies have also found that humans struggle to identify AI-generated content.

Then, there are also implications for SEOs and marketers.

AI content is rising in Google, which presents a number of challenges, so we created a Live Dashboard to monitor AI in Google search results.

Google can detect, and does penalize, AI content; this is already happening through manual updates and Google algorithm updates. Check out our study on Google AI Penalties.

In 2025, Google also released updated Search Quality Rater Guidelines stating:

“The Lowest rating applies if all or almost all of the MC on the page (including text, images, audio, videos, etc) is copied, paraphrased, embedded, auto or AI generated, or reposted from other sources with little to no effort, little to no originality, and little to no added value for visitors to the website.” - Source: Google

Claimed accuracy rates with no supporting studies are clearly a problem.

We hope the days of AI detection tools claiming 99%+ accuracy with no data to support it are over. A single number is not good enough in the face of the societal problems AI content can produce, and the important role AI content detectors have to play.

The FTC has warned on multiple occasions against tools making unsubstantiated claims about AI detection accuracy or AI efficacy.

In 2025, the FTC addressed misleading accuracy claims from a company that advertised AI detection without the data to back it up:

“The order settles allegations that Workado [Content at Scale now BrandWell] promoted its AI Content Detector as “98 percent” accurate in detecting whether text was written by AI or human. But independent testing showed the accuracy rate on general-purpose content was just 53 percent, according to the FTC’s administrative complaint. The FTC alleges that Workado violated the FTC Act because the “98 percent” claim was false, misleading, or non-substantiated.” - Source: FTC

“If you’re selling a tool that purports to detect generative AI content, make sure that your claims accurately reflect the tool’s abilities and limitations.” - Source: FTC (page since removed)

“you can’t assume perfection from automated detection tools. Please keep that principle in mind when making or seeing claims that a tool can reliably detect if content is AI-generated.” - Source: FTC (page since removed)

“Marketers should know that — for FTC enforcement purposes — false or unsubstantiated claims about a product’s efficacy are our bread and butter” - Source: FTC (page since removed)

We fully agree with the FTC on this and have provided the tool needed for others to replicate similar accuracy studies for themselves.

Misunderstanding how to detect AI-generated content has already caused significant harm, including a case in which a professor incorrectly failed an entire class.

AI detection tools' “accuracy” should be communicated with the same transparency and accountability that we want to see in AI’s development and use. Our hope is that this study will move us all closer to that ideal.

At Originality.ai, we aren’t for or against AI-generated content… but believe in transparency and accountability in its development, use, and detection.

Originality.ai helps ensure there is trust in the originality of the content being produced by writers, students, job applicants or journalists.

Pro Tip: Scanning high volumes of content for AI? Check out our Bulk Scan feature.

Along with this study, we are releasing the latest version of our AI content detector. Below is our release history.

1.1 – November 2022 BETA (released before ChatGPT)

1.4 – April 2023

2.0 Standard – August 2023

3.0 Turbo – February 2024

Even easier-to-use open-source AI detection efficacy research tool released.

2.0.1 Standard (BETA) – July 2024

1.0.0 Lite – July 2024

3.0.1 Turbo – October 2024

Multilingual 2.0.0 – May 2025

1.0.1 Lite – June 2025

1.0.2 Lite – September 2025

3.0.2 Turbo – September 2025

0.0.5 Academic – September 2025

Our AI detector is built on a carefully fine-tuned large language model trained with supervised learning.

We start with a large language model (LLM) and train it on millions of carefully selected records of known AI-generated and known human-written content, so that it learns to recognize the patterns that distinguish the two.

More details on our AI content detection.

Below is a brief summary of the 3 general approaches that an AI detector (called a “classifier” in machine learning terms) can use to distinguish between AI-generated and human-generated text.

The feature-based approach relies on the premise that text generated by an LLM like ChatGPT may differ from human text in consistent, identifiable ways. Some of the features that tools try to use are explained below.

Burstiness in text refers to the tendency of certain words to appear in clusters or "bursts" rather than being evenly distributed throughout a document.

AI-generated text may be more predictable (less bursty), since AI models tend to reuse certain words or phrases more often than a human writer would.

Some tools attempt to identify AI text using burstiness (more burstiness = human, less burstiness = AI).
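To make the idea concrete, here is a minimal Python sketch of one way to put a number on word-level burstiness: the Goh–Barabási burstiness coefficient B = (σ − μ) / (σ + μ), computed over the gaps between successive occurrences of a word. This is an illustrative choice, not the formula any particular detector (including ours) uses; the function name `word_burstiness` and the simple regex tokenizer are assumptions for the example. B near −1 means evenly spaced occurrences, near 0 means roughly random spacing, and above 0 means the word appears in clusters. Note that some tools use "burstiness" for a different quantity, such as variation in perplexity from sentence to sentence.

```python
import re
import statistics
from typing import Optional


def word_burstiness(text: str, word: str) -> Optional[float]:
    """Goh-Barabasi burstiness of `word` in `text` (illustrative sketch).

    Computes B = (sigma - mu) / (sigma + mu) over the gaps, in tokens,
    between successive occurrences of the word. Returns None when the
    word occurs fewer than three times (fewer than two gaps).
    """
    # Simple lowercase word tokenizer (an assumption for this sketch).
    tokens = re.findall(r"[a-z']+", text.lower())
    positions = [i for i, tok in enumerate(tokens) if tok == word.lower()]
    if len(positions) < 3:
        return None
    gaps = [b - a for a, b in zip(positions, positions[1:])]
    mu = statistics.mean(gaps)       # every gap is >= 1, so mu > 0
    sigma = statistics.pstdev(gaps)  # population standard deviation
    return (sigma - mu) / (sigma + mu)


even = "cat a b cat a b cat a b cat"
clustered = "cat cat cat a b c d e f g h cat cat cat"
print(word_burstiness(even, "cat"))       # -1.0 (perfectly regular spacing)
print(word_burstiness(clustered, "cat"))  # positive (clustered occurrences)
```

A detector using this idea would compute scores like this for many words and feed them into a classifier; the sketch stops at the score itself.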

Perplexity is a measure of how well a probability model predicts the next word. In the context of text analysis, it quantifies the uncertainty of a language model by calculating the likelihood of the model producing a given text.

Lower perplexity means that the model is less surprised by the text, which suggests the text is more likely to be AI-generated. Higher perplexity scores can indicate human-generated text.
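Perplexity has a standard definition: for a text of N tokens, PPL = exp(−(1/N) Σ log p(tokenᵢ | earlier tokens)). The Python sketch below computes it from per-token log-probabilities. Getting those log-probabilities from an actual language model is out of scope here, so the inputs are made-up values chosen only to show the direction of the effect; the function name `perplexity` is our own.

```python
import math
from typing import Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Perplexity from natural-log token probabilities.

    PPL = exp(-(1/N) * sum(log p(token_i | previous tokens)))
    """
    if not token_logprobs:
        raise ValueError("need at least one token")
    avg_negative_log_likelihood = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(avg_negative_log_likelihood)


# Made-up log-probabilities for two 20-token texts.
predictable = [math.log(0.5)] * 20   # model found every token fairly likely
surprising = [math.log(0.05)] * 20   # model found every token unlikely
print(perplexity(predictable))  # approximately 2
print(perplexity(surprising))   # approximately 20
```

Because the probabilities come from a specific model, the same text can receive very different perplexity scores from different models.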

Frequency features refer to the count of how often certain words, phrases, or types of words (like nouns, verbs, etc.) appear in a text. For example, AI-generated text might overuse certain words, underuse others, or use certain types of words at rates that are inconsistent with human writing. These features might help detect AI-generated text.
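As a minimal illustration, the Python sketch below turns raw counts into simple frequency features: how often each word on a watch-list appears per 1,000 words, plus the type-token ratio (unique words divided by total words). The watch-list and the name `frequency_features` are hypothetical; a real detector would use far richer features, such as part-of-speech rates, which this sketch does not compute.

```python
import re
from collections import Counter
from typing import Dict, Iterable


def frequency_features(text: str, watch_words: Iterable[str]) -> Dict[str, float]:
    """Rate per 1,000 words for each watch-list word, plus type-token ratio."""
    tokens = re.findall(r"[a-z']+", text.lower())
    total = len(tokens)
    counts = Counter(tokens)
    features = {
        f"per_1000_{w}": (1000 * counts[w.lower()] / total) if total else 0.0
        for w in watch_words
    }
    # Unique words / total words; lower values mean more repetition.
    features["type_token_ratio"] = (len(counts) / total) if total else 0.0
    return features


sample = "We delve into the data and delve again"
print(frequency_features(sample, ["delve", "tapestry"]))
# {'per_1000_delve': 250.0, 'per_1000_tapestry': 0.0, 'type_token_ratio': 0.875}
```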

Learn about the most commonly used ChatGPT words and phrases, as well as obvious ChatGPT sayings.

Studies have shown that earlier LLMs (i.e., from 2019) would generate text with similar readability scores.
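Readability is usually measured with a formula such as Flesch Reading Ease: 206.835 − 1.015 × (words ÷ sentences) − 84.6 × (syllables ÷ words), where higher scores mean easier text. The Python sketch below is a rough illustration that assumes a crude vowel-group syllable counter and a naive sentence splitter (both our own simplifications; dedicated readability libraries handle these more carefully). The helper names are hypothetical.

```python
import re


def count_syllables(word: str) -> int:
    """Crude heuristic: count groups of consecutive vowels (minimum 1)."""
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def flesch_reading_ease(text: str) -> float:
    """206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)."""
    # Naive sentence split on ., ! and ? (ignores abbreviations, decimals, etc.).
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text needs at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return (206.835
            - 1.015 * (len(words) / len(sentences))
            - 84.6 * (syllables / len(words)))


print(round(flesch_reading_ease("The cat sat. The dog ran."), 2))  # 119.19
```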

Punctuation features pertain to the use and distribution of various punctuation marks in a text. AI-generated text often exhibits correct and potentially predictable use of punctuation.

For instance, it might use certain types of punctuation more often than a human writer would, or it might use punctuation in ways that are grammatically correct but stylistically unusual. By analyzing punctuation patterns, someone might attempt to create a detector that can predict AI-generated content.
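A punctuation profile like this is straightforward to compute. The Python sketch below counts a chosen set of punctuation marks and normalizes each count per 100 words, so texts of different lengths can be compared. The set of marks and the function name `punctuation_profile` are illustrative assumptions, not the features any specific detector uses.

```python
import re
from collections import Counter
from typing import Dict, Sequence

# Illustrative set of marks; "\u2014" is the em dash character.
MARKS = (",", ".", ";", ":", "!", "?", "\u2014", "(", ")", '"')


def punctuation_profile(text: str, marks: Sequence[str] = MARKS) -> Dict[str, float]:
    """Occurrences of each punctuation mark per 100 words of `text`."""
    n_words = len(re.findall(r"\w+", text))
    counts = Counter(ch for ch in text if ch in marks)
    return {m: (100 * counts[m] / n_words) if n_words else 0.0 for m in marks}


profile = punctuation_profile("Hello, world; this is fine. Really?")
print(round(profile[","], 2), profile["!"])  # 16.67 0.0
```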

A zero-shot approach uses a pre-trained language model to identify text generated by a model similar to itself. In essence, the model asks how likely it is that the content it is seeing was generated by a similar version of itself (note: don’t try asking ChatGPT… it doesn’t work like that).

A fine-tuning approach takes a pre-trained language model such as BERT or RoBERTa and trains it further on a set of human-written and AI-generated text. The model learns the differences between the two so it can predict whether content is AI-generated or Original.

The test below looks at the performance of multiple detectors using all of the strategies identified above.

This post covers the main and supporting tests that were all completed on the latest versions of the Originality.ai AI Content Detector.

The dataset(s) provided may apply to your use case; if you are evaluating AI detection tools' effectiveness on a different type of content, you will likely need to produce your own dataset.

Use our Open-Source Tool to make running your data and evaluating detectors' performance much easier.

To make running tests easy, repeatable, and accurate, we built these tools and open-sourced them so others can do the same. The main tool lets you enter API keys for multiple AI content detectors, plug in your own data, and receive not just each detector's results but also a complete statistical analysis of detection effectiveness.

This tool makes it incredibly easy for you to run your test content against all AI content detectors that have an available API.

We built and open-sourced this testing tool to increase transparency in testing by…

Given how quickly new LLMs are launching and AI detection is evolving, accuracy studies that take 4 months from testing to publication are hopelessly outdated by the time they appear.

Features of This Tool:

Link to GitHub: https://github.com/OriginalityAI/AI-detector-research-tool

In addition to the tool mentioned above, we have provided three additional ways to easily run a dataset through our tool…

We do not believe that AI detection scores alone should be used for academic honesty purposes and disciplinary action.

The rate of false positives (even if low) is still too high to be relied upon for disciplinary action.

Here is a guide we created to help writers or students reduce false positives in AI content detector usage.

Plus, we created a free AI detector Chrome extension to help writers, editors, students, and teachers visualize the creation process and prove originality.

Our newly released Academic model is best for educators and academic settings, as it allows for light AI editing with popular tools like Grammarly (grammar and spelling suggestions) and is designed to be accurate on STEM-related content.

Learn more about Originality.ai for Education.

Below are the best practices and methods used to evaluate the effectiveness of AI classifiers (i.e., AI content detectors). There is some nerdy data below, but if you are looking for even more info, here is a good primer for evaluating the performance of a classifier.

A single number related to a detector's effectiveness is useless without additional context!

Don’t trust a SINGLE “accuracy” number without additional context.

Here are the metrics we look at to evaluate a detector's efficacy…

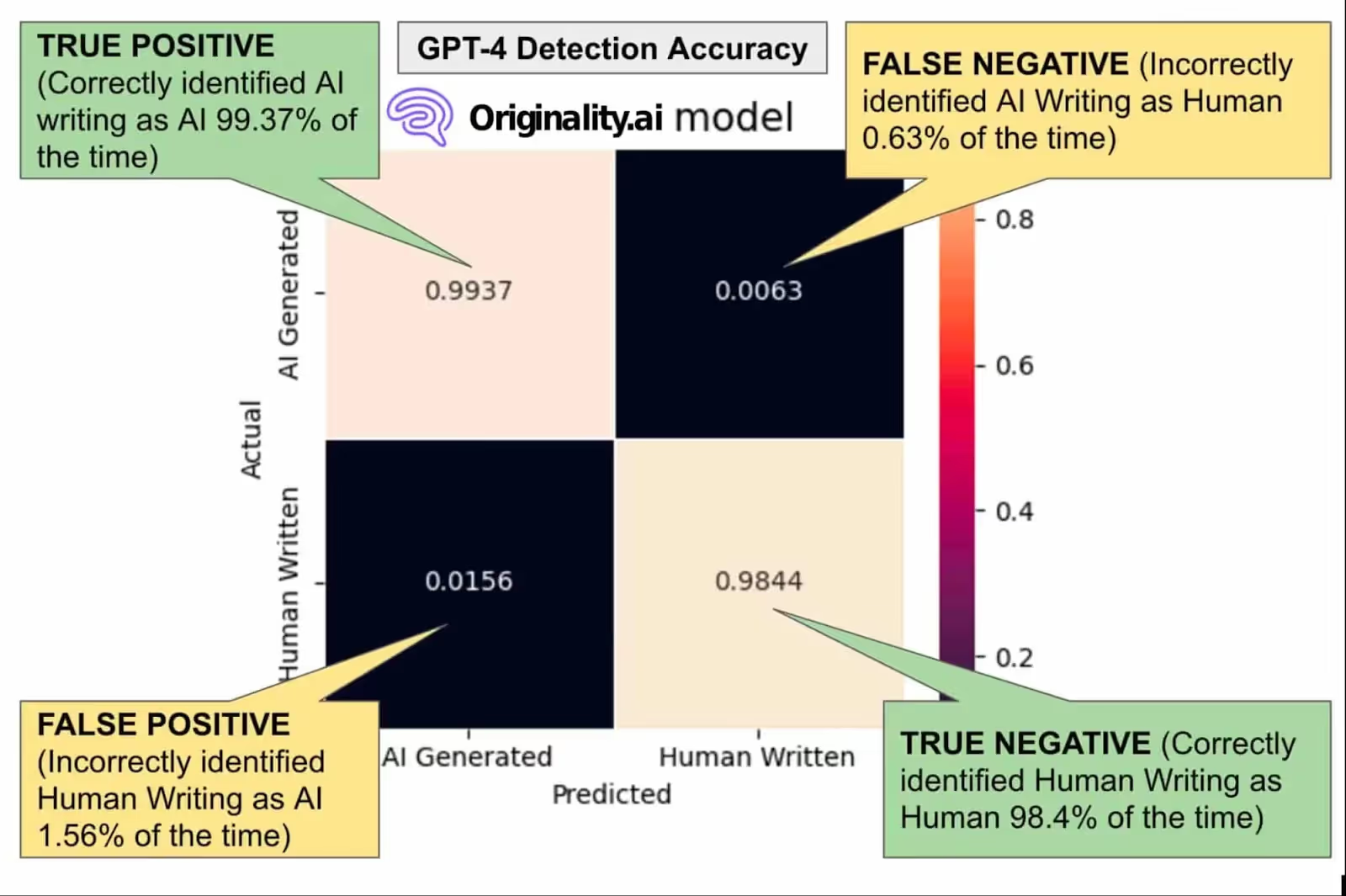

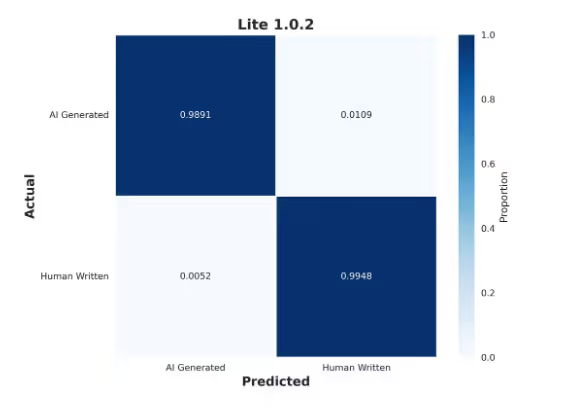

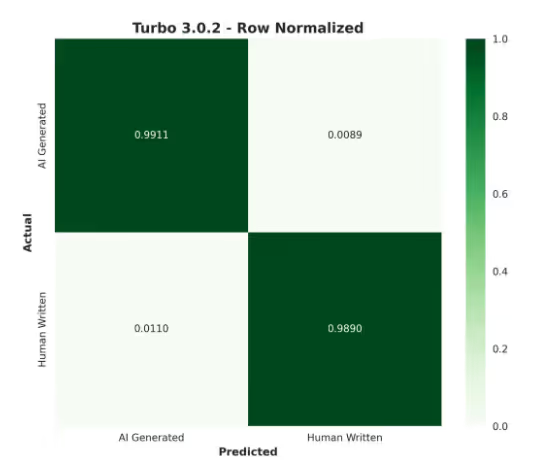

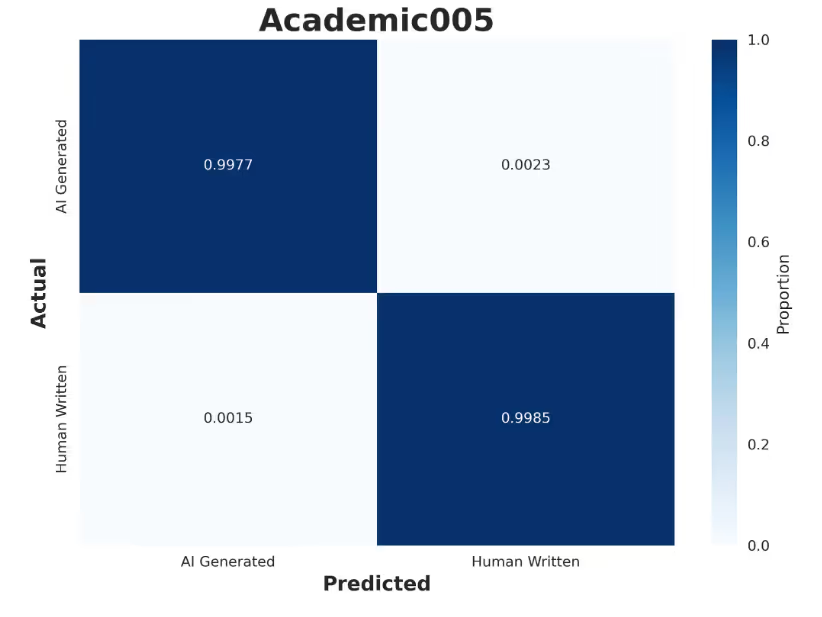

Together, the confusion matrix and the F1 score (more on it later) are the most important measures we look at. A confusion matrix shows, in one image, how well an AI model correctly identifies both Original and AI-generated content.

Identifies AI content correctly x% of the time: the True Positive Rate (TPR), also known as sensitivity, hit rate, or recall.

Identifies human content correctly x% of the time: the True Negative Rate (TNR), also known as specificity or selectivity.
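For readers who want to reproduce these numbers on their own data, here is a minimal Python sketch that builds the four confusion-matrix counts from labels and derives the true positive rate, true negative rate, and false positive rate. It assumes AI-generated text is the positive class (label 1) and human text is label 0; the function names and example labels are made up for illustration and are not results from our tests.

```python
from typing import Dict, Sequence


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, int]:
    """Confusion-matrix counts with AI = 1 (positive) and human = 0 (negative)."""
    pairs = list(zip(y_true, y_pred))
    return {
        "tp": sum(1 for t, p in pairs if t == 1 and p == 1),  # AI flagged as AI
        "fn": sum(1 for t, p in pairs if t == 1 and p == 0),  # AI missed
        "tn": sum(1 for t, p in pairs if t == 0 and p == 0),  # human passed as human
        "fp": sum(1 for t, p in pairs if t == 0 and p == 1),  # human flagged as AI
    }


def rates(c: Dict[str, int]) -> Dict[str, float]:
    """Assumes the test set contains both AI (1) and human (0) samples."""
    return {
        "tpr": c["tp"] / (c["tp"] + c["fn"]),  # sensitivity / recall
        "tnr": c["tn"] / (c["tn"] + c["fp"]),  # specificity
        "fpr": c["fp"] / (c["fp"] + c["tn"]),  # false positive rate = 1 - TNR
    }


# Hypothetical test set: 100 AI documents followed by 100 human documents.
y_true = [1] * 100 + [0] * 100
y_pred = [1] * 95 + [0] * 5 + [0] * 98 + [1] * 2
counts = confusion_counts(y_true, y_pred)
print(counts)         # {'tp': 95, 'fn': 5, 'tn': 98, 'fp': 2}
print(rates(counts))  # {'tpr': 0.95, 'tnr': 0.98, 'fpr': 0.02}
```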

Accuracy answers the question: what % of your predictions were correct? On its own, accuracy can be misleading, for example on a test set dominated by one class, where a detector can score well while missing most of the other class. This is partly why you should be skeptical of AI detectors' claimed “accuracy” numbers when they provide no additional details. The following metric is what we use, along with our open-source tool, to measure accuracy.

The F1 score combines recall and precision into a single measure, which makes it useful for ranking multiple detectors. It is the harmonic mean of precision and recall (sensitivity).
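The Python sketch below shows both why a lone accuracy figure can mislead and how F1 is calculated (precision = TP / (TP + FP), recall = TP / (TP + FN), F1 = 2 × precision × recall / (precision + recall)). The counts are hypothetical: on a test set of 50 AI and 950 human documents, a "detector" that labels everything as human still scores 95% accuracy but has an F1 of 0.

```python
from typing import Tuple


def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    return (tp + tn) / (tp + tn + fp + fn)


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """F1 is the harmonic mean of precision and recall; 0.0 when undefined."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


# Hypothetical imbalanced test set: 50 AI documents, 950 human documents.
# Detector A labels everything as human.
print(accuracy(tp=0, tn=950, fp=0, fn=50))     # 0.95
print(precision_recall_f1(tp=0, fp=0, fn=50))  # (0.0, 0.0, 0.0)

# Detector B catches 45 of the 50 AI documents but flags 10 human documents.
print(accuracy(tp=45, tn=940, fp=10, fn=5))        # 0.985
print(precision_recall_f1(tp=45, fp=10, fn=5)[2])  # about 0.857
```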

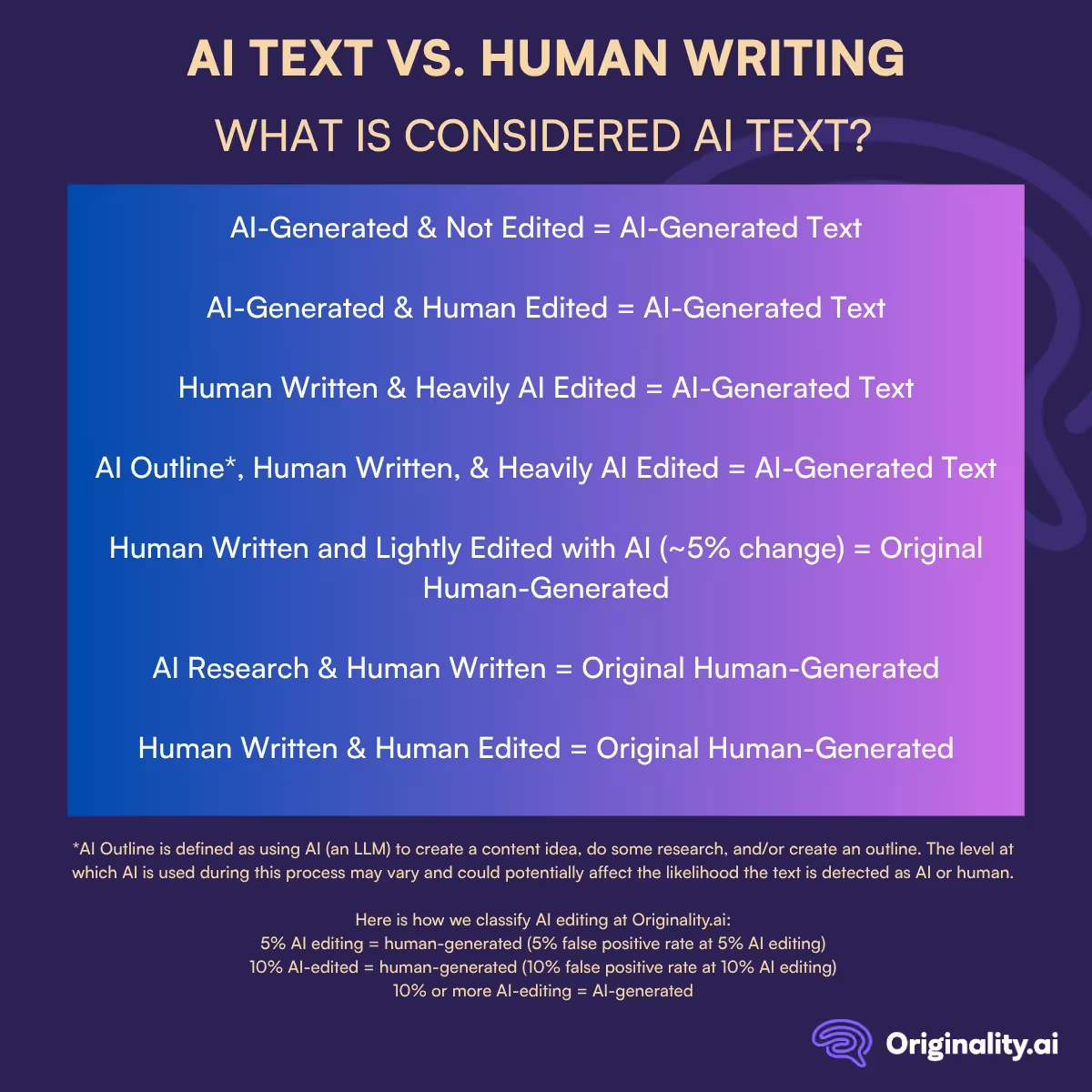

So, what should and should not be considered AI content? As “cyborg” writing that combines human and AI assistance becomes more common, the answer is tricky!

Some studies have made very strange decisions about what to treat as “ground truth” human or AI-generated content.

In fact, one study took human-written text in multiple languages, translated it into English using AI tools, and labeled the result “ground truth” human content.

Source: https://arxiv.org/pdf/2306.15666.pdf

In its description of the dataset, the study classifies the AI-translated dataset (02-MT) as human-written???

We think this approach is crazy!

Our position is that if running content through a machine produces output that is unrecognizable when compared with the original document, an AI detector should aim to identify that output as AI-generated.

Otherwise, any content could be translated and presented as Original work, since it would pass both AI and plagiarism detection.

As the way people write evolves, AI tools are increasingly used for research and editing.

AI editing is the process of using an AI-powered tool as support to correct grammar, punctuation and spelling.

At Originality.ai, we offer an AI Grammar Checker to help you catch common errors like spelling mistakes, comma splices, or grammatical errors (like confusing when to use they’re vs. their).

However, where things get tricky with AI editing is when a tool offers AI-powered rephrasing features that effectively rewrite sentences for you. For instance, Grammarly, a popular writing tool, offers AI rephrasing that can trigger AI detection.

As AI editing tools become increasingly popular, we’ve set targets to define AI editing:

Here is what we think should and should not be classified as AI-generated content:

*AI Outline is defined as using AI (an LLM) to create a content idea, do some research, and/or create an outline. The level at which AI is used during this process may vary and could potentially affect the likelihood the text is detected as AI or human.

Some journalists, such as Kristi Hines, have done a great job of evaluating what counts as AI content and whether AI content detectors should be trusted by reviewing several studies: https://www.searchenginejournal.com/should-you-trust-an-ai-detector/491949/.

Review a meta-analysis of AI detector accuracy studies for further insight into the efficacy of AI detectors.

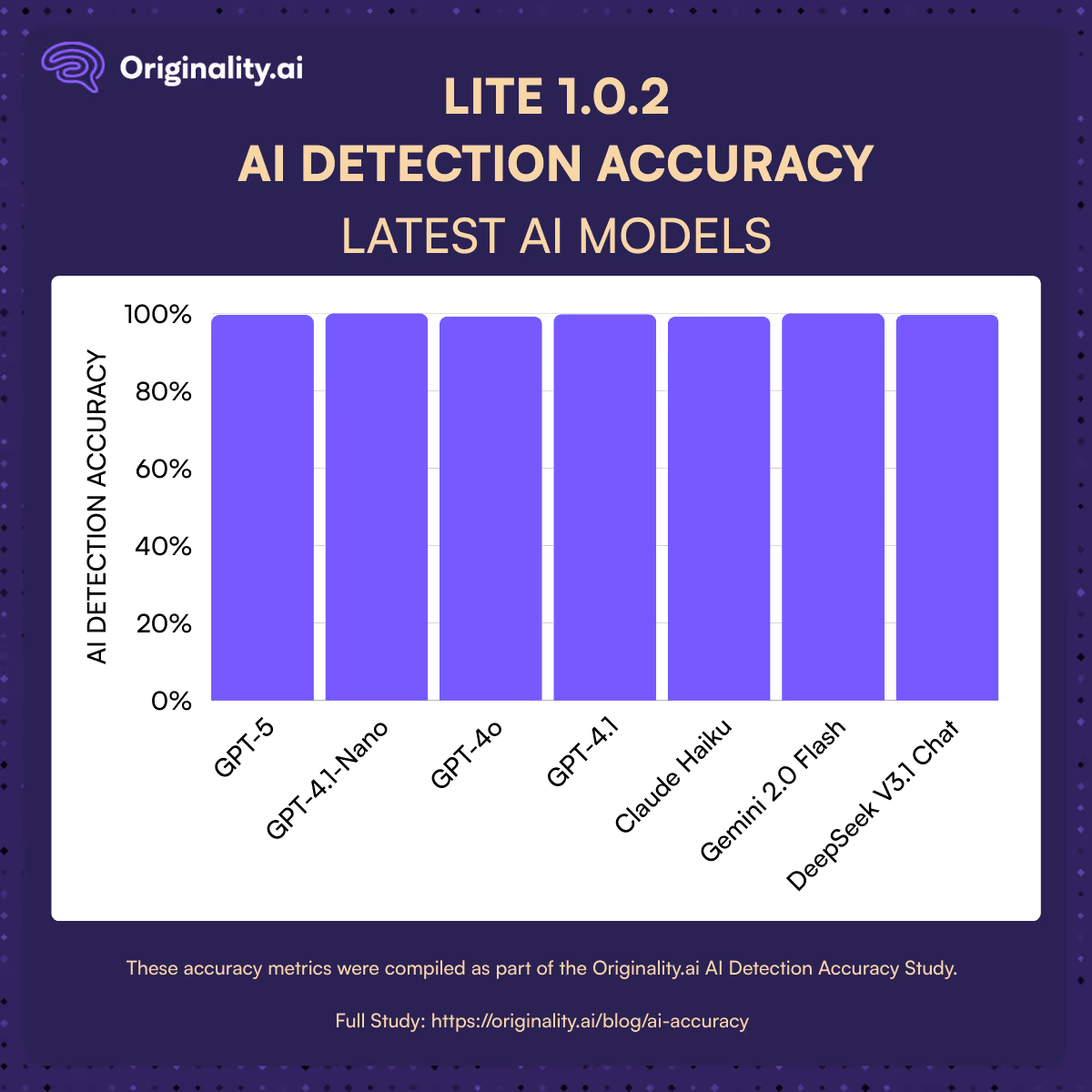

In September 2025, we released the updated, more robust Lite 1.0.2 AI detection model.

Why?

Advanced AI language models, such as OpenAI's GPT series, Anthropic's Claude, and Google's Gemini, are evolving rapidly and producing increasingly human-like text.

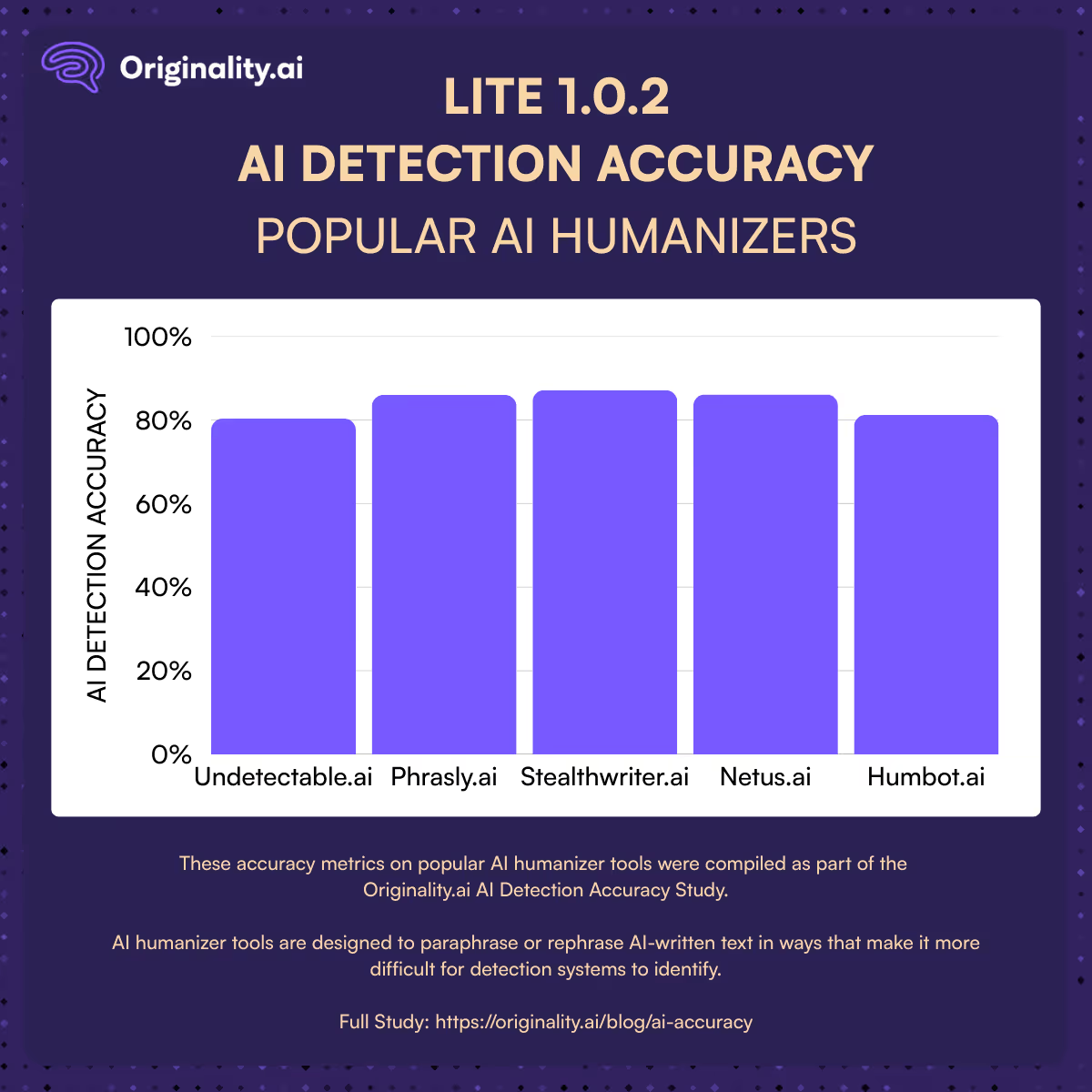

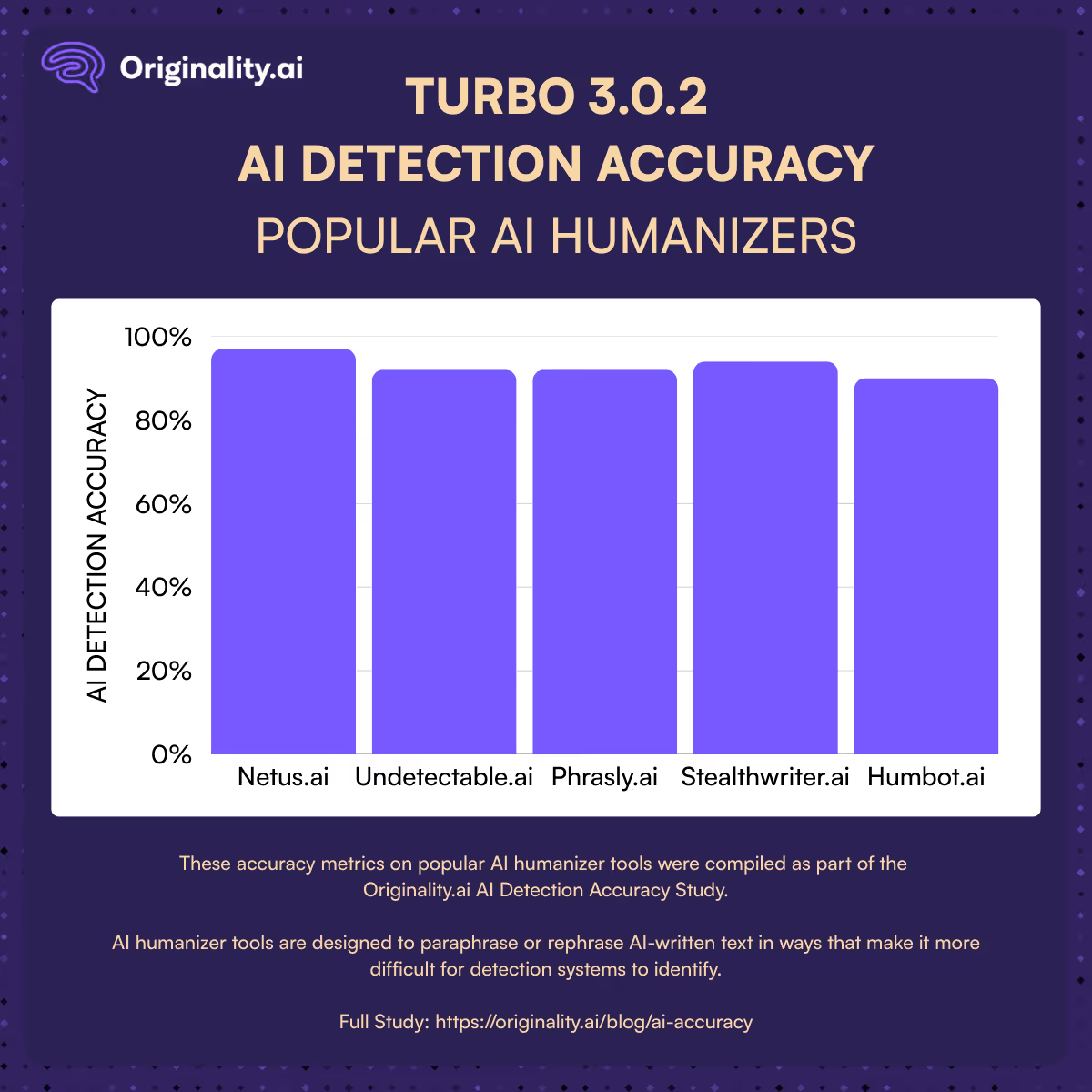

At the same time, "AI Humanizer" tools — designed specifically to obfuscate AI-generated content and evade detection systems — are also increasing in popularity.

In response to these developments, we developed Lite 1.0.2, designed to accurately identify content generated by the latest AI models and humanizer tools, while maintaining a low false positive rate (ensuring that human-written content is not incorrectly flagged as AI-generated).

We evaluate our AI detection model on outputs from various state-of-the-art and widely used language models to assess its robustness across generations of AI systems.

This testing included accuracy evaluations with some of the latest AI models. Here’s a quick overview:

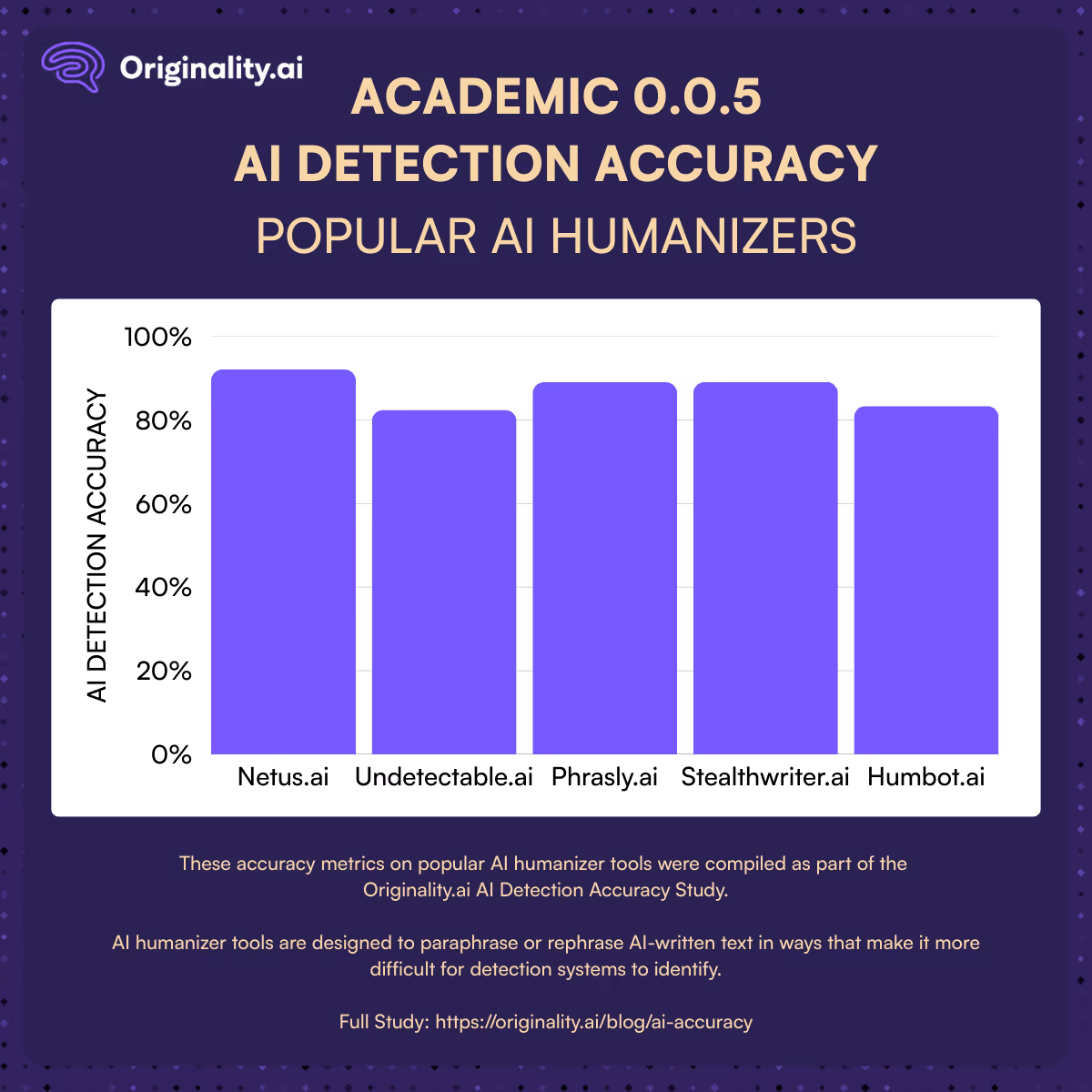

To further test the robustness of our detection model, we evaluate its performance against AI-generated content that has been deliberately modified using AI humanizer tools.

Most AI humanizer tools are designed to paraphrase or rephrase AI-written text in ways that make it more difficult for detection systems to identify. Some AI humanizer tools, like ours, are instead designed to make content sound more natural, not to bypass detection.

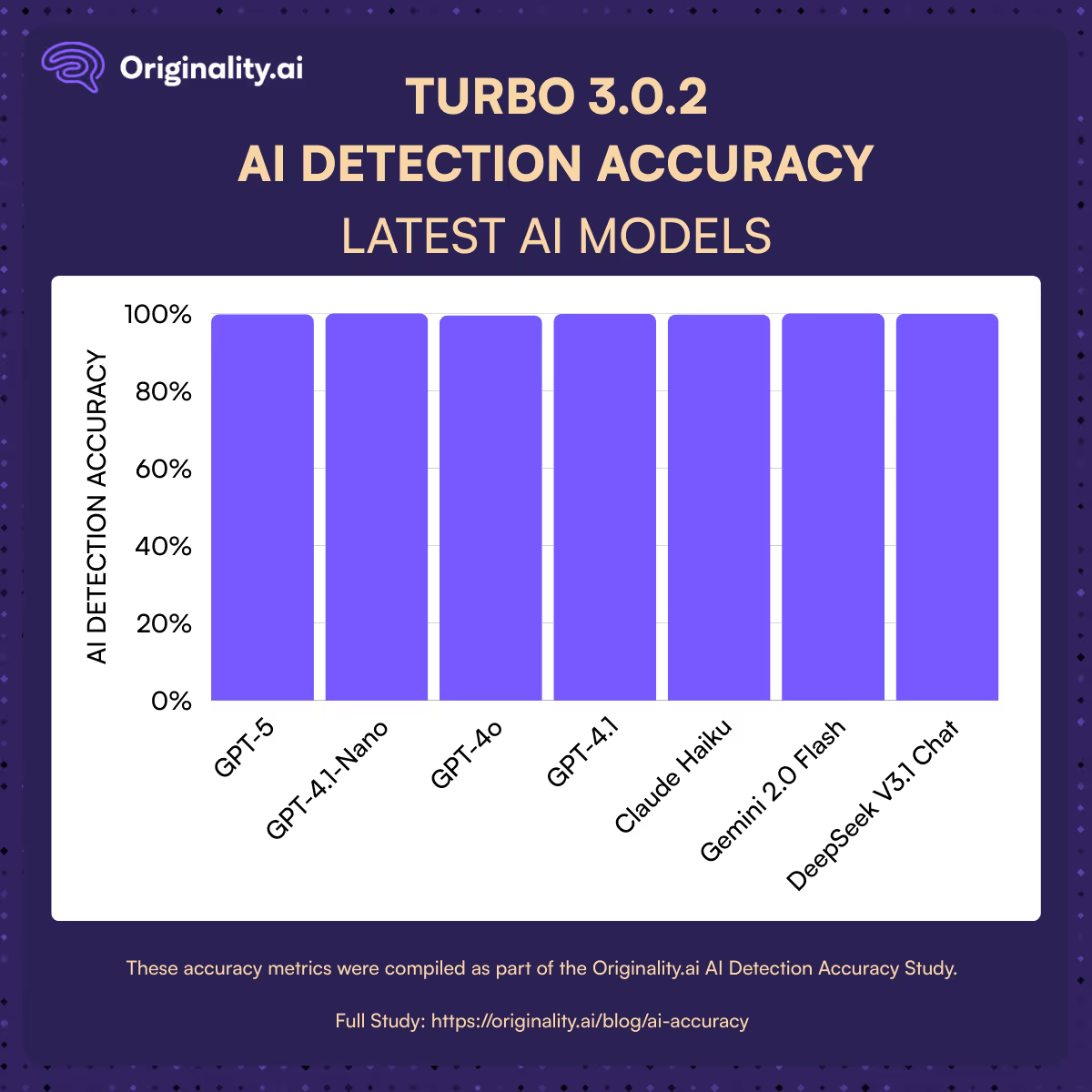

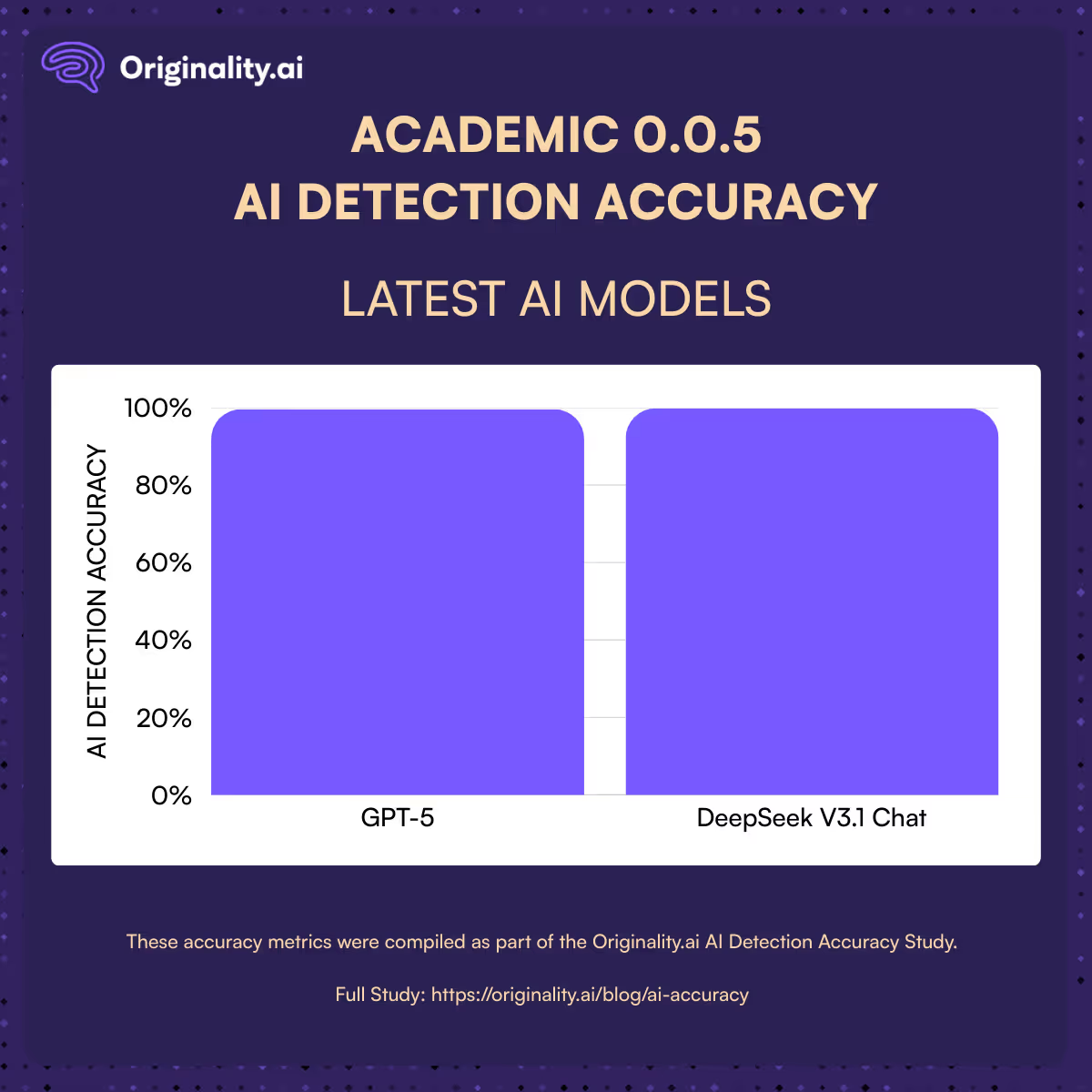

AI content continues to evolve rapidly, especially with the release of the GPT-5 family and the new DeepSeek reasoning models.

Academic and enterprise contexts require detectors that minimize false negatives (FN) to avoid missing AI-generated content, while keeping false positives (FP) low to protect human authors.

Turbo 3.0.2 is an upgraded model that significantly improves detection accuracy. It introduces enhanced defenses against AI humanizer tools and demonstrates consistent robustness across public human-written datasets.

As with our Lite model, we evaluate our Turbo AI detection model on outputs from the latest flagship LLMs.

This testing included accuracy evaluations with some of the latest AI models. Here’s a quick overview:

Turbo 3.0.2 is robust in real-world adversarial conditions, delivering up to 97% accuracy against top AI humanizers and AI bypassers.

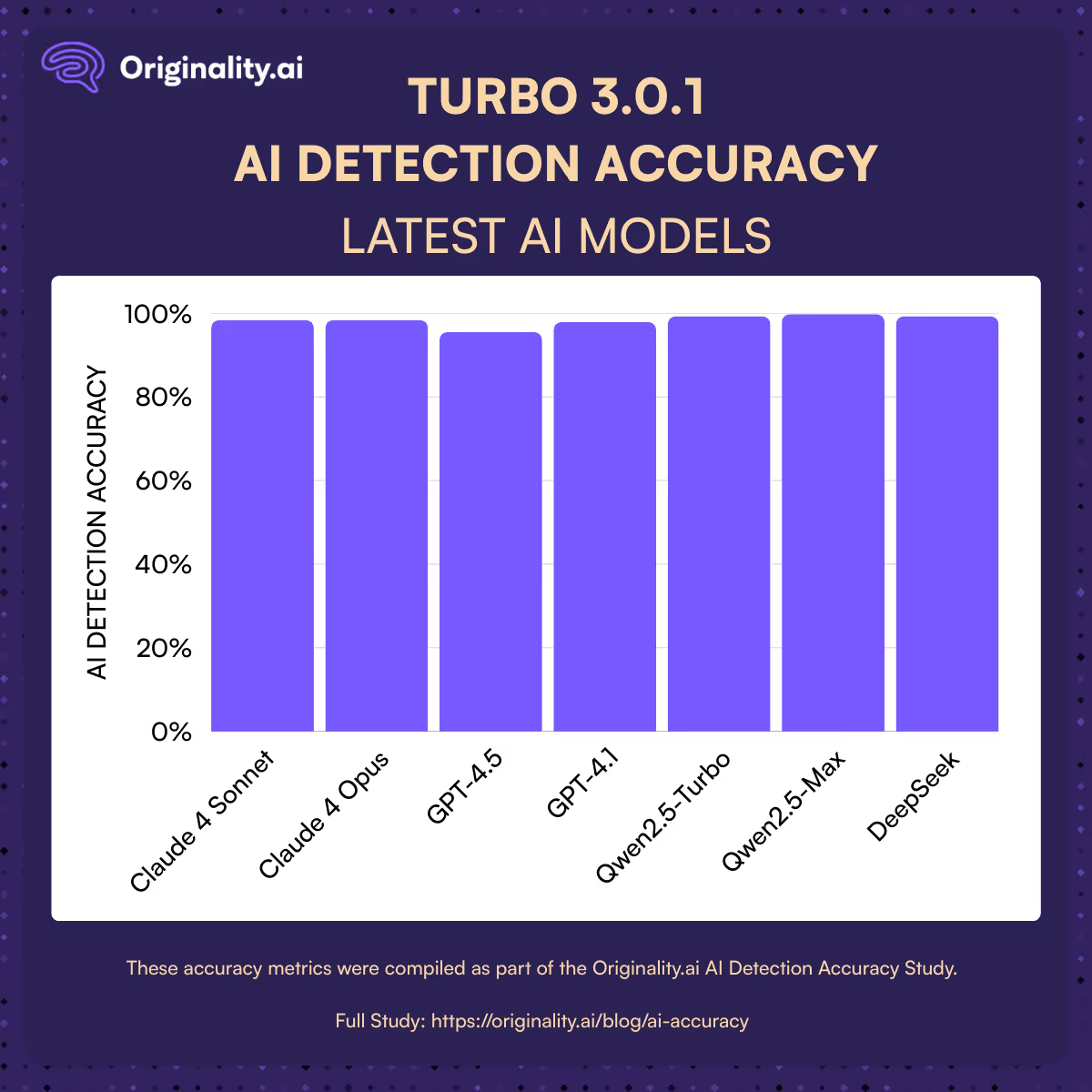

See past studies on Turbo 3.0.1 for a historical look at some of our previous testing, conducted as the latest AI models were released:

Our newly released 3.0.2 Turbo model has continued to show exceptional accuracy and improvements, reflecting the dedicated work of our machine learning engineers to train our AI detection models and improve accuracy as new LLMs continue to be released.

As with our Lite and Turbo models, we evaluate our Academic AI detection model on outputs from the latest flagship LLMs.

This testing included accuracy evaluations with some of the latest AI models. Here’s a quick overview:

Despite the strong paraphrasing capabilities of AI humanizers, the Originality.ai Academic 0.0.5 Model delivered strong accuracy, up to 92% against top AI humanizers and AI bypassers.

Here are additional studies completed by third parties, with findings showing Originality.ai to be the most accurate…

Summaries of these studies: Meta-Analysis of AI Detection Accuracy

The end result?

Across both internal testing and third-party studies, we continue to outperform competitors as the Most Accurate AI Detector.

Below is a list of all AI content detectors and a link to a review of each. For a more thorough comparison of all AI detectors and their features, have a look at this post: Best AI Content Detection Tools

List of Tools:

As these tests have shown, not all tools are created equal! There have been many quickly created tools that simply use a popular Open Source GPT-2 detector.

Below are a few of the main reasons we suspect Originality.ai’s AI detection performance and overall AI detector accuracy are significantly better than alternatives…

The AI/ML team and core product team at Originality.ai have worked relentlessly to build and improve on the most effective AI content detector!

Originality.ai Launches Lite 1.0.2

Originality.ai Launches Version 3.0.2 Turbo

Originality.ai Launches Version 0.0.5 Academic

We hope this post helps you understand more about AI detectors and AI detector accuracy, and gives you the tools to complete your own analysis if you want to.

Our hope is that this study has moved us closer to transparent, accountable AI detection, and that our open-source initiatives will help others do the same.

If you have any questions on whether Originality.ai would be the right solution for your organization, please contact us.

If you are looking to run your own tests, please contact us. We are always happy to support any study (academic, journalist, or curious mind).

Additionally, to learn more about how Originality.ai performs in third-party academic research and studies, review our meta-analysis of accuracy studies.

Try our AI detector for yourself.
