As artificial intelligence becomes increasingly integrated into education, publishing, and digital communications, reliably detecting AI-generated content — especially in underrepresented languages like Arabic — has become crucial.

A major study, “The Arabic AI Fingerprint: Stylometric Analysis and Detection of Large Language Models Text,” rigorously tested the performance of state-of-the-art AI content detectors across a range of Arabic datasets.

Here’s how Originality.ai’s multilingual AI detector stacked up.

Learn more about AI detection accuracy in our AI Detection Accuracy Review and our Meta-Analysis of Third-Party AI Detection Studies, and get further insight into our Multilingual AI Detector.

The study, conducted by researchers at King Fahd University of Petroleum and Minerals, set out to answer a pressing question: Can current AI detectors distinguish between human-written and AI-generated Arabic text?

It evaluated human-written and AI-generated content from academic and social media sources, with the AI text produced by leading large language models (LLMs), using a suite of stylometric and machine learning methods.

The study and our benchmark used standard metrics for evaluating AI detection accuracy, including accuracy, precision, recall, and F1-score.

All metrics were calculated separately for each text source (Human, ALLaM, Jais, Llama, OpenAI).

In the research paper, the authors trained their detector as a multi-class classifier: for each input text, the model predicts whether it was written by a human or by one of the AI models (ALLaM, Jais, Llama, OpenAI).

This lets them calculate per-class Precision, Recall, and F1-score for every label, including “Human”, because the classifier can make several kinds of mistakes (e.g., labeling a human text “ALLaM” or “Jais”).
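To make the per-class evaluation concrete, here is a minimal sketch of one-vs-rest Precision, Recall, and F1-score for a multi-class detector. It assumes predictions are available as plain string labels; the function name `per_class_metrics` and the toy labels are hypothetical and are not taken from the study's code or data. A metric whose denominator is zero is reported as 0.0, which is an assumed convention.

```python
# Labels used in the study; the helper below is a hypothetical illustration.
LABELS = ["Human", "ALLaM", "Jais", "Llama", "OpenAI"]


def per_class_metrics(y_true, y_pred, labels=LABELS):
    """Return {label: (precision, recall, f1)}, treating each label one-vs-rest."""
    pairs = list(zip(y_true, y_pred))
    results = {}
    for label in labels:
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)  # wrongly given this label
        fn = sum(1 for t, p in pairs if t == label and p != label)  # this label missed
        # Assumed convention: a metric with a zero denominator is 0.0.
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results[label] = (precision, recall, f1)
    return results


if __name__ == "__main__":
    # Toy example: one human text is mislabeled as "Jais".
    y_true = ["Human", "Human", "Jais", "ALLaM", "OpenAI", "Llama"]
    y_pred = ["Human", "Jais", "Jais", "ALLaM", "OpenAI", "Llama"]
    for label, (p, r, f) in per_class_metrics(y_true, y_pred).items():
        print(f"{label}: precision={p:.2f} recall={r:.2f} f1={f:.2f}")
```

In the toy example, the single human text labeled “Jais” lowers recall for “Human” (0.50) and precision for “Jais” (0.50). A binary detector would score the same error simply as a false positive.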

However, for a fair comparison with Originality.ai, which only distinguishes “AI” from “Human”, it makes sense to simplify the evaluation on human data:

Definition: FPR (False Positive Rate) – For the human dataset, if the model predicts any label other than “Human” for a sample, it is counted as a false positive. The FPR is then the share of human-written samples counted as false positives.
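The simplified FPR can be sketched in a few lines. This assumes each prediction is a string label: either one of the multi-class labels from the study or a binary “AI”/“Human” output. The helper names `to_binary` and `false_positive_rate` are hypothetical. Because every sample in a human-only dataset is a true negative candidate, FPR = FP / (FP + TN) reduces to the fraction of samples not predicted as “Human”.

```python
def to_binary(label):
    """Collapse any non-"Human" label (ALLaM, Jais, Llama, OpenAI, or "AI") to "AI"."""
    return "Human" if label == "Human" else "AI"


def false_positive_rate(human_predictions):
    """FPR on a human-only dataset: share of samples predicted as anything but Human."""
    if not human_predictions:
        raise ValueError("need at least one prediction")
    false_positives = sum(1 for p in human_predictions if to_binary(p) == "AI")
    # All samples are human-written, so FP + TN equals the number of samples.
    return false_positives / len(human_predictions)


if __name__ == "__main__":
    multi_class = ["Human", "Jais", "Human", "OpenAI"]  # toy multi-class output
    binary = ["Human", "AI", "Human", "Human"]          # toy binary output
    print(false_positive_rate(multi_class))  # 2 of 4 flagged
    print(false_positive_rate(binary))       # 1 of 4 flagged
```

Routing both output styles through `to_binary` applies the same rule to a multi-class research model and a binary detector, which is the point of the simplification.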

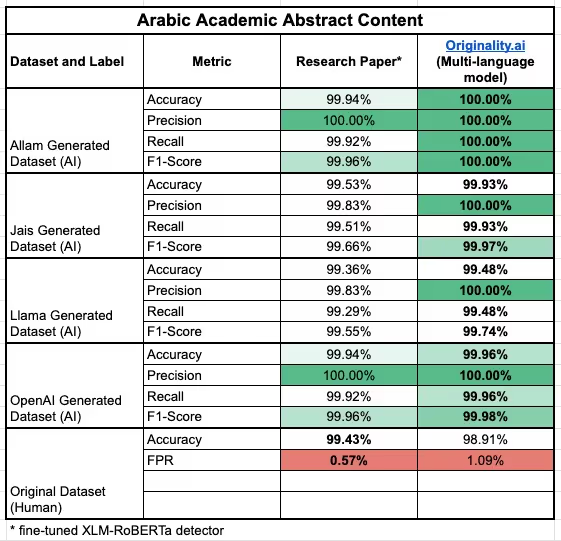

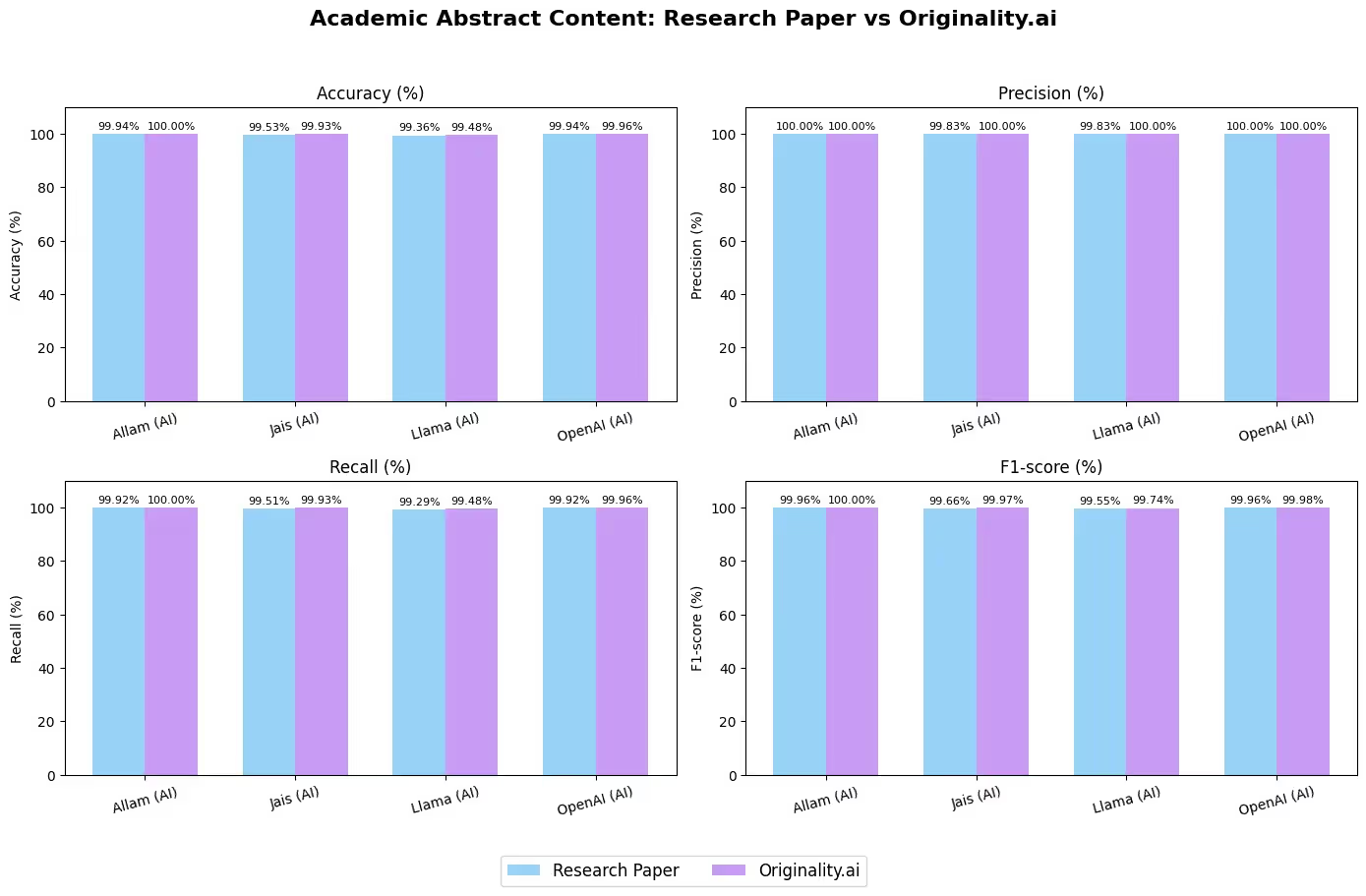

Originality.ai achieved perfect (100%) or near-perfect accuracy and F1-scores on all AI-generated academic abstract datasets, outperforming the study’s own fine-tuned detectors.

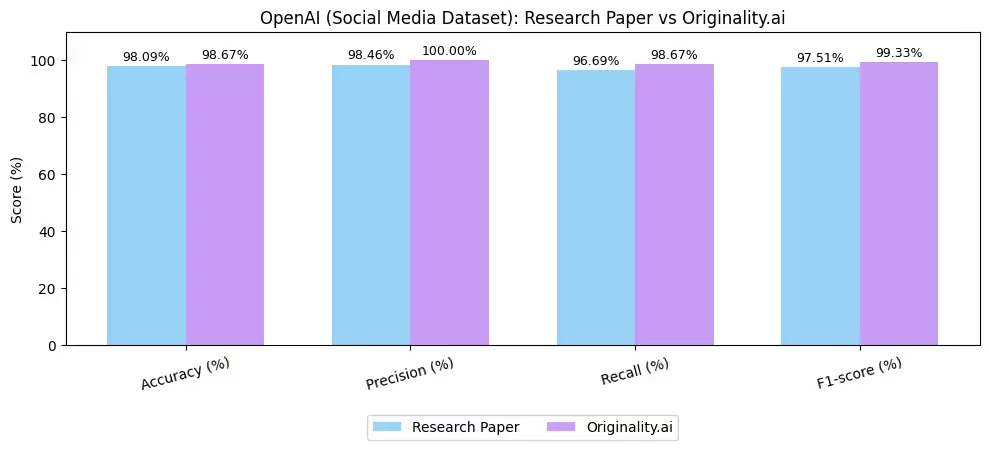

For OpenAI-generated social media posts, Originality.ai reached F1-scores above 99%, higher than the study’s baseline.

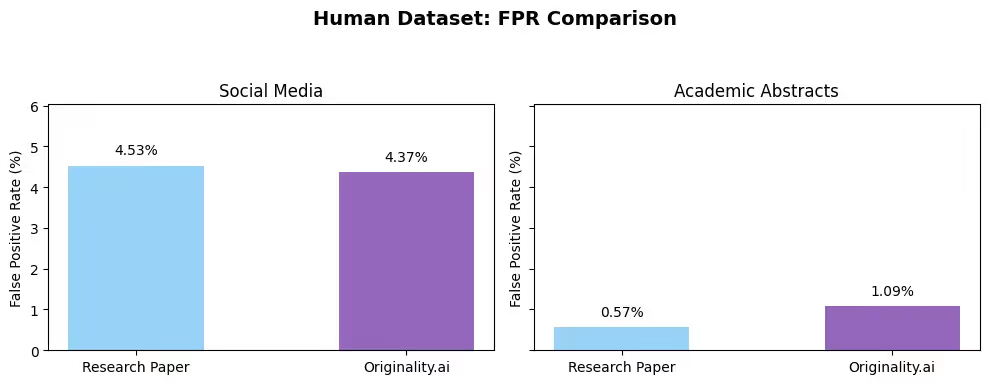

Across both academic and social media datasets, Originality.ai kept the false positive rate low (as low as 1.09% on academic abstracts and 4.37% on social media posts), so human writing is rarely misclassified.

Originality.ai’s multilingual AI detection tool isn’t just a contender; it’s a leader in detecting AI-generated Arabic text. Its results consistently match or exceed the study’s best models, achieving industry-leading accuracy with minimal false positives.

For educators, publishers, and institutions looking to maintain integrity by detecting AI-generated Arabic content, Originality.ai’s AI detector delivered the most accurate results in this benchmark.

Further Reading:

According to the 2024 study, “Students are using large language models and AI detectors can often detect their use,” Originality.ai is highly effective at identifying AI-generated and AI-modified text.