AI is becoming increasingly prevalent across industries, from web publishing to marketing and even education.

Yet, considering AI’s tendency to produce AI hallucinations (incorrect information presented as fact), such as with the 2025 AI book list scandal, establishing ways to confirm the veracity of information is of the utmost importance.

However, how can you tell which fact-checking solution is the most accurate and effective?

That’s where our AI fact-check accuracy study comes in.

Learn about our fact-checking benchmarking, datasets, and find out how the accuracy of the Originality.ai fact checker compares to GPT-4o and GPT-5.

Plus, get insights into why fact-checking is important and the AI hallucination rates of popular LLMs according to third-party research studies.

First, let’s take a quick look at what fact-checking is (and why it is important in 2025).

Quick Answer: Fact-checking is the rigorous process of verifying the accuracy and authenticity of information.

To provide further context, fact-checking may be conducted across various forms of content, such as:

This means that fact-checking is incorporated into a number of different use cases, including:

In an age where information can be shared at lightning speed, the spread of misinformation can have profound consequences, from influencing public opinion to negatively impacting brand reputation to endangering public health and safety.

The importance of fact-checking is multifold:

While LLMs are constantly improving, the potential for AI hallucinations has become a well-known limitation of AI tools.

As a quick recap, an AI hallucination occurs when AI presents something that is incorrect as a fact. If this ‘hallucination’ is then missed during editorial review and published, it can lead to a number of consequences, from spreading misinformation to damaging brand reputation.

Research is still being conducted into AI hallucinations and LLMs, but here’s a quick look at AI hallucination rates based on some of the latest findings:

Findings differed for the exact hallucination rates across the studies, depending on sample size, as well as the material/facts tested, and the testing methodology.

For instance, whereas the paper available via PubMed conducted a study to determine if a paper was hallucinated based on whether it produced two of the wrong “title, first author, or year of publication,” the Stanford Study focused on testing hallucinations in response to “specific legal queries.”

Yet, the key theme remains that AI models and popular LLMs do hallucinate, which, unnoticed and without proper fact-checking, can pose significant problems.

The objective of this benchmarking effort was twofold:

The intent was to stress test the fact checker using challenging, real-world claims and measure its ability to deliver robust True/False judgments.

We sought to evaluate the checker against a diverse set of datasets to avoid overfitting to any one claim type or category. The goal was to test general performance, not just domain-specific accuracy.

Below are the datasets we considered and our verdicts:

Consists of 185,445 human-generated claims based on Wikipedia sentences (2018 snapshot), classified as Supported, Refuted, or NotEnoughInfo, with annotated evidence. This dataset is widely considered the standard benchmark for fact-checking research.

Verdict: Use it, but sample 1,000 claims for practicality, since we want to re-run the benchmark frequently.

Dataset: Fever Paper

A compact dataset (1.4k expert-written scientific claims) with high annotation agreement (Cohen’s κ ≈ 0.75). Scientific claims tend to be less ambiguous, making this a high-quality dataset.

Verdict: Use it in its entirety.

Dataset: SciFact Paper

Knowledge-graph-based dataset of 108k synthetic claims (one-hop, multi-hop, negation, etc.). While it offers perfect labeling due to deterministic graph generation, claims follow strict subject–predicate–object templates and DBpedia 2015 snapshot is outdated. This makes it less challenging for a text-evidence fact checker and poor for stress testing.

Verdict: Skip it.

Dataset: FactKG Paper

A 2023 dataset of 4,568 real-world claims from 50 fact-checking organizations. Each claim includes supporting evidence and detailed justifications. Unlike synthetic datasets, these claims are not trivially generated and require careful reasoning to verify. According to the paper, “any claim included in the dataset is deemed interesting enough to be worth the time of a professional journalist”.

Verdict: Use it, full size.

Dataset: AVeriTeC Paper

Because Originality.ai’s fact checker outputs only True/False labels (no 'No Evidence' class), we filtered each dataset to retain only claims with binary outcomes.

FEVER’s large size required sampling 1,000 claims. After running three checkers (Originality, GPT-4o, GPT-5) on this sample, we manually inspected 176 disputed claims where there was at least one disagreement between original labels and checker predictions. We dropped 72 ambiguous claims and corrected mislabeled ones, resulting in a final sample of 928 high-quality claims.

SciFact and AVeriTeC were filtered similarly for True/False claims, resulting in 693 and 3017 claims, respectively.

Performance was measured on FEVER 1k, SciFact, and AVeriTeC datasets, then aggregated.

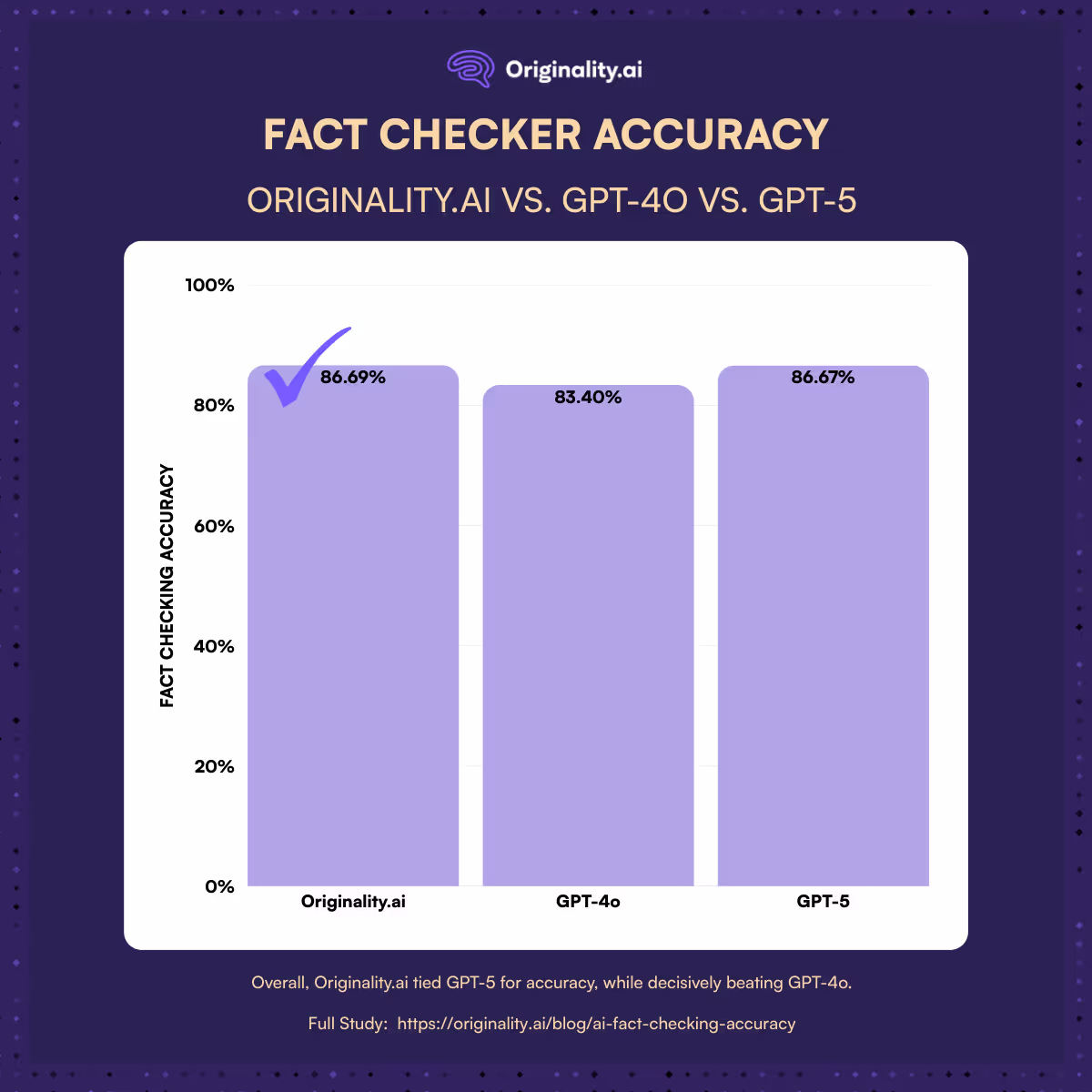

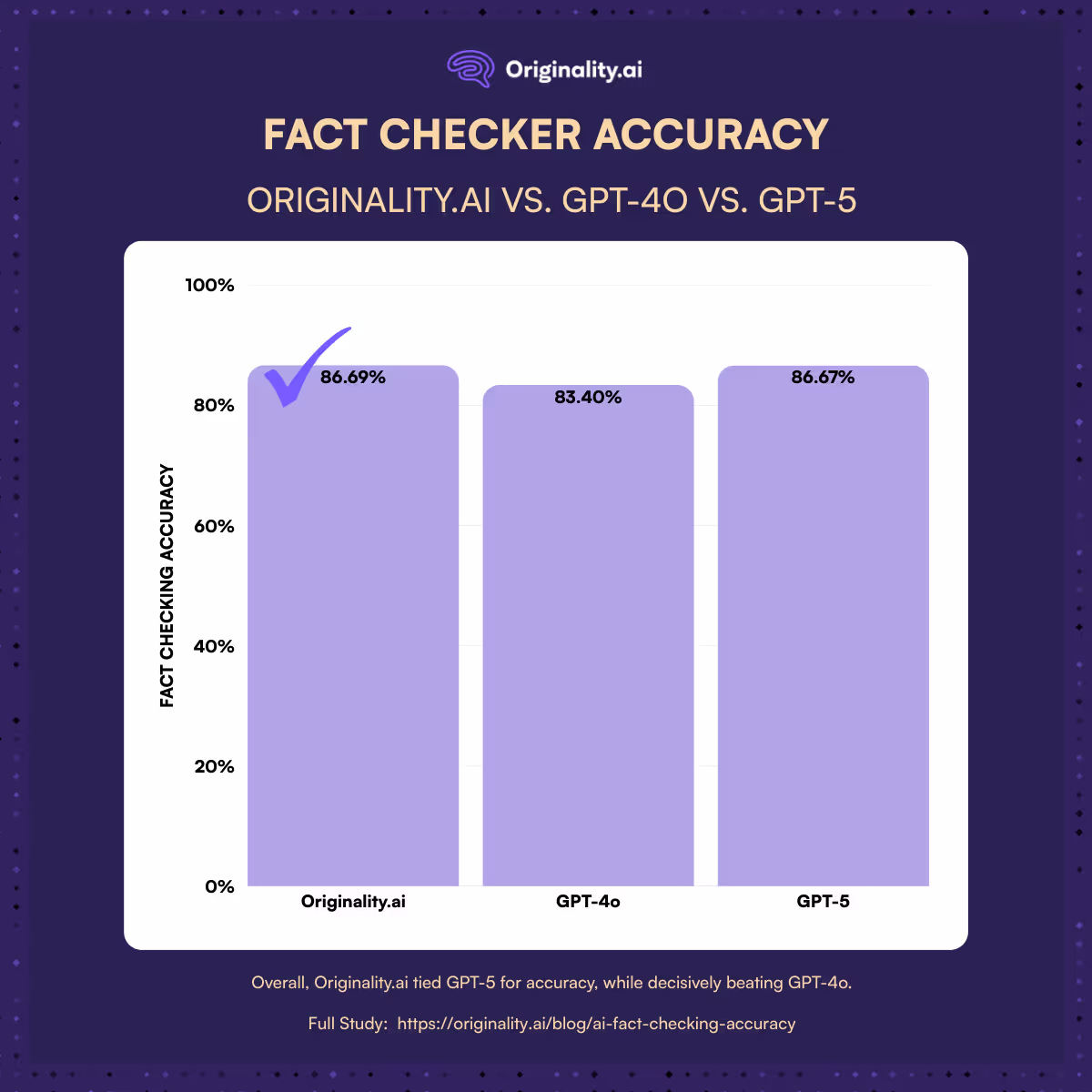

Across all three datasets, Originality.ai delivered the best recall (0.835) and tied GPT-5 for accuracy, while decisively beating GPT-4o.

GPT-5 achieved an almost perfect score (Accuracy ≈ 0.996), but Originality.ai was close behind and performed consistently with high precision and recall.

While GPT-5 edged out a small win in raw accuracy (0.840), it did so by sacrificing recall (0.669 vs Originality’s 0.746), meaning it missed far more true claims. Originality.ai’s balanced approach is arguably more trustworthy, especially for use cases where missing true information carries a cost.

Originality.ai outperformed GPT-4o and GPT-5 across all metrics on SciFact, showcasing its ability to handle scientific claims with superior reliability. This result highlights its strength in cases where precision and recall must both be high.

Not only is the Originality.ai fact checker accurate, it’s also easy to use.

Here’s a quick 3-step process on how to check facts with Originality.ai:

Originality.ai showed strong, balanced performance across all benchmarks.

It dominated SciFact, stayed close to GPT-5 on FEVER and AVeriTeC, and clearly outperformed GPT-4o.

Its higher recall means it misses fewer true claims, which is critical when the goal is to surface as many correct facts as possible.

Overall, Originality.ai proves to be a top-tier fact-checking system that competes directly with the latest LLMs.

Get insight into Originality.ai’s industry-leading accuracy across tools:

Further Reading on Fact Checking: