Artificial intelligence (AI) has rapidly become integral to educational settings, particularly writing assignments. However, distinguishing human-written from AI-generated content remains challenging. A recent study conducted by Arizona State University, “Using aggregated AI detector outcomes to eliminate false-positives in STEM-student writing,” evaluated four AI detection tools on their ability to tell AI-generated essays from human-written ones in a STEM educational environment.

Originality.ai demonstrated outstanding performance.

This research involved undergraduate students enrolled in an anatomy and physiology course. The objective was to evaluate the performance of multiple AI detection tools, focusing on their accuracy in distinguishing human-written essays from AI-generated ones.

In total, 174 students each submitted a human-written and an AI-generated essay, for a full dataset of 348 essays (174 human-written + 174 AI-generated). The AI detectors, however, were tested on a subset of 99 essays: 50 human-written and 49 AI-generated, the latter count reflecting one corrupted AI-generated file. The essays responded to a prompt about plasma membrane anatomy and physiology, and each was approximately 150 words long.

Essays assessed by AI detectors were evaluated using the following metrics:

Percentages such as the false-positive (FP) and true-positive (TP) rates were calculated from this 99-essay evaluation set.

Formulas for evaluation calculations:

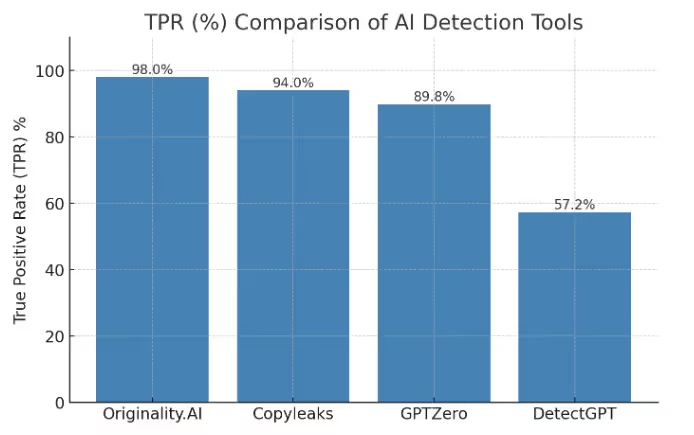

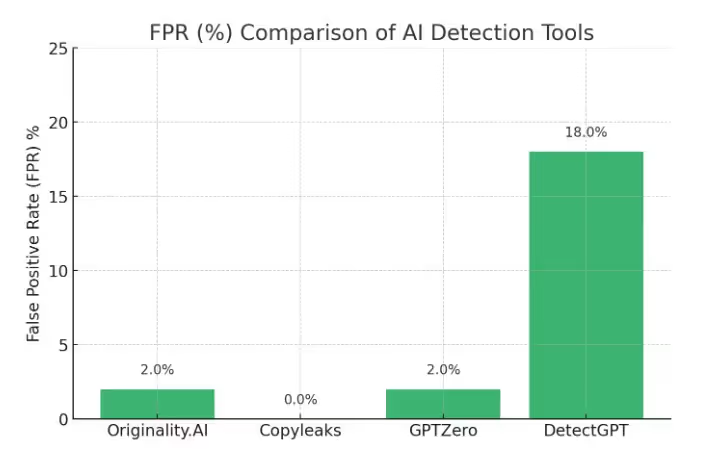

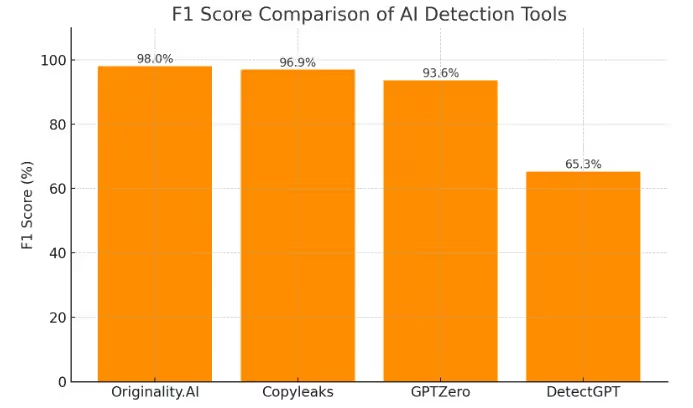

To provide insight into the study's accuracy metrics, we calculated the F1 score and true-positive rate (TPR) from the study's reported data and the number of samples it tested.
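As a rough illustration of how such metrics follow from a confusion matrix, the sketch below computes TPR, FPR, precision, F1, and accuracy using the standard textbook definitions. The counts are hypothetical, chosen only to be roughly consistent with an evaluation set of 50 human-written and 49 AI-generated essays and error rates near 2%; they are not figures taken from the study. The names `ConfusionCounts` and `detection_metrics` are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # AI-generated essays flagged as AI
    fp: int  # human-written essays flagged as AI
    tn: int  # human-written essays passed as human
    fn: int  # AI-generated essays passed as human


def _ratio(numerator: float, denominator: float) -> float:
    """Divide safely, returning 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def detection_metrics(c: ConfusionCounts) -> dict[str, float]:
    """Standard binary-classification metrics, with 'AI-generated' as the positive class."""
    tpr = _ratio(c.tp, c.tp + c.fn)        # true-positive rate (recall)
    fpr = _ratio(c.fp, c.fp + c.tn)        # false-positive rate
    precision = _ratio(c.tp, c.tp + c.fp)  # share of AI flags that were correct
    f1 = _ratio(2 * precision * tpr, precision + tpr)
    accuracy = _ratio(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn)
    return {
        "tpr": tpr,
        "fpr": fpr,
        "precision": precision,
        "f1": f1,
        "accuracy": accuracy,
    }


# Hypothetical counts (an assumption, not the study's data):
# 49 AI-generated essays with 1 missed, 50 human-written essays with 1 falsely flagged.
example = ConfusionCounts(tp=48, fp=1, tn=49, fn=1)

for name, value in detection_metrics(example).items():
    print(f"{name}: {value:.4f}")
```

Treating "AI-generated" as the positive class means a false positive is a human-written essay wrongly flagged as AI, which is the error type educators usually care most about avoiding.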


Originality.ai offered the best balance of accuracy among the evaluated AI detectors. Its low error rates, with only 2% false positives and 2% false negatives, highlight its reliability in distinguishing between AI-generated and human-written content.

This consistent performance not only surpasses other AI tools like Copyleaks and GPTZero but also outperforms human evaluators, including faculty and teaching assistants.

Given the growing concern around AI-generated submissions, Originality.ai stands out as an essential tool for educators and institutions committed to maintaining academic integrity. Its ability to minimize both false flags and missed detections makes it an invaluable asset in today's educational landscape.

Read more about AI detection and AI detection accuracy:

According to the 2024 study, “Students are using large language models and AI detectors can often detect their use,” Originality.ai is highly effective at identifying AI-generated and AI-modified text.