The mid-2020s just might be the perfect time to take a first look at AI adoption stats by industry.

After all, the ChatGPT-led AI explosion just a few years ago kicked off a wave of AI integrations and innovations across just about every sector.

And now, in 2025, those integrations have finally had some time to settle into real workflows, though more in some industries than others.

This guide pulls together some of the latest numbers to give you a clean, comparative snapshot of AI adoption across various industries, divided into four categories: business and finance, marketing, startups, and education.

To get an even more in-depth look at how AI is impacting education, check out our AI in Education statistics guide.

Then, maintain transparency as AI adoption increases with Originality.ai’s patented tools, including AI detection, plagiarism checking, and fact checking.

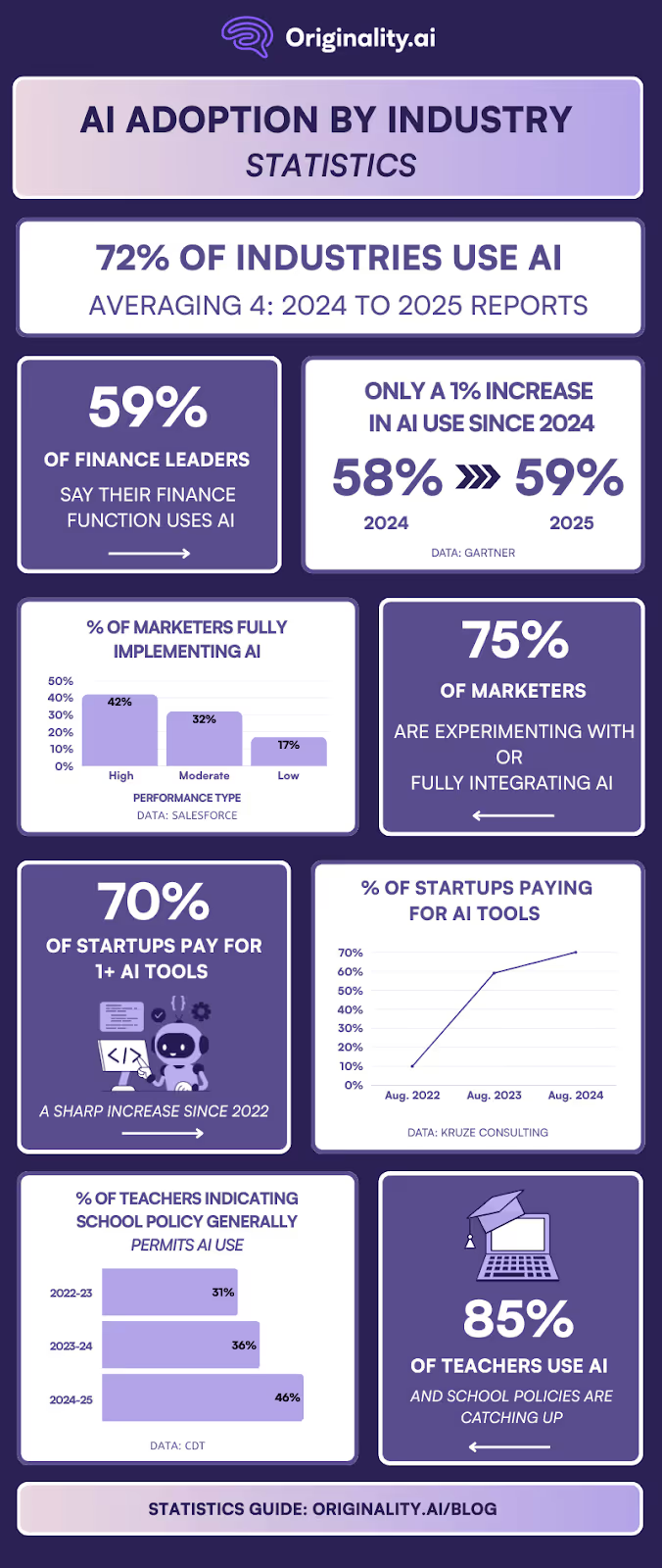

Across four very different industries, the numbers point in the same direction. Whether the context is financial operations, marketing campaigns, startup teams, or classrooms, AI is now a normal part of the everyday workflow for a large share of people.

When you average these numbers, you get about seven-in-ten adoption across four industries that normally have little to nothing in common. That kind of alignment makes it clear that AI isn’t confined to any one profession — it’s becoming a standard part of how people work.

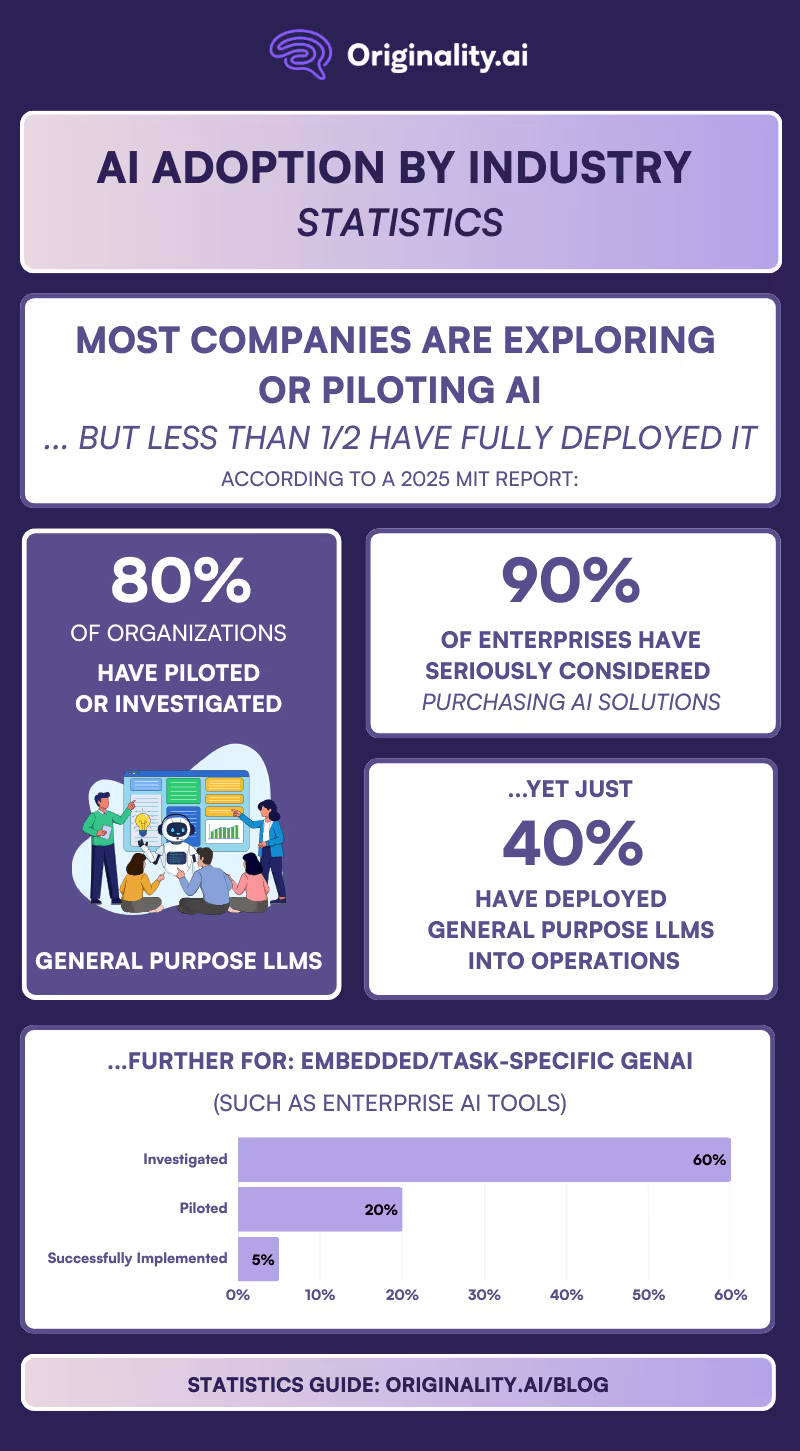

MIT’s 2025 State of AI in Business report shows that organizations are eager to adopt AI, but they’re still figuring out how to move from pilots to full, everyday use. The numbers paint a picture of high activity at the front end — exploring, piloting, evaluating — and a much smaller group making it all the way to deeper deployment.

The gap between testing AI and fully adopting it puts a lot of pressure on companies to figure out the “last mile” of implementation. Most leaders clearly want AI in their operations, but many still need clearer workflows, better policies, and more internal alignment to make adoption stick.

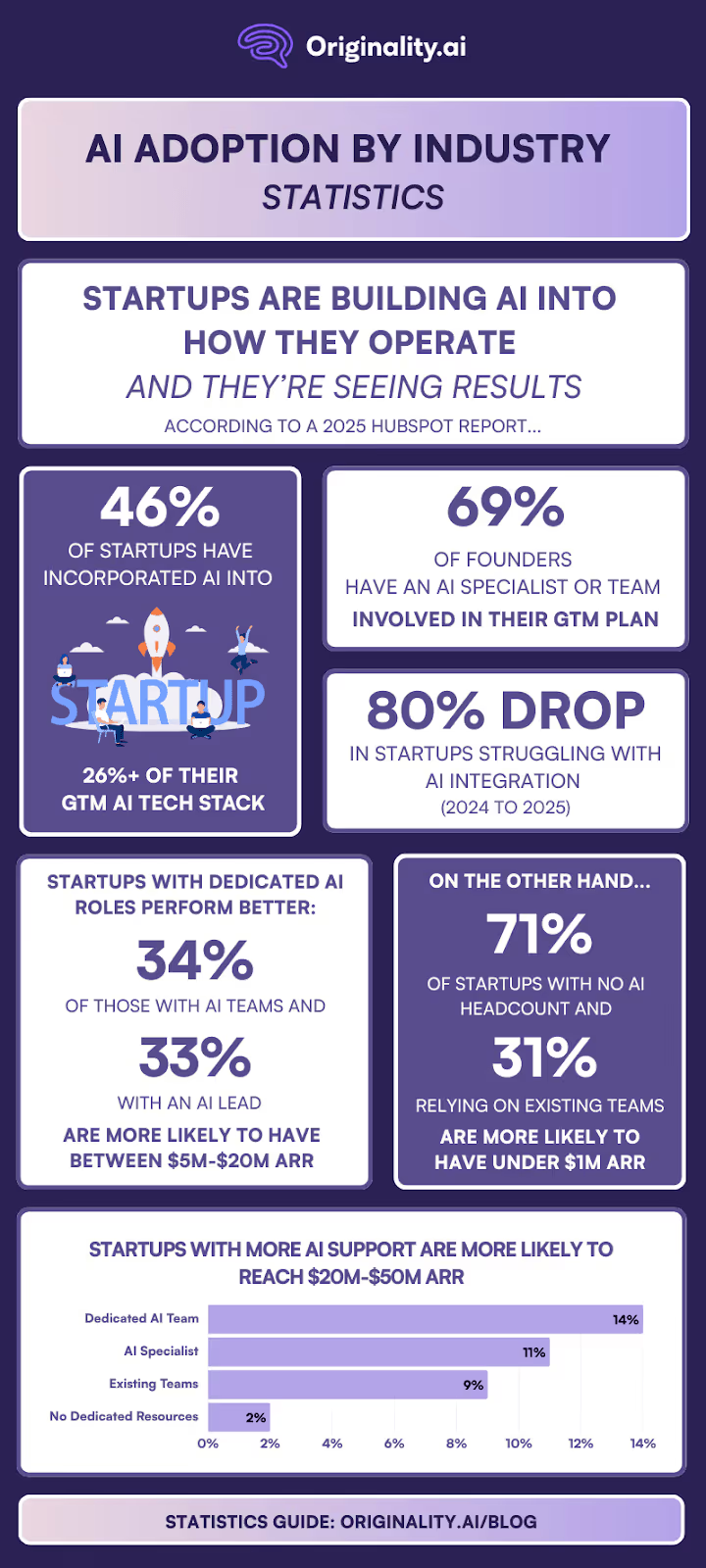

HubSpot’s 2025 AI in Startup GTM Strategy Report shows that AI is becoming part of the core structure of how early-stage companies build and launch. Teams are reorganizing their stacks, rewriting their workflows, and baking AI directly into their go-to-market plans, and in many cases, those shifts are already paying off.

Together, these numbers show that AI is shifting from being a tactical experiment to a fundamental part of how startups operate. It’s shaping their stacks, their workflows, their teams, and increasingly, their growth. The pattern is clear: AI-first isn’t a niche strategy anymore — it’s quickly becoming the standard way new companies build and scale.

Check out the full list of stats and sources in the Airtable below:

These stats show just how far AI has made its way into everyday operations, especially inside bigger companies and finance teams. Taken together, they paint a picture of a market that’s moved well past the hype and is now treating AI like basic infrastructure rather than a shiny new experiment.

Source: Gartner press release, November 2025

Why it matters: Finance teams typically handle tasks like forecasting, scenario modeling, and risk analysis. AI being used this widely suggests companies are leaning on it to improve the speed and accuracy of decisions that affect the entire business.

Source: McKinsey survey, November 2025

Why it matters: When most companies are testing AI agents that can carry out multi-step tasks, they’re actively looking for ways to automate. That changes how teams are structured and how quickly projects are expected to move from idea to done.

Source: Eurostat report, January 2025

Why it matters: A jump from 8% to 13.5% might look small at first, but at an EU scale, it represents a ton of companies moving AI into real use. It also shows that adoption isn’t just a US or Big Tech trend — European businesses are adding AI to their standard toolset too.
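To make the size of that jump concrete, here is a minimal sketch that separates the percentage-point change from the relative growth, using only the 8% and 13.5% figures quoted above. The function name `adoption_change` is a hypothetical helper for illustration, not something taken from the Eurostat report.

```python
def adoption_change(before_pct: float, after_pct: float) -> tuple[float, float]:
    """Return (percentage-point change, relative change in percent)."""
    # Percentage points: the plain difference between the two rates.
    point_change = after_pct - before_pct
    # Relative growth: the difference measured against the starting rate.
    relative_change = point_change / before_pct * 100
    return point_change, relative_change


# Figures quoted in the text above: 8% rising to 13.5%.
points, relative = adoption_change(8.0, 13.5)
print(f"{points:.1f} percentage points, {relative:.2f}% relative growth")
```

Read this way, a 5.5-point rise works out to roughly two-thirds more companies using AI than before, which is why the change is bigger than it first looks.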

Source: BCG report, October 2024

Why it matters: If AI doesn’t move past pilots, it usually won’t have much of an effect on how work actually gets done. Companies that make more progress often end up with clearer processes and fewer blockers around using AI, which can help them improve speed and consistency over time.

Source: Statistics Netherlands report, June 2025

Why it matters: Year-over-year growth this large suggests AI is spreading quickly across Dutch companies. It’s a reminder that adoption doesn’t always crawl; sometimes it runs.

Source: Federal Reserve Board analysis, February 2025

Why it matters: A range this big (20-40% for AI uptake) could mean a few things. It could be that some roles have an obvious use for AI while others don’t, that workplaces are simply moving at different speeds, or something else entirely. Either way, it’s worth watching if and how these numbers change over time.

Source: PwC survey, October 2024

Why it matters: Once AI shows up in “core strategy,” it can start influencing budget decisions, hiring, tooling, and what leadership expects teams to deliver. For people working in marketing, ops, finance, or product, that could translate into more pressure to find clear AI use cases that actually move numbers.

Source: KPMG report, December 2024

Why it matters: Finance is usually one of the most conservative functions, so if AI is deeply embedded there, it could be a sign that the rest of the company is not far behind. And once leaders get used to faster, AI-assisted insights from finance, they may expect the same speed and clarity from marketing, operations, and every other team.

Source: Eurostat report, January 2025

Why it matters: European AI adoption varies by region and country; if you’re running campaigns, selling software, or rolling out AI-related policies across multiple countries, you’ll need different expectations and different playbooks by market.

Source: BCG report, October 2024

Why it matters: The early “AI leaders” aren’t surprising, as they’re the sectors that move fast by design. Fintech and software companies build and ship constantly, which can make them quicker to turn AI into real features and workflows. For slower-moving industries, that sets expectations that your own customers and executives may compare you against.

Source: NVIDIA, 2024

Why it matters: With about 9 in 10 financial services companies either planning to use AI or already using it, financial services brands appear to be finding real value in gen AI. When a sector as careful and risk-aware as finance shows this kind of acceleration, it could be a sign that AI has practical, repeatable use cases.

Source: Bank of England and Financial Conduct Authority survey, November 2024

Why it matters: The interesting part isn’t just that 75% of firms already use AI — it’s that only 10% say they’re still a few years out. That small gap suggests most of the UK financial sector is moving faster than people may assume.

Source: Statista research, November 2025

Why it matters: If only 16% are deploying agentic AI, it shows the tech is still early, even in a sector that already uses plenty of AI. The slower rollout hints at operational or trust hurdles, not necessarily a lack of interest, considering other stats and findings indicate a high level of AI adoption in finance.

Source: Statistics Canada survey, June 2025

Why it matters: Canada’s adoption rate basically doubled in a year. For Canadian teams, that kind of jump is usually a signal to pay attention — it means AI isn’t just a distant trend anymore.

Source: Deloitte report, January 2025

Why it matters: If the most scaled GenAI work is happening in IT, it tells you where companies are getting enough reliability and clarity to commit. For teams working with AI outputs — whether that’s content, analysis, or decision support — it’s a sign that the most mature use cases often start where accuracy and stability matter most.

Source: Stanford HAI report, April 2025

Why it matters: When adoption jumps this fast, organizations don’t always have the systems in place to keep track of where or how they used AI. That makes it easier for AI-specific errors to slip past reviewers, which is why fact checks are essential.

Read more about the AI book list scandal and the Deloitte AI mistake.

Source: McKinsey survey, November 2025

Why it matters: Saying a company uses AI somewhere doesn’t tell you much about how evenly or reliably it’s being used. When AI is popping up here and there across the business, it can leave teams dealing with very different levels of quality, oversight, and expectations depending on where they sit.

Source: Deloitte report, January 2025

Why it matters: Plans to increase AI spending indicate that many leaders now treat AI as a key budget priority.

Source: Bain & Company survey, May 2025

Why it matters: A talent gap this big tells you that adoption isn’t necessarily the hard part anymore — scaling these systems is. When companies can’t staff the work, even strong pilots struggle to turn into reliable, repeatable operations across the business.

Source: KPMG report, November 2024

Why it matters: When AI starts handling a meaningful share of finance work, its output doesn’t always stay in finance. Budgets, performance reviews, investment decisions, and planning all rely on those numbers, so any shifts in how the work gets done ripple into the rest of the organization.

Source: MIT report, July 2025

Why it matters: If this many teams are trying out GenAI, it often means that companies are actively looking for places where the tech might fit. Early experiments are usually the first sign that people are taking the tools seriously.

Source: MIT report, July 2025

Why it matters: Once teams run small experiments, the next step is checking out actual products. A number this high suggests companies aren’t just curious — they’re trying to figure out which tools might be worth committing to.

Source: MIT report, July 2025

Why it matters: Moving from evaluation to deployment is a bigger leap, so seeing four out of ten make it that far tells you GenAI is being integrated and launched throughout organizations to improve productivity.

Source: MIT report, July 2025

Why it matters: Here’s where most efforts stall. Plenty of tools get evaluated or piloted, but only a small group is successful enough to make it into everyday use. That drop-off is a clear sign of where companies hit the real friction.

There’s no doubt that marketing teams have moved quickly on AI. It shows up in personalization, content creation, data cleanup, and campaign planning. These stats show you just how “normal” it has become to have AI sitting inside the marketing stack.

Source: Nielsen survey, June 2025

Why it matters: Marketers don’t see AI personalization as a side feature anymore; they’re treating it as something that affects how campaigns actually get built. That shift changes what data matters, how teams test ideas, and what counts as a “good” result inside a marketing workflow.

Source: Wharton report, October 2024

Why it matters: AI-generated content is already part of many marketing workflows, which means teams can’t assume every draft follows the same standards as strictly human-written output. That reality puts more weight on accuracy checks and review steps, which is why it’s essential to have clear AI policies in place and to incorporate AI detection for transparency.

Source: American Marketing Association and Lightricks survey, December 2024

Why it matters: If marketers feel more productive using gen AI, that could explain why adoption is so high. People tend to keep the tools that help them move faster, and that kind of routine benefit makes generative AI harder for teams to set aside once it’s in the workflow.

Source: Deloitte, Duke, and AMA survey, 2025

Why it matters: It’s not just about generative AI tools. AI in general is now built into multiple parts of marketing work, which can change how much influence the tech has on the final output — even in projects where people might not think of the work as “AI-driven.”

Source: Salesforce report, May 2024

Why it matters: If most marketers are using or testing AI, it can affect what people around them expect from the work. Managers may assume certain tasks can move faster, and teams could start to prioritize hiring people with experience using AI tools.

Source: Content Marketing Institute and MarketingProfs report, October 2025

Why it matters: When nearly every B2B marketing team is working with AI tools, the real difference shifts from whether you use AI to how thoughtfully you use it. Quality of oversight, data, fact-checking, and judgment start to matter more than the tool list itself.

Source: Adobe report, 2025

Why it matters: If leadership connects AI to growth expectations, integrations of AI tools into marketing workflows will likely increase.

Source: Nielsen survey, June 2025

Why it matters: If nearly every company is pulling AI into their marketing work, the teams holding out are basically working with a different toolkit. That gap can make it harder to compare results, defend decisions, or keep up with how quickly everyone else is analyzing performance.

Source: Content Marketing Institute and MarketingProfs report, October 2025

Why it matters: Agent testing is still early, but it’s a sign of where workflows may be headed. Once teams start letting software handle multi-step tasks, they have to rethink review processes.

Source: HubSpot report, 2025

Why it matters: Research is often the slowest part of a campaign, and having AI handle even a piece of that early digging helps teams start projects with more context and less guesswork. It shifts some of the prep work off people’s plates without changing the core strategy. Learn more about AI for content research.

Source: Marketing AI Institute and SmarterX report, 2025

Why it matters: A lot of teams aren’t just dabbling anymore; they’re formally testing AI inside real workflows. This gives marketers clearer evidence about what actually works, making it easier to decide what to scale.

Source: Adobe report, 2025

Why it matters: Saying that GenAI adds strain hints that teams are learning on the fly. They’re trying to keep their work solid while getting used to tools that don’t always fit, and that kind of adjustment curve is a real part of adoption, not just a side note.

Source: Semrush report, 2024

Why it matters: A gap in practical know-how makes it harder for small teams to get value from AI tools. Instead of using them where they could help, people end up hesitating or working around them, which can slow down how smoothly AI fits into everyday marketing work.

Source: Deloitte, Duke, and AMA survey, 2025

Why it matters: For some marketing teams, this may be the point where generative AI stops feeling experimental and starts acting like something they use by default.

Source: Orbit survey, October 2025

Why it matters: If so many marketers are turning to AI for editing help, it may point to a shift in how teams handle revisions and polish. Some of the routine cleanup work moves faster, which can change how much time people spend getting drafts ready for publication.

Discover even more blogging statistics.

Source: Influencer Marketing Hub report, September 2025

Why it matters: Budget limits can mean a few things for AI adoption. It may reflect that teams aren’t sure which tools justify the cost yet, or that they’re hesitant to spend money on software they don’t feel fully trained to use. Either way, cost uncertainty slows down adoption even when interest is there.

Source: CoSchedule report, 2025

Why it matters: When almost every company is open to using AI, the few that aren’t may end up out of sync with the rest of the industry.

Source: Capgemini Research Institute report, 2025

Why it matters: The gap isn’t dramatic, but it may hint at how each group approaches day-to-day marketing work. B2C teams often deal with faster content cycles and higher volumes, so they might reach for GenAI a bit sooner than B2B.

Source: Canva report, March 2025

Why it matters: When teams know exactly what’s allowed, they can better integrate AI into their workflow. Clear guidelines remove the guesswork, which can make adoption smoother.

Source: Initiative for a Competitive Inner City and Intuit report, February 2025

Why it matters: Using AI for marketing tasks often comes from the need to move faster on everyday work. If a tool helps small teams streamline the routine stuff, it can make creating basic materials feel less like a bottleneck and more like something they can get off their plate quickly.

Founders aren’t treating AI as a side tool. For a lot of early-stage companies, it is built directly into the product and go-to-market plan. These stats show how deeply AI is being woven into startup operations and where smaller firms are facing challenges.

Source: Mercury report, August 2025

Why it matters: Early-stage founders often move fast, and adopting AI this heavily may make it easier for them to build momentum sooner. When those starting fresh are already leaning on the tools, it raises the baseline for what a “normal” startup stack looks like.

Source: Techstars survey, 2024

Why it matters: Founders using AI in both core parts of the business and in supporting roles can change the mix of skills they lean on day-to-day. Some work shifts toward systems that handle predictable tasks, and more of a founder’s attention ends up going to decisions that require judgment instead of repetition.

Source: Kruze Consulting report, November 2024

Why it matters: Paying for AI tools can push young companies to keep their systems organized earlier than they normally would. Once software becomes part of the monthly spend, teams often put more care into picking tools that work together, which can make their tech stack feel more intentional instead of patched together on the fly.

Source: HubSpot report, 2025

Why it matters: Seeing AI and ML (machine learning) companies pop up so often in high-growth lists may cause some founders to pay closer attention to what’s helping those teams gain traction. Even if the drivers aren’t obvious from the outside, the pattern is hard to ignore, and it can shape how early companies think about where they might find an edge.

Source: Mercury report, August 2025

Why it matters: AI adoption is high among founders across generations: Gen Z, millennials, Gen X, and Boomers alike.

Source: General Catalyst survey, 2024

Why it matters: Getting something into production often means a team has worked through the early guesswork and made the tool stable enough to rely on. For young companies, that shift can change how they plan projects, because they’re no longer just trying AI out — they’re counting on it to support real users or internal systems.

Source: CNBC, March 2025

Why it matters: If AI is doing almost all the coding, the early headcount math for building a product and getting it out the door changes.

Source: Meta and A&M India, June 2025

Why it matters: Strong AI adoption among India’s startups highlights how global the trend is. Founders in major markets are folding these tools into real operations.

Source: HubSpot report, 2025

Why it matters: Building this much AI into the tools that support go-to-market work hints at how deeply these teams expect it to factor into execution. For newer companies, it suggests AI isn’t just supporting the product — it’s becoming part of the commercial engine.

Source: HubSpot report, 2025

Why it matters: Treating AI as its own function pushes companies to build clear ownership around the work. That kind of structure can make it easier for teams to test ideas without pulling attention away from core roles.

Source: HubSpot report, 2025

Why it matters: As integration gets easier, teams can spend more time actually putting the tools to use, making it easier for early companies to expand AI into parts of the business they initially avoided.

Source: HubSpot report, 2025

Why it matters: Teams that treat AI as its own responsibility often build more consistency into how they use it. That steadiness can make it easier to support the parts of the business tied directly to revenue.

Source: SALT and Stanford Digital Economy Lab, 2025

Why it matters: Startup AI adoption isn’t just about using the tech early; it’s about where it’s applied. When so much effort goes toward tasks employees barely want automated, it shows a gap between where AI is being adopted and where employees would like to see it integrated.

AI is moving into classrooms, districts, and campuses faster than most institutions can write policies for it. These stats show how quickly teachers, schools, and universities are adopting AI in their day-to-day work.

Source: RAND survey, April 2025

Why it matters: Putting this much training in front of teachers suggests districts may be expecting AI tools to become part of regular classroom work. It doesn’t guarantee that every teacher is using them, but it does seem like staff are being prepared for tools they’re likely to run into more often.

Source: UNESCO survey, September 2025

Why it matters: Growing interest in AI across university roles can nudge institutions to think more seriously about how these tools fit into academic work. Even if priorities vary, having more faculty and staff exploring the tech often pushes departments to sort out clearer guidance and support.

Source: DATIA K12 and Magma Math survey, 2024

Why it matters: Early adopters are usually the ones pushing new ideas forward, and districts in that group may be more open to trying tools that actually save time for teachers and staff. This kind of early interest can set the stage for better support and clearer guidance as AI becomes part of everyday work.

Source: AACSB survey, February 2025

Why it matters: Regular GenAI use in the classroom could push business schools to start building clearer expectations for how these tools fit into core subjects. Students may actually benefit from instructors folding AI into assignments and discussions, since it gives them early exposure to tools they’ll likely run into in internships and entry-level roles.

Source: Gallup and Walton Family Foundation report, 2025

Why it matters: There’s a gap between AI usage and the presence of clear school AI policies. Whether or not schools establish a policy, AI is already being used in the teaching workflow.

Source: Cengage Group report, October 2024

Why it matters: The difference isn’t huge, but K–12’s slightly higher use isn’t surprising given their workload. Teachers here deal with prep, grading, and student questions almost daily, so they may feel the need for AI tools sooner compared to higher ed classrooms.

Source: CDT report, October 2025

Why it matters: Adoption that’s this high suggests that teachers are finding concrete places where AI helps with tasks. And when most of the staff is already using the tools, districts may get a clearer picture of where AI actually makes a difference, which can guide future support and resource decisions.

Source: CDT report, October 2025

Why it matters: With just under half of teachers using AI grading tools, it’s clear that AI is increasingly used to help evaluate assignments, not just to support curriculum development (69% of teachers report using AI for that).

Source: Inside Higher Ed and Hanover Research survey, October 2024

Why it matters: Starting with narrow tools can be a low-friction way for campuses to test what AI actually helps with. When tech leaders point to these small, contained uses, it hints at where institutions feel comfortable experimenting before moving on to anything more complex.

Source: Microsoft report, 2025

Why it matters: AI literacy is becoming increasingly important for students as they prepare for the AI-enabled workplace, and global educators are taking notice, with over half indicating that they believe AI literacy is a must.

Source: RAND report, April 2024

Why it matters: With only a fraction of teachers experimenting with AI this early on, most classrooms were still running on traditional workflows.

Source: AAUP report, July 2025

Why it matters: Edtech-using faculty may be working with AI far more than they realize. When tools have built-in analytics or automation running in the background, self-reported adoption numbers can understate how much AI is already woven into day-to-day academic work.

Source: Study.com survey, 2024

Why it matters: When this many people pause until someone spells out the rules, it shows how easily uncertainty can slow adoption. It’s not resistance to the tech itself — it’s teachers waiting for guardrails, so they’re not guessing their way through decisions that could affect students or their own workload.

Even with clear differences across industries, this list of AI adoption statistics makes one thing clear: it’s happening everywhere.

AI adoption may be uneven and heavily influenced by each sector’s priorities, but its impact is unmistakable. It’s becoming a normal part of marketing teams’ workflows, startups are working it into both their products and marketing plans, educators are using it to help them out in the classroom, and finance teams are treating it like a standard part of their workflow.

Together, these statistics show that AI isn’t much of an experiment anymore in the mid-2020s — it’s part of how work gets done.


Further Reading: