Deloitte is set to partially refund the Australian government for a report that cost $440,000 (Australian Dollars).

This news was covered by major media outlets, including ABC News, Business Insider, and The Register, and comes in response to the discovery of apparent AI hallucinations in a report prepared by Deloitte in July 2025 for the Department of Employment and Workplace Relations.

It’s not the first time that AI hallucinations have made the headlines; earlier this year, several US newspapers published a summer book list containing AI hallucinations (the AI book list scandal).

AI hallucinations are a real problem that impacts authenticity, reputation, and brand image… but could this have been prevented in the first place?

Let’s take a closer look.

With AI slop making its way into reports published by governments, tools like Originality.ai are a must for identifying AI text that requires additional review and fact-checking for accuracy.

According to Business Insider, Deloitte’s report was a lengthy project taking seven months to complete.

Following its investigation into the errors, Deloitte not only updated the report to correct the “errors” but also added a section to the report’s methodology noting that AI (specifically Azure OpenAI GPT-4o) was used in its preparation.

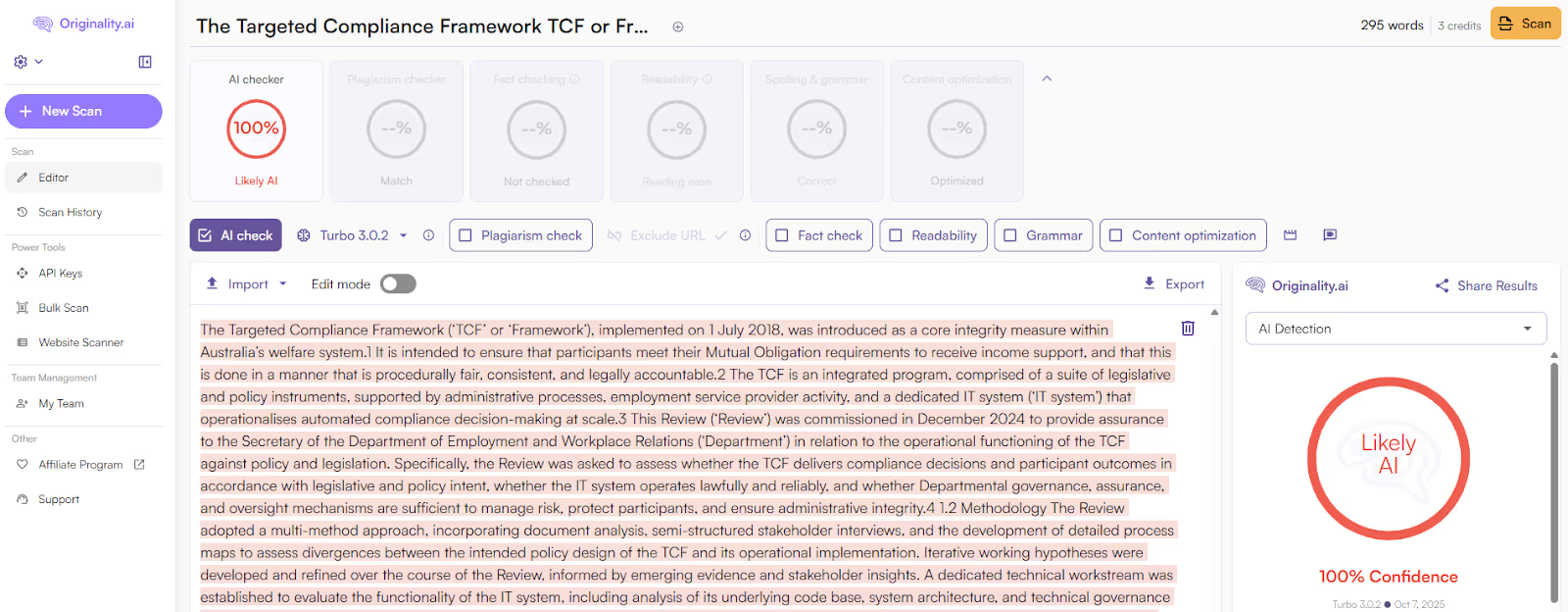

Our industry-leading Originality.ai AI Detector is an AI detection tool that accurately identifies AI-generated content from leading LLMs (learn more in our AI Accuracy Study).

Scanning the report with the Originality.ai AI Checker could have helped reviewers quickly identify sections of AI-generated text that required additional quality checks to ensure accuracy and authenticity were maintained.

For example, an excerpt from the updated report’s Executive Summary (page 6) is flagged as Likely AI with 100% Confidence by the Originality.ai AI Detector.

The initial report, which contained the AI hallucinations/errors, has since been updated, so unfortunately, we can’t run the original version with inaccuracies through our fact checker.

However, if you’d like to see our fact checker in action, read our article on the AI Book List Scandal, where the fact-checking software clearly flags numerous AI hallucinations. You can also read more about Originality.ai’s fact-checking accuracy in our Fact-Checking Study.

If fact-checking software had been used, it could have supported:

AI slop is polluting the internet with low-quality content that can contain AI hallucinations and rapidly spread misinformation.

In this case, that meant a report that a government was supposed to be able to rely on contained inaccuracies that could have caused serious problems.

As Dr Christopher Rudge, who spotted the errors, stated in ABC’s coverage, “That’s about misstating the law to the Australian government in a report that they rely on. So I thought it was important to stand up for diligence.”

A quick check could have helped both Deloitte and the Australian government identify AI-generated content and catch the errors.

Skipping that step came with a very real and high price tag.

Those errors are now going to cost Deloitte a partial refund of the report’s $440,000 fee.

In 2025, content quality checks aren’t optional. They are essential.

If you are a journalist, reporter, reviewer, or editor and want to avoid scenarios where credibility and integrity are jeopardized, start using Originality.ai today.

Interested in reading more about AI? Check out our AI Studies.