It may not have reached every corner of your life just yet, but for the most part, AI is everywhere. As you’ll see in our list of AI statistics below, consumers are using it (though they’re not always thrilled about it), businesses are adopting it (it’s not just marketers), and even governments are stepping in to help regulate it (it’s about time!).

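Here, we’ve pulled together a list of some of the top AI statistics to know in 2025 and arranged them into five categories: consumer use and trust, business adoption, AI in the workplace, accuracy and misinformation, and investment and regulation.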

Let’s get into it.

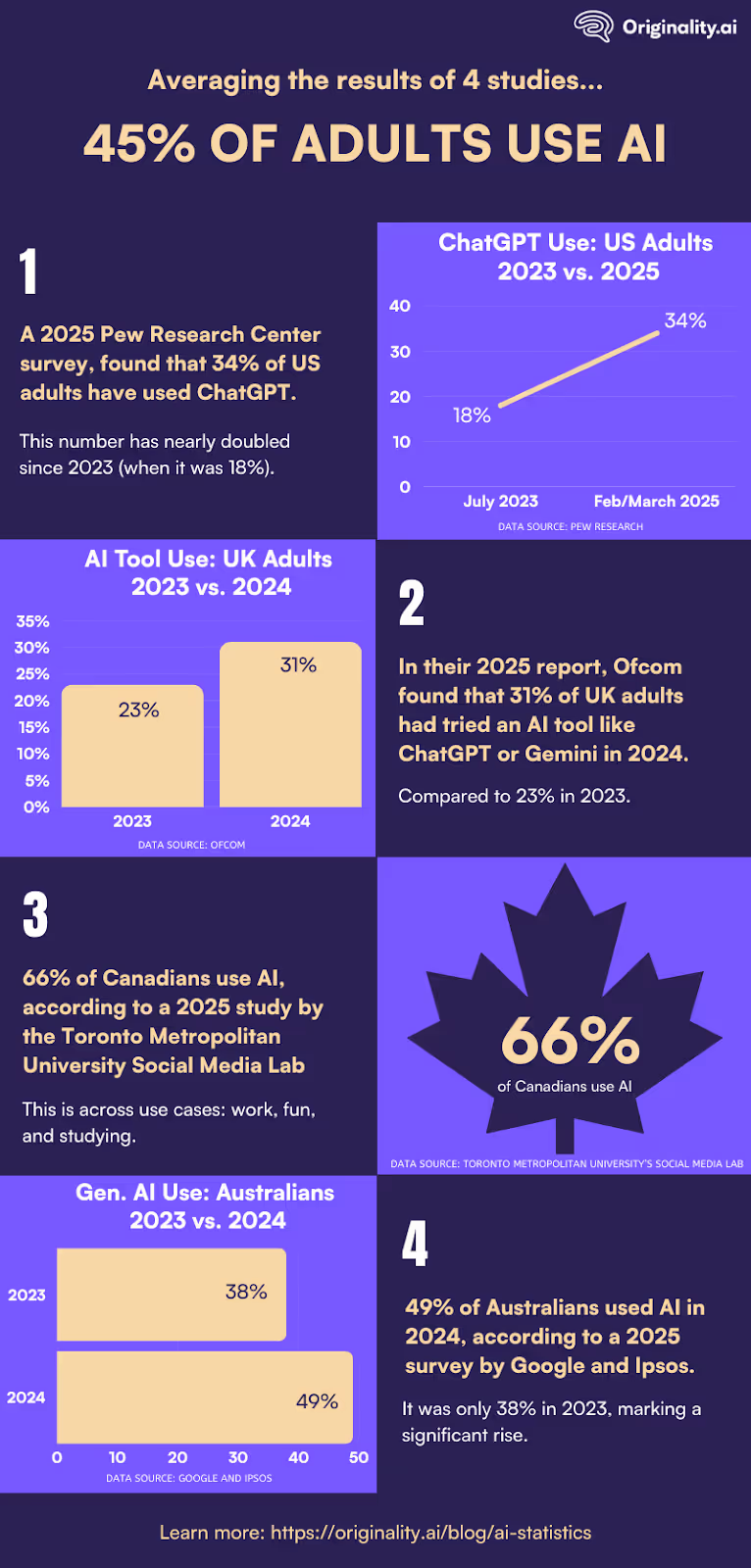

Across four independent studies, one each from the U.S., U.K., Canada, and Australia, an average of 1 in 2 adults has now used an AI tool or chatbot. American companies may dominate the generative AI industry, but their tools are popular in other countries, too.

So, if you’re hoping to rank in AI search, tailor your content accordingly.

Here’s a breakdown of the stats:

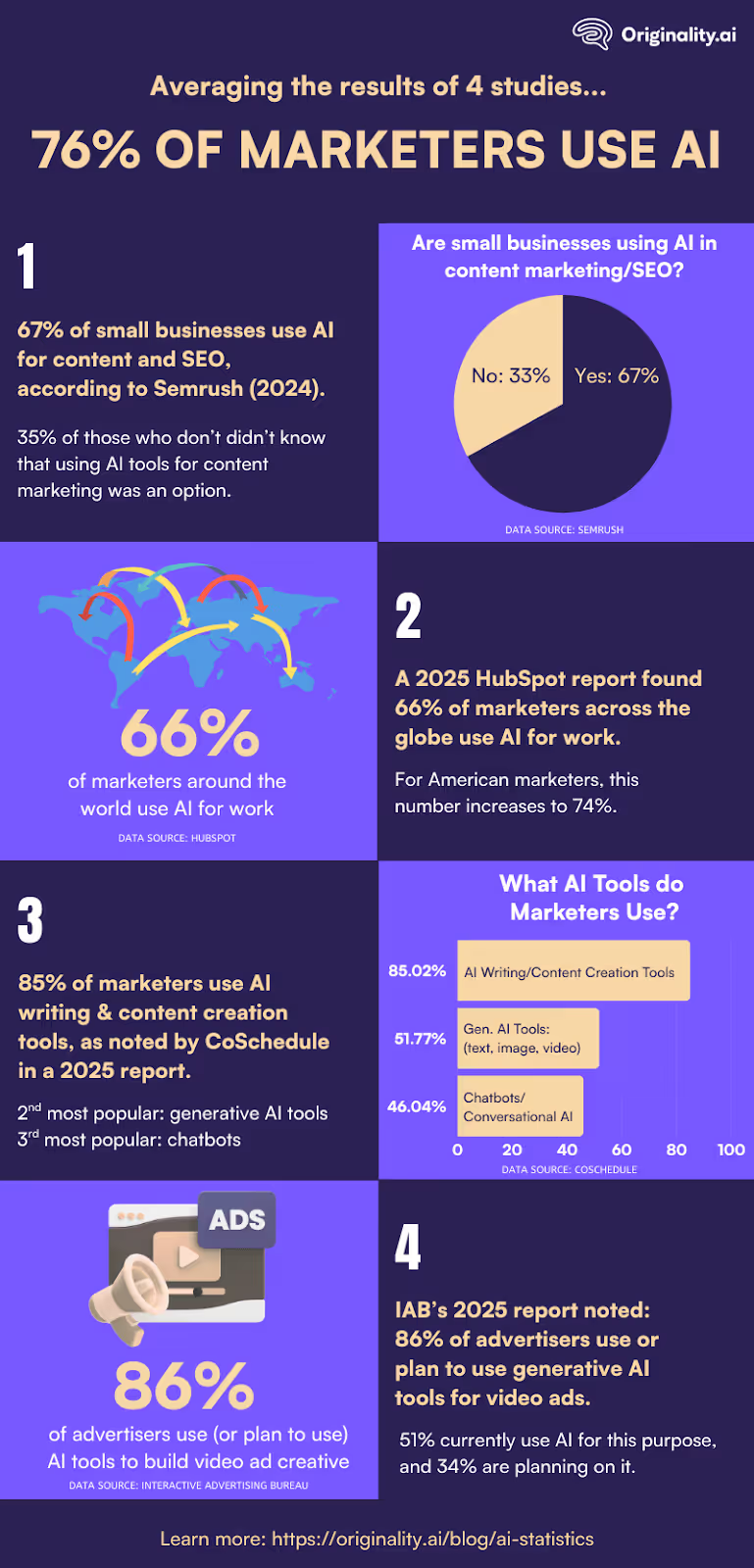

Generative AI is taking over the marketing world. Roughly 3 out of 4 marketers are currently using or plan to use AI for content creation tasks, when you average the results of four independent studies.

The takeaway here is clear: if you have yet to jump on the AI bandwagon, you’re already behind.

Here’s a breakdown of the stats:

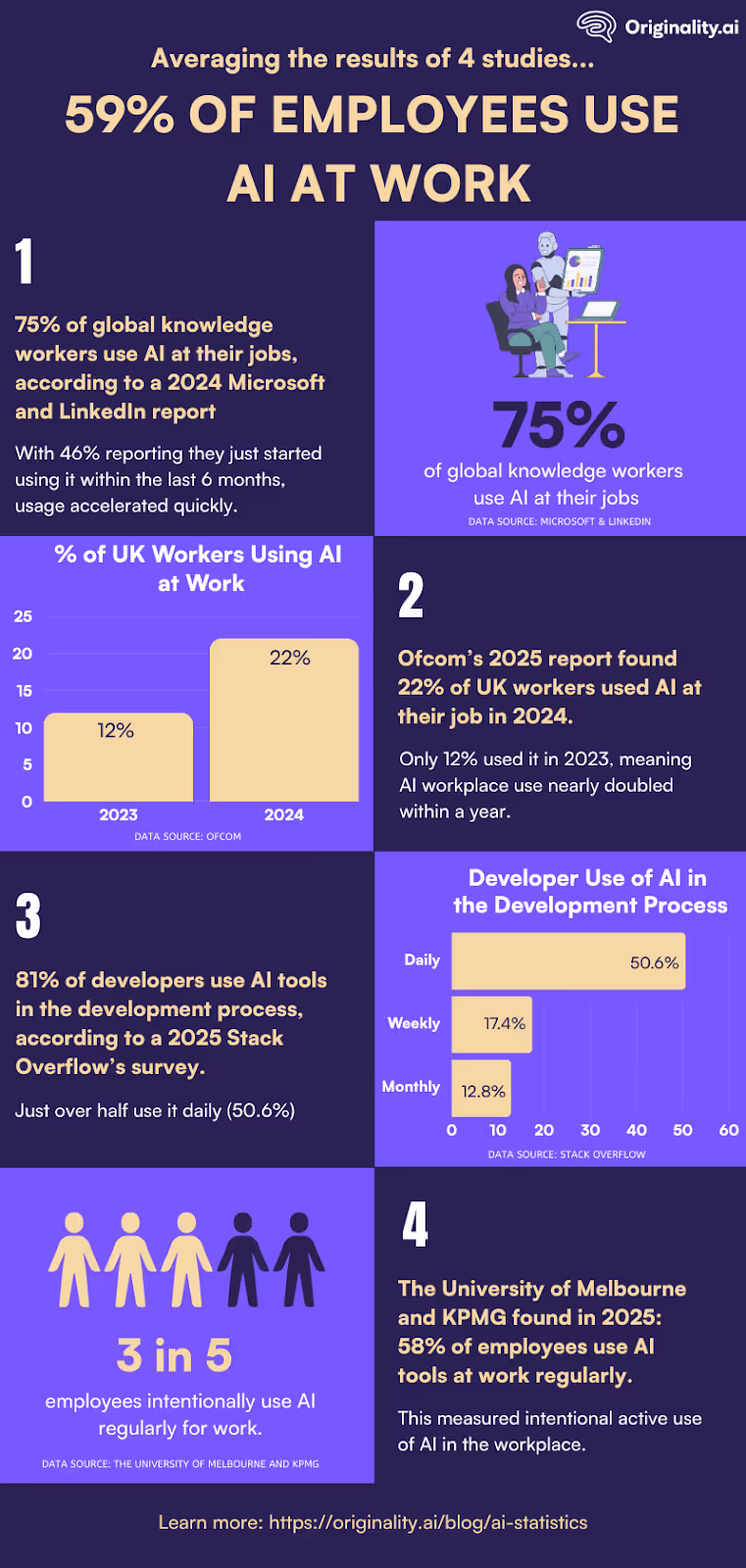

It’s not just marketers who are using it. Almost 6 in 10 workers now use AI at their jobs, a figure reflecting the average results of four independent studies.

Of course, it depends on the job (the individual figures range from 22% to 81%), but AI is having some impact across industries. You can’t treat it as an isolated, niche marketing tool anymore; it’s changing the way a lot of industries do business.

Here’s a breakdown of the stats:

Now, onto the rest of the stats!

Since we have quite a long list to work with here, we’ve provided an overview of the following AI statistics and their sources in the Airtable below:

AI is quickly becoming part of people’s everyday routines, but that doesn’t mean they trust it completely. For marketers and publishers, clear sourcing, labeling, and human oversight have become key to making content stand out as credible.

These stats show where people are embracing AI, where doubts remain, and why clear disclosure and sourcing help content feel reliable.

Source: Pew Research Center article, June 2025

Why it matters: When nearly 1 in 3 US adults say they’ve used ChatGPT, that’s mainstream familiarity. Readers are likely getting better at spotting AI-generated text and images, so clear sourcing and simple disclosures help your content feel trustworthy instead of gimmicky.

Source: Reuters Institute report, 2025

Why it matters: Habits are changing fastest for younger audiences. Plan for visibility inside AI answer experiences, not only on web pages.

Source: Pew Research Center report, April 2025

Why it matters: If readers are uneasy about AI, they may be more likely to bounce when content looks automated. Showing who wrote it, where the data comes from, and how it was checked gives them a reason to stay.

Source: Ipsos AI Monitor report, 2025

Why it matters: That mixture of nervousness and excitement means audiences are curious but cautious. For publishers and marketers, that is an opening to frame AI content as helpful rather than risky. Show the upside clearly, maintain transparency, and you guide readers toward engagement instead of hesitation.

Source: YouGov report, July 2025

Why it matters: Even frequent AI users aren’t all convinced it’s a net positive. If almost a third of regular users still feel that way, familiarity clearly doesn’t erase skepticism. Content that explains how AI fits into your process helps ease that tension.

Source: Axios article, July 2025

Why it matters: Billions of daily prompts make AI use impossible to ignore. At that scale, even small shifts in behavior or policy can ripple out fast, affecting costs, search traffic, and how people encounter information online.

Source: Ofcom report, May 2025

Why it matters: Adoption isn’t only about companies using AI — it’s about whether audiences accept it. If half of UK adults still put more trust in human writing, firms that roll out AI content without transparency risk losing the very people they’re trying to reach.

Source: KPMG and University of Melbourne study, April 2025

Why it matters: Using AI doesn’t always mean trusting it. If half of regular users still have doubts, your content needs clear sourcing and credibility signals to earn confidence.

Source: KPMG and University of Melbourne study, April 2025

Why it matters: Trust can vary by region. If you’re speaking to audiences in emerging markets, leaning into AI might boost confidence. In advanced economies, though, you’ll need extra transparency to overcome skepticism, so adjust your approach based on local sentiment.

Source: Pew Research Center article, April 2025

Why it matters: If half the country already thinks AI will hurt journalism, that’s a signal not to treat it as neutral. Publishers need to show the guardrails they’re using so readers know AI isn’t going to erode their trust in the news.

Source: Rutgers University NAIOM report, February 2025

Why it matters: Trust matters more than ever. That gap shows audiences are watching for signals of credibility, so labeling AI’s role and backing claims with sources is critical.

Source: YouGov survey, March 2025

Why it matters: If most people doubt AI’s fairness and accuracy, you can’t rely on it alone. Adding human review is what keeps content balanced and believable.

Source: KPMG and University of Melbourne study, April 2025

Why it matters: Attitudes toward AI can vary widely across countries. For instance, 83% of respondents in Egypt felt that AI was trustworthy, while in Finland, only about a third felt AI was secure. If you’re publishing globally, it’s a reminder that audiences won’t all approach AI with the same level of confidence.

Source: Pew Research Center report, April 2025

Why it matters: Attitudes toward AI aren’t uniform, even within a single country. That’s why it’s important to test how different groups respond to AI in your messaging before assuming everyone sees it the same way.

Source: Edelman report, 2025

Why it matters: Trust in AI can look completely different depending on where you are. A message that works in China might not work in the US, so global strategies need to account for local attitudes.

Source: University of Kansas study, December 2024

Why it matters: Just knowing AI was used can make people doubt the content, even if they don’t understand the details. That makes clear disclosure essential if you want to hold onto trust.

We’re now at the point where more companies are moving past pilot projects and building AI directly into how they work. As adoption spreads (and while trust remains shaky), clients and regulators will likely expect clearer proof of where AI fits into the process, so businesses that document and communicate it well may have the advantage.

These stats highlight how fast adoption is spreading, what expectations it sets for speed and quality, and why credibility in how AI is used matters as much as adoption itself.

Source: Eurostat article, January 2024

Why it matters: AI use is already playing a major role in how big companies operate, which means they’re shaping the expectations around speed, cost, and output quality. Smaller players who ignore that shift risk looking slower and less competitive by default.

Source: Statistics Canada analysis, June 2025

Why it matters: If AI use among Canadian businesses keeps doubling, the baseline for products and services shifts. Readers, clients, and customers will expect AI-level speed and efficiency, so failing to show it in your process makes you look outdated.

Source: McKinsey & Company report, May 2024

Why it matters: When most companies already have AI in the mix, the real question shifts from “are you using it” to “how well are you using it.” Falling behind on quality or creativity is what will stand out.

Source: RSM report, June 2025

Why it matters: When nearly every mid-market firm reports using generative AI, it shows adoption isn’t limited to deep-pocketed enterprises. The tools are practical and affordable enough for the middle tier, which means the excuses for holding off are running out.

Source: OECD report, June 2025

Why it matters: The Organisation for Economic Co-operation and Development (OECD) covers 38 countries, so its numbers set a trusted benchmark. With adoption still fairly low overall, the takeaway isn’t just who’s ahead but how much room there is for growth, giving publishers and businesses a way to gauge whether they’re moving faster, keeping pace, or falling behind the broader market.

Source: National Bureau of Economic Research paper, April 2024

Why it matters: Based on data from the US Census Bureau’s Business Trends and Outlook Survey, this number shows AI adoption is growing among businesses but still runs lower than most private surveys suggest. Use it as the baseline when you’re comparing adoption across industries or company sizes.

Source: Stanford HAI report, 2025

Why it matters: An almost 25-percentage-point jump in a single year shows how quickly AI has gone mainstream. For anyone still waiting on the sidelines, the comparison point isn’t last year’s early adopters anymore, but the majority of the market.

Source: IAB report, July 2025

Why it matters: AI creative cuts costs and levels the playing field for smaller brands. They don’t have the same budgets as the top advertisers, so adopting GenAI early is a way to stretch dollars and keep up — the big guys better watch out.

Source: Arctic Wolf report, 2025

Why it matters: AI is now a security expectation. If your systems aren’t AI-enabled, vendors and clients will notice.

Source: Federal Reserve Bank of New York via Liberty Street Economics article, September 2025

Why it matters: AI adoption is spreading across sectors, showing that the shift isn’t limited to digital-first industries.

Source: Capgemini report, July 2025

Why it matters: Despite the hype, scaled AI agents are rare. You could look at that gap as both a warning (it’s not ready) and an opportunity (get an edge on the competition).

Source: Microsoft report, August 2025

Why it matters: With nearly all Indian business leaders planning AI agent rollouts, the market is shifting fast. If you’re competing globally, you need to match that pace or risk falling behind in efficiency and innovation.

Source: Marketing AI Institute and SmarterX report, 2025

Why it matters: When employees see training as the biggest blocker, but leadership doesn’t, adoption stalls. Closing that gap is the difference between AI that adds value and AI that creates brand risk.

Source: Omdia survey, June 2023

Why it matters: Most companies see at least modest returns from AI, but a smaller group is pulling far ahead. The difference could come from tracking what works and scaling it, instead of just using AI everywhere without a plan.

Source: arXiv.org paper, May 2024

Why it matters: It’s not just happening in content marketing. Manufacturing may be slower to adopt AI, but momentum is building, signaling that audiences everywhere may start expecting AI-driven efficiency across the board.

With adoption in full force, it’s no surprise that AI is reshaping day-to-day work, speeding up some tasks while raising real concerns about job security and fairness.

These numbers show where workers see value, where they feel uneasy, and why clear policies and transparency make a difference in how teams adapt.

Source: MIT study, July 2023

Why it matters: Faster drafts are only half the story. If ChatGPT helps writers hit deadlines more easily, the bigger advantage is what they can deliver on top. Maybe that’s extra research, clearer messaging, or simply work that feels more authoritative.

Source: Stack Overflow report, 2025

Why it matters: If half of professional developers are using AI daily, their final product won’t be purely human-made anymore. Documentation, review processes, and security checks all need to account for code written with AI assistance, or you risk licensing errors and inconsistent style sneaking into production.

Source: Pew Research Center article, February 2025

Why it matters: Concern on this scale shows AI is reshaping workplace culture as much as workflows. Businesses that acknowledge those worries directly (whether through AI training, clear communication, or simply reassurance) will have an easier time keeping employees happy and engaged as adoption grows.

Source: Anthropic study, February 2025

Why it matters: AI isn’t showing up evenly across roles. Some teams are already leaning on it heavily while others barely touch it, which means managers need to plan for different levels of training, oversight, and support.

Source: Quarterly Journal of Economics article, May 2025

Why it matters: These kinds of gains show that AI isn’t just a productivity booster for experts; it’s clearly closing the gap for new hires, too. That makes customer support an early proving ground for where AI can add measurable value without replacing people.

Source: GitHub Copilot study, February 2023

Why it matters: The biggest gains show up in work that has clear rules and easy checks. Applied to content, that points to jobs like formatting, tagging, or templated copy. You know, tasks where speed matters and accuracy is easy to verify.

Source: METR study, July 2025

Why it matters: Slower performance from experienced developers is a reminder that AI isn’t always an easy upgrade. In complex, long-running projects, rollout may need testing and the right tools before it pays off.

Source: Microsoft and LinkedIn report, 2024

Why it matters: If most workers bring their own AI tools, it shows the gap between what companies provide and what people actually need. For content teams, that gap can impact workflows unless leaders set clear policies and supply trusted tools.

Source: IMF analysis, January 2024

Why it matters: Lots of jobs are already exposed to AI, especially in advanced economies. You could take it as a sign that tasks are shifting. Planning for training now makes it easier to keep teams steady through the changes.

Source: World Economic Forum report, May 2023

Why it matters: When it comes to AI, nobody agrees on where jobs are headed, and that uncertainty makes it risky to wait. Start planning for reskilling now so you aren’t scrambling later.

Source: Microsoft and LinkedIn report, May 2024

Why it matters: Saving half an hour a day adds up fast, and the people using AI most effectively already know the shortcuts. Letting them document what works and share it with the team turns individual gains into company-wide progress.

Source: Microsoft Research and Carnegie Mellon University study, April 2025

Why it matters: Feeling sure about AI output isn’t the same as being right. Try to build in checks and reviews to keep teams from running with answers that only look convincing.

Source: Carnegie Learning report, 2025

Why it matters: Regular use by teachers and administrators shows AI is already part of daily school operations, making it key to set clear rules on disclosure, grading, and privacy before it becomes harder to rein in.

AI tools can be helpful, but as you probably know all too well, they still get things wrong and may even hallucinate. People notice when the information isn’t reliable, and that shapes how much they’re willing to trust it.

These stats show where guardrails work, where they don’t, and why human checks and clear labeling matter if you want your audience to believe what they’re reading.

Source: NewsGuard report, December 2024

Why it matters: If chatbots are wrong or incomplete this often, it’s hard to trust their output. The takeaway here is to treat everything as a draft and back it with sources before publishing.

Source: Journal of Medical Internet Research article, May 2024

Why it matters: In sensitive fields like health, made-up references aren’t just errors; they’re risks. Always remember to pair AI output with trusted sources and expert review before publishing.

Source: npj Digital Medicine article, May 2025

Why it matters: They may take a little extra time to implement, but guardrails make a difference. With the right prompts, retrieval, and checks, error rates drop to a level professionals can actually work with.

Source: arXiv.org paper, July 2023

Why it matters: If guardrails on major models can be broken this easily, it isn’t just about mistakes — it’s about vulnerability. Treat outputs as open to manipulation and put review steps in place before trusting them.

Source: NewsGuard report, May 2025

Why it matters: With so many AI-made sites running without oversight, bad information can look more real than ever. Double-check sources before you cite them and rely on signals that prove where the content came from.

Source: Turnitin press release, April 2024

Why it matters: With millions of papers flagged, schools can’t ignore AI use anymore. Using AI content detection tools is only the first step — clear rules and easy ways for students to show how they used AI have to follow.

Source: Deloitte study, December 2024

Why it matters: Even people who know AI well are struggling to trust what they read online. That makes transparency (like clear labels and verifiable sources) a must if you want to keep credibility.

Source: KPMG and University of Melbourne study, 2025

Why it matters: When almost half of employees think their company lacks clear AI rules, it creates a credibility issue. If your policies aren’t visible, audiences may assume oversight is missing, too.

Source: BBC study, February 2025

Why it matters: If half the answers come back with mistakes, you can’t treat AI output as finished work. It has to be checked and fixed before it ever reaches an audience.

Source: Deloitte study, 2024

Why it matters: The majority of consumers studied favored labeling AI-generated content, and 68% were concerned about the potential for AI-generated content to be used for scams. Clear labels aren’t just good practice; audiences are actively asking for them.

Money is flowing into AI at record levels, and policymakers are racing to set the rules that go with it. The combination of heavy investment and fast-moving regulation is changing how businesses grow, launch products, and stay compliant.

These stats show where funding is headed, how oversight is evolving, and why both will matter for anyone building or publishing with AI.

Source: Stanford AI Index report, 2025

Why it matters: Billions flowing into generative AI show investors still see momentum. That kind of money speeds up competition and raises the bar for results, not just demos. Interestingly, a different report noted that global AI funding surpassed $100 billion (see stat 56).

Source: CB Insights report, January 2025

Why it matters: With billions being poured into AI, new products will likely flood the market fast. For buyers, that means more choice but also more pressure to tell which tools actually deliver.

Source: EY Ireland press release, August 2025

Why it matters: Investment is accelerating, not slowing. Startups that survive now will likely face stronger competition, but also have more capital to scale faster.

Source: Pitchbook data via Axios article, July 2025

Why it matters: AI is dominating venture capital at this point. That concentration may just pull talent, standards, and infrastructure toward AI-first businesses.

Source: Carta report, July 2025

Why it matters: Investors are paying a premium for AI companies at an early stage. That kind of valuation lift means expectations are high, and startups will need to prove real value quickly to keep it.

Source: Stanford AI Index report, April 2025

Why it matters: That kind of money attracts the best talent and sets the pace for the tools everyone else ends up using. If you’re building with AI, expect US companies to influence the standards and APIs your team works with.

Source: OECD.AI Policy Navigator, September 2025

Why it matters: Governments aren’t sitting on the sidelines anymore. With hundreds of new rules in play, businesses need to stay alert to which ones could affect their content or operations.

Source: NIST article, February 2024

Why it matters: When this many major players join forces, their standards quickly become the baseline. Agencies and enterprises will soon expect vendors to prove they can keep up.

Source: NCSL report, September 2024

Why it matters: A single federal rulebook won’t cover you. Each state is adding its own twists, so tracking local requirements is turning into a must-do task.

Source: US FDA news release, January 2025

Why it matters: AI is already embedded in high-stakes industries like healthcare. If you’re in marketing or product, plan on clear safety messaging and transparency from the start.

Source: UK Government press release, May 2024

Why it matters: For the first time, oversight bodies are linking up across borders. That could make global compliance checks simpler, but also harder to avoid.

If this collection of AI statistics means one thing, it’s this: you can’t escape AI anymore. It’s becoming such a normal part of consumers’ and businesses’ routines that even the government is getting involved — and racing to catch up.

Policies aren’t the only thing lagging behind, though. Despite such widespread adoption, trust in AI remains an issue, which is especially relevant for marketers, publishers, and anyone else who creates content.

After all, trust often decides what gets read, shared, and believed, and the way to earn it these days is by showing your work. Be clear about how you use AI, back your claims with solid sources, and check over any AI-generated work carefully.

Because in a world filled with similar AI outputs, credibility is what can set you apart.

Maintain transparency in the age of AI with Originality.ai.

Further Reading: