In recent years, the proliferation of generative artificial intelligence (AI) tools has transformed the landscape of online content creation, including customer reviews.

This shift is particularly relevant in highly regulated and reputation-sensitive sectors such as banking, where consumer trust plays a central role in shaping public perception and market behavior.

As customers increasingly rely on digital reviews to make informed decisions, the integrity and authenticity of these reviews become critical.

This study aims to investigate the extent to which AI-generated content has impacted customer reviews of United States banks from 2018 to 2024.

This study contributes to the broader conversation on AI ethics, regulatory oversight, and the evolving nature of online reputation systems in the financial industry.

The study used a dataset of over 19,000 customer reviews from 47 distinct US banks. We then applied Originality.ai's proprietary AI detection to classify each review based on its likelihood of being AI-generated.

We focused on year-over-year trends to understand how the prevalence of AI-generated content has evolved over time.

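In a study of over 19,000 customer reviews from 47 US banks from 2018 to 2024, the share of reviews identified as AI-generated rose from 0.56% in 2018 to 5.1% in 2024, while 94.9% of 2024 reviews remained human-written.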

Interested in learning more about the impact of AI on bank reviews from other regions? Read our analysis of AI Canadian “Big 5” bank reviews and AI Canadian Online Bank Reviews.

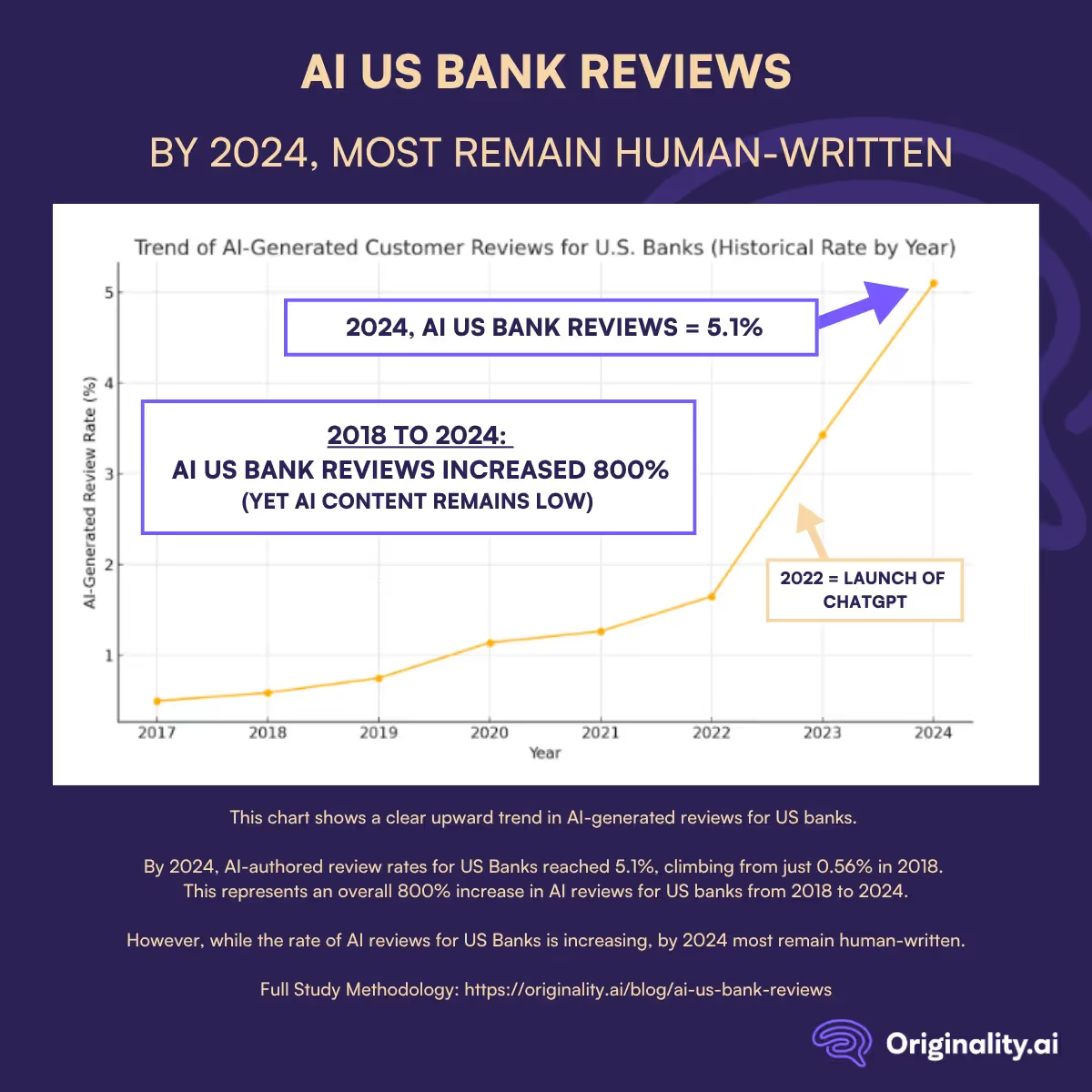

The analysis of AI-generated customer reviews for U.S. banks between 2018 and 2024 reveals a clear upward trend in synthetic content (as depicted in the graph above).

Starting from a low baseline in 2018, the rate of reviews identified as AI-generated has steadily increased year over year (while remaining mostly human-written). Let’s take a closer look:

In 2018, approximately 0.56% of reviews were identified as AI-generated. By 2024 that share had reached 5.1%, a relatively modest rise over the period studied:

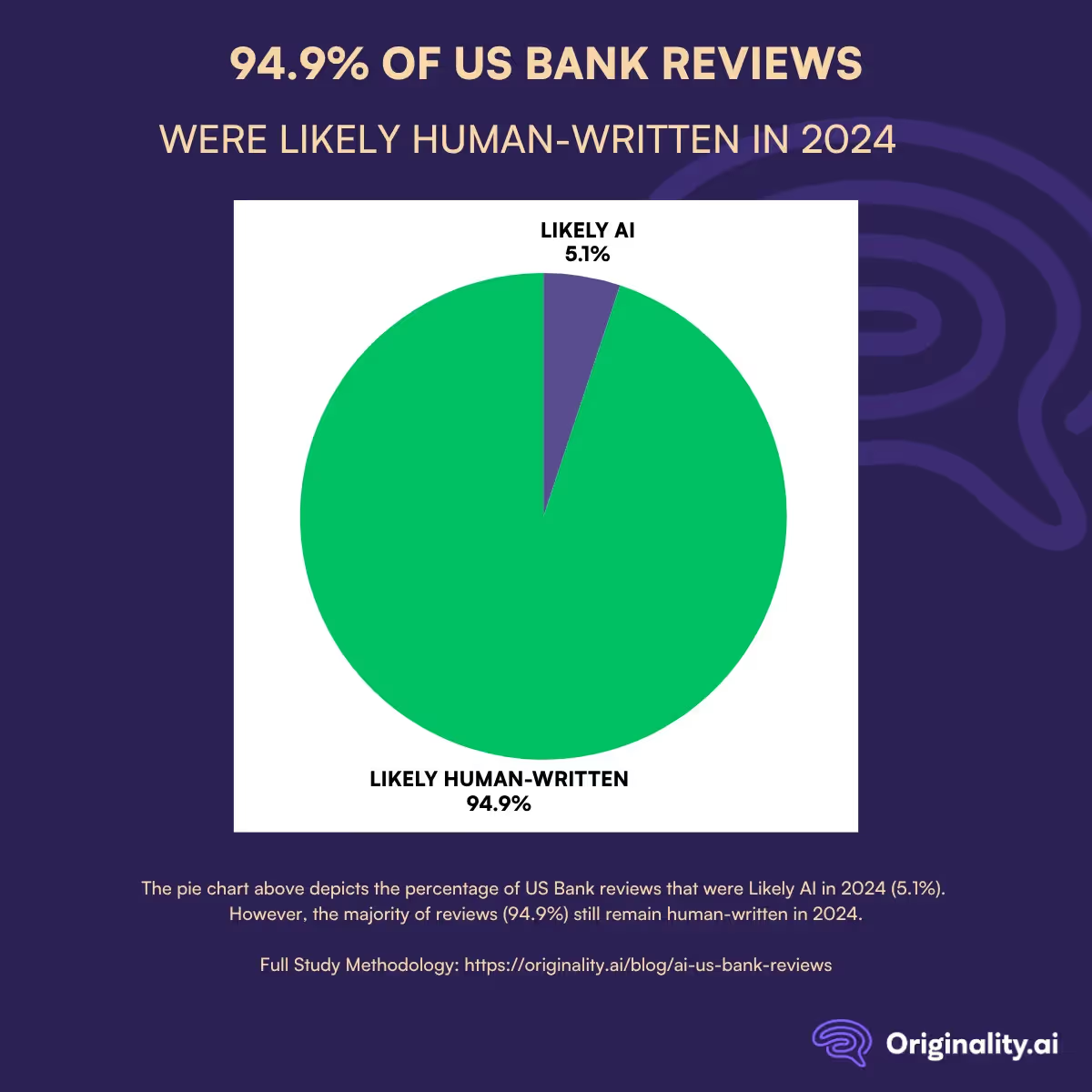

The key takeaway from this analysis of AI US Bank Reviews? While the rate of AI reviews for US Banks is rising, the majority of reviews as of 2024 are still human-written.

Why are AI reviews increasing (even if moderately)? There are a few possibilities:

The findings from this study highlight that AI content has increased in US Bank reviews since 2018. In 2024, 5.1% of US Bank reviews were AI-generated, up from just 0.56% in 2018.

Yet the majority of US bank reviews, 94.9%, were still human-written in 2024 (as depicted in the pie chart above).
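To put the two headline percentages side by side, here is a small arithmetic sketch. It uses only the figures quoted in this article; the variable names are illustrative and are not part of the study's tooling.

```python
# Figures quoted in the study text.
ai_share_2018 = 0.56  # percent of reviews flagged as AI-generated in 2018
ai_share_2024 = 5.1   # percent of reviews flagged as AI-generated in 2024

# Absolute change, in percentage points.
point_change = round(ai_share_2024 - ai_share_2018, 2)

# Relative change: how many times larger the 2024 share is than the 2018 share.
fold_increase = round(ai_share_2024 / ai_share_2018, 1)

# Share of 2024 reviews that were not flagged as AI-generated.
human_share_2024 = round(100 - ai_share_2024, 1)

print(f"Change: {point_change} percentage points")
print(f"Fold increase: {fold_increase}x")
print(f"Human-written share in 2024: {human_share_2024}%")
```

On these figures the AI-generated share rose by 4.54 percentage points, roughly a ninefold increase from a very low baseline, which matches the 94.9% human-written share reported for 2024.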

That most US bank reviews remain human-written was a pleasant surprise, considering the implications of fake AI reviews.

The increasing use of synthetic content, also observed in our studies of Glassdoor, Canadian ‘Big 5’ bank, and Canadian online bank reviews, raises important concerns about trust, authenticity, and the ability of platforms to moderate and verify review integrity.

In sectors like banking, where customer sentiment directly influences institutional credibility and consumer behavior, even a modest influx of artificial content could skew perception and undermine confidence.

The presence of AI-generated reviews for U.S. banks, even if moderate, signals a broader transformation in the way reputations are built and maintained in the digital age.

As AI tools become more embedded in everyday writing, stakeholders, including banks, regulators, and review platforms, must consider proactive measures to ensure transparency.

These may include disclosures for AI-assisted content, more robust detection models, and user education on responsible AI use.

Future research can expand on these findings by exploring the motivations behind AI-generated review creation, analyzing review sentiment and linguistic patterns, and comparing rates across industries.

Wondering whether a post or review you’re reading might be AI-generated? Use the Originality.ai AI detector to find out.


We gathered customer reviews from publicly available pages of various U.S. banks. This included both national and regional institutions to ensure broad coverage.

Custom web scrapers were used to extract relevant information. Each review was tagged with metadata such as the author, date, location, bank name, star rating, number of likes, and the full review text.

Once collected, the data was cleaned and duplicates were removed. This kept the dataset consistent and prevented any single review from being counted more than once.
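The cleaning step could be sketched roughly as follows. This is a minimal illustration and not the study's actual pipeline: the record fields (`bank`, `date`, `text`), the normalization rule, and the choice to treat a repeated (bank, text) pair as a duplicate are all assumptions made for the example.

```python
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially different copies match."""
    return re.sub(r"\s+", " ", text).strip().lower()


def deduplicate(reviews: list[dict]) -> list[dict]:
    """Keep the first occurrence of each (bank, normalized text) pair."""
    seen = set()
    unique = []
    for review in reviews:
        text = normalize(review.get("text", ""))
        if not text:
            continue  # drop reviews with no usable text
        key = (review.get("bank"), text)
        if key not in seen:
            seen.add(key)
            unique.append(review)
    return unique


# Made-up sample records, for illustration only (not study data).
sample = [
    {"bank": "Example Bank", "date": "2023-04-01", "text": "Great  service!"},
    {"bank": "Example Bank", "date": "2023-04-02", "text": "great service!"},
    {"bank": "Other Bank", "date": "2023-04-02", "text": "great service!"},
    {"bank": "Other Bank", "date": "2023-05-01", "text": "   "},
]
cleaned = deduplicate(sample)
print(len(cleaned), "unique reviews kept")
```

Keying on the bank as well as the text means the same wording posted for two different banks is kept twice. Whether that is the right rule depends on the data source, so treat it as one possible design choice.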

To assess whether a review was AI-generated, we used the Originality.ai API. This tool provides a likelihood score between 0 and 1, indicating the probability that the content was generated by an AI model. Reviews with a likelihood score at or above a designated threshold (typically 0.5) were flagged as "AI-Generated."
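The flagging rule described above can be sketched as a simple threshold check. The sketch assumes the likelihood score has already been obtained for each review and does not call the Originality.ai API. The function name, the "Human-Written" label, and the decision to count a score of exactly 0.5 as flagged are assumptions made for illustration.

```python
AI_THRESHOLD = 0.5  # assumed cutoff, based on the "typically 0.5" wording


def label_review(score: float, threshold: float = AI_THRESHOLD) -> str:
    """Map a likelihood score in [0, 1] to a label using a fixed cutoff."""
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be between 0 and 1, got {score}")
    return "AI-Generated" if score >= threshold else "Human-Written"


# Hypothetical scores, for illustration only.
for score in (0.03, 0.5, 0.97):
    print(score, label_review(score))
```

A single fixed cutoff is the simplest option. Raising the threshold would flag fewer reviews and reduce false positives, at the cost of missing more borderline cases.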

Each score was saved alongside the review’s metadata for further analysis. This methodology matches our previous studies on AI-generated content in airline and retail reviews, so that AI-generated material is identified and compared in the same way across studies.
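With scores stored next to each review's date, a year-over-year rate can be computed by grouping on the year. The sketch below shows one way to do this. The field names (`date`, `ai_score`), the ISO date format, and the sample records are hypothetical and are not taken from the study's dataset.

```python
from collections import defaultdict


def ai_share_by_year(records: list[dict], threshold: float = 0.5) -> dict[int, float]:
    """Return the percentage of reviews flagged as AI-generated for each year."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for record in records:
        year = int(record["date"][:4])  # assumes ISO dates such as "2024-01-09"
        totals[year] += 1
        if record["ai_score"] >= threshold:
            flagged[year] += 1
    return {year: 100.0 * flagged[year] / totals[year] for year in sorted(totals)}


# Made-up records, for illustration only (not study data).
records = [
    {"date": "2018-03-14", "ai_score": 0.02},
    {"date": "2018-07-01", "ai_score": 0.10},
    {"date": "2024-01-09", "ai_score": 0.91},
    {"date": "2024-02-20", "ai_score": 0.05},
    {"date": "2024-06-11", "ai_score": 0.20},
    {"date": "2024-11-30", "ai_score": 0.33},
]
shares = ai_share_by_year(records)
for year, share in shares.items():
    print(year, f"{share:.1f}%")
```

Reporting a percentage per year rather than a raw count keeps years with very different review volumes comparable.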
