The integration of artificial intelligence into daily life continues to advance.

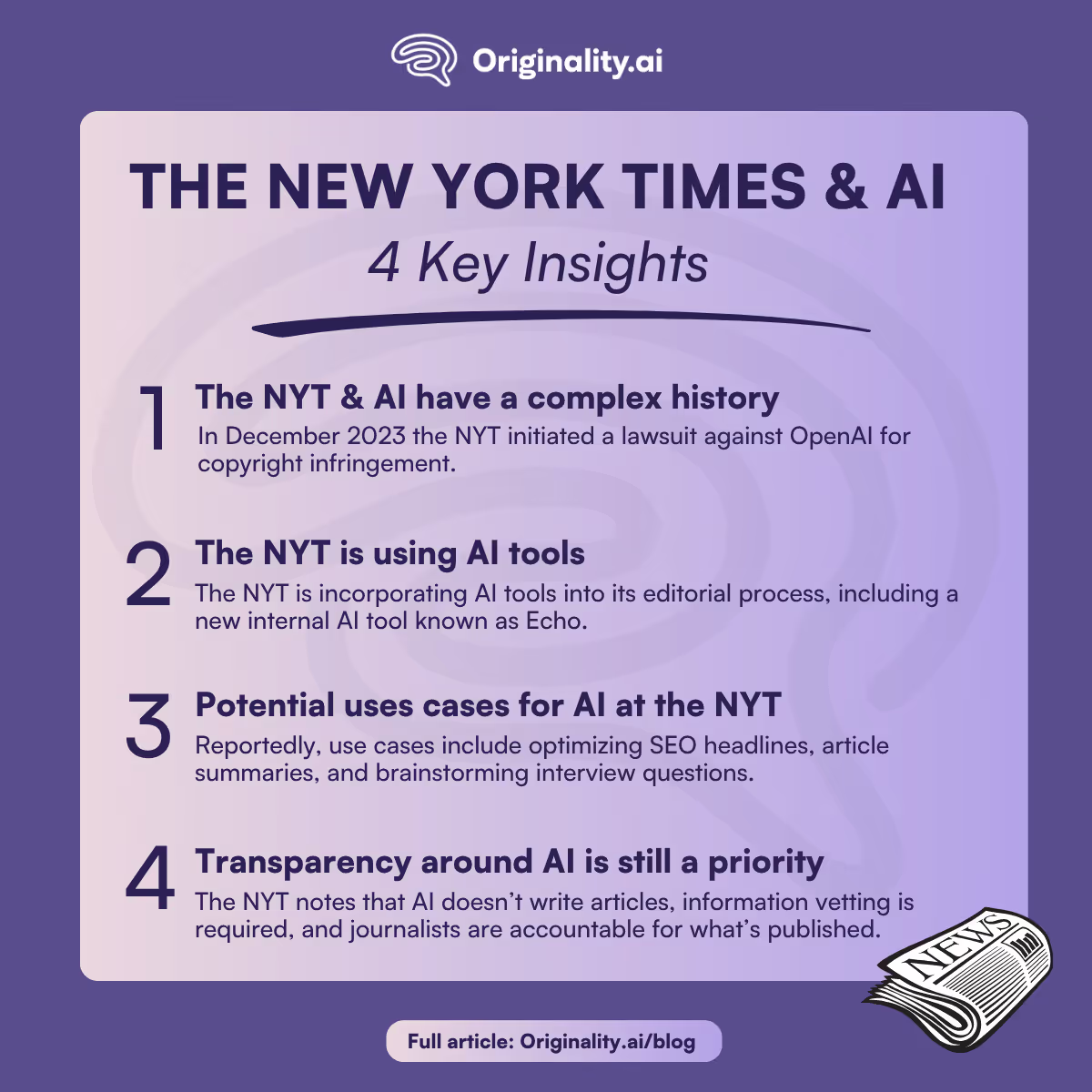

One of the most recent businesses to adopt AI is the New York Times (NYT), which has begun using AI tools and has set out policies and principles governing their use.

Anyone who has been following the generative AI conversation closely over the past few years will know that the use of AI in journalism is a significant (and controversial) topic.

Here, we will discuss how the NYT plans to adopt AI tools, the functionality of these tools, and the broader implications for the journalism industry.

Not sure if something you’re reading is AI-generated or human-written? Maintain transparency in the age of AI with Originality.ai.

Before we dive into the NYT’s AI tool adoption, it is important to provide some context regarding its history with generative AI companies, most notably OpenAI and Microsoft.

In December 2023, the NYT filed a landmark lawsuit against the two companies, alleging extensive copyright infringement (learn more about OpenAI and ChatGPT lawsuits).

The core allegation in the lawsuit is that OpenAI and Microsoft used millions of NYT articles to train the large language models (LLMs) behind ChatGPT and Copilot, and that those LLMs then generated output that closely mimicked NYT content.

“Defendants’ unlawful use of The Times’s work to create artificial intelligence products that compete with it threatens The Times’s ability to provide that service. Defendants’ generative artificial intelligence (“GenAI”) tools rely on large-language models (“LLMs”) that were built by copying and using millions of The Times’s copyrighted news articles, in-depth investigations, opinion pieces, reviews, how-to guides, and more.” - NYT Complaint Dec. 2023 (page 2)

“Defendants’ Unauthorized Use and Copying of Times Content 82. Microsoft and OpenAI created and distributed reproductions of The Times’s content in several, independent ways in the course of training their LLMs and operating the products that incorporate them.” - NYT Complaint Dec. 2023 (page 24)

In March 2025, U.S. District Judge Sidney Stein allowed most of the NYT’s claims to proceed while dismissing some, as reported by the Associated Press. So while the NYT is now using AI tools more regularly, the case is still very much ongoing.

With that in mind, it might come as a surprise that the NYT is now stating its plans to use AI tools in its content production.

The details of how it plans to use them help explain why.

While The New York Times has officially embraced artificial intelligence, it isn’t planning on using it to replace its journalists.

“We don’t use A.I. to write articles, and journalists are ultimately responsible for everything that we publish.” - NYT

Instead, as noted by TechCrunch, the aim is to use AI tools to boost productivity, integrating them thoughtfully across its editorial and product teams.

In essence, it is adopting AI to keep up with rapid technological shifts while also aiming to lead them, with responsible guardrails in place.

The aim is to have a measured approach that can maximize efficiency and creativity, whilst also maintaining the NYT’s reputation as an accurate and trustworthy news outlet.

Of course, given the NYT’s complex relationship with leading AI tools and its concerns about usage, it should come as no surprise that it has developed its own in-house AI tool, known as Echo.

According to Semafor’s reporting, the tool is designed to assist journalists with various editorial tasks and suggested functionalities include:

In addition to Echo, Semafor also reported that other AI tools, such as GitHub Copilot, NotebookLM, and Google’s Vertex AI, were approved for a variety of editorial or product development tasks.

Further, the report indicated that potential AI use cases were highlighted for the NYT editorial staff, which included:

As you can imagine, the NYT staff have very clear and strict guidelines regarding AI usage.

The NYT permits the use of AI tools for the specific uses highlighted above, but it also has clear guidelines, which include:

Given that the NYT has been very outspoken about AI tools and is an industry-leading news publisher, its adoption of AI has significant implications for the wider industry.

It will be interesting to see whether other brands will implement the same rigorous internal regulations if they choose to integrate AI into their editorial processes.

We’ve already seen some major AI blunders in 2025, most notably the AI-generated summer reading list scandal and Deloitte’s AI mistake. Both are a clear reminder of just how important fact-checking and content quality checks are when AI is used.

Overall, the integration of AI into top media outlets, like the NYT, highlights the increasing need for transparency around AI usage.

Therefore, tools like the Originality.ai AI detector and fact checker are more important than ever, helping readers understand the context of what they are reading and ensuring that publishers don’t become over-reliant on these rapidly advancing tools.
