AI Overviews are reshaping how we find and consume information online. In short, they select a handful of key sources and synthesize a short overview of the main facts about a user’s search.

The overview typically includes linked citations, which users can click to learn more from the cited source.

However, given AI’s tendency to hallucinate, as in the AI book scandal, this raises important questions about how reliable the cited sources are.

In this study, we focused specifically on Google’s AI Overviews.

To answer these questions, we built a dataset and analysis pipeline in three steps:

We started with the MS MARCO Web Search dataset, which contains real search queries typed by Bing users.

Using OpenAI’s gpt-4.1-nano, we classified each query as either YMYL or non-YMYL, and then divided the YMYL queries into four sub-categories: Health & Safety, Finance, Legal, and Politics.
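As a rough illustration of this classification step, the sketch below maps a model reply onto the five labels named above. The prompt wording, the `classify_query` helper, and the `ask` callable (a stand-in for whatever function sends a prompt to the model and returns its text) are all assumptions made for the example; the study's actual prompt and parsing logic are not described here.

```python
from typing import Callable

# Labels taken from the text: four YMYL sub-categories plus non-YMYL.
LABELS = ("Health & Safety", "Finance", "Legal", "Politics", "non-YMYL")

# Hypothetical prompt; the study's real prompt is not given in the article.
PROMPT = (
    "Classify the search query into exactly one of these labels: {labels}.\n"
    "Reply with the label only.\n"
    "Query: {query}"
)


def classify_query(query: str, ask: Callable[[str], str]) -> str:
    """Return one of LABELS, or 'unparsed' if the reply matches none of them.

    `ask` is any function that takes a prompt and returns the model's reply,
    so the model client stays outside this sketch.
    """
    reply = ask(PROMPT.format(labels=", ".join(LABELS), query=query))
    cleaned = reply.strip().lower()
    for label in LABELS:
        if cleaned == label.lower():
            return label
    # Keep unexpected replies visible instead of silently guessing a label.
    return "unparsed"
```

Passing the model call in as a function keeps the parsing testable without network access, and the explicit `unparsed` label makes malformed replies easy to count and re-run.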

From this set, we randomly sampled 29,000 YMYL queries for analysis.
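A minimal sketch of the sampling step, assuming the labeled queries are available as `(query, label)` pairs. The `sample_ymyl` name and the fixed seed are illustrative choices, not details from the study.

```python
import random

YMYL_CATEGORIES = ("Health & Safety", "Finance", "Legal", "Politics")


def sample_ymyl(labeled_queries, k, seed=0):
    """Draw up to k YMYL queries uniformly at random, without replacement.

    labeled_queries: iterable of (query, label) pairs.
    seed: fixed so that the same sample can be reproduced (an assumption;
    the article does not say whether a seed was used).
    """
    ymyl = [(q, label) for q, label in labeled_queries if label in YMYL_CATEGORIES]
    rng = random.Random(seed)
    return rng.sample(ymyl, min(k, len(ymyl)))
```

For the study's sample size, the call would look like `sample_ymyl(labeled_queries, k=29_000)`.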

We used SerpAPI, which provides structured access to Google SERPs, to retrieve AI Overview presence and citations, as well as the top-100 organic results for each query.

Every citation and organic URL was then classified as AI-generated or human-written using the Originality.ai AI Detection Lite 1.0.1 model.

Documents that could not be confidently classified (for example, broken links, PDFs, videos, or pages with too little text) were grouped into an unclassifiable category.
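The three-way grouping can be sketched as follows. The `Document` fields, the 50-word minimum, and the 0.5 score cutoff are hypothetical values chosen for illustration; the article does not state the thresholds used, and the detector call itself is left out.

```python
from dataclasses import dataclass
from typing import Optional

MIN_WORDS = 50      # hypothetical: below this, a page has "too little text"
AI_THRESHOLD = 0.5  # hypothetical cutoff on the detector's AI score


@dataclass
class Document:
    url: str
    text: Optional[str]        # None for broken links, PDFs, videos, etc.
    ai_score: Optional[float]  # detector output in [0, 1]; None if not scored


def bucket(doc: Document) -> str:
    """Return 'ai', 'human', or 'unclassifiable' for one document."""
    if doc.text is None or doc.ai_score is None:
        return "unclassifiable"
    if len(doc.text.split()) < MIN_WORDS:
        return "unclassifiable"
    return "ai" if doc.ai_score >= AI_THRESHOLD else "human"
```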

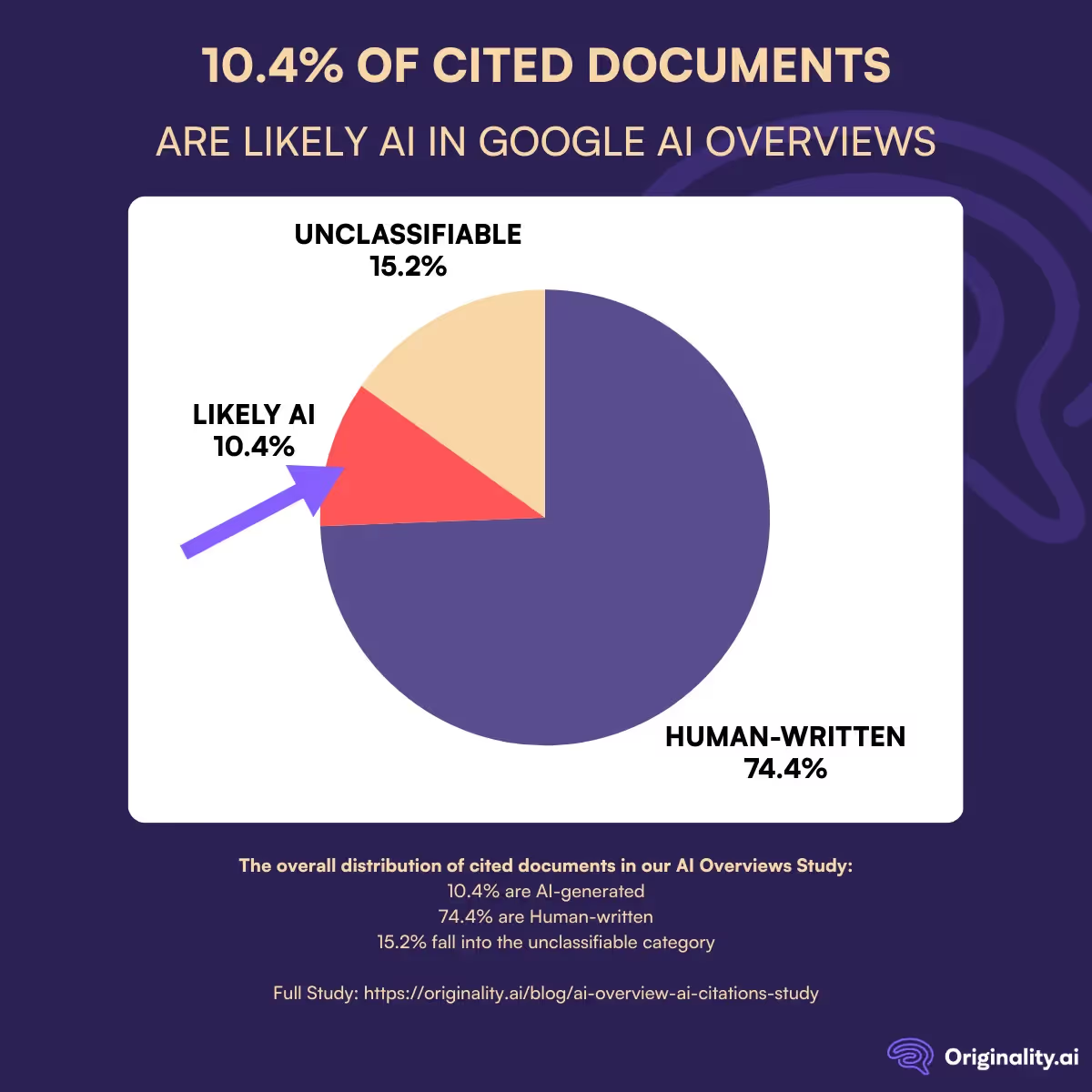

We began with the overall distribution of cited documents.

Within the unclassifiable group, the most common reasons were:

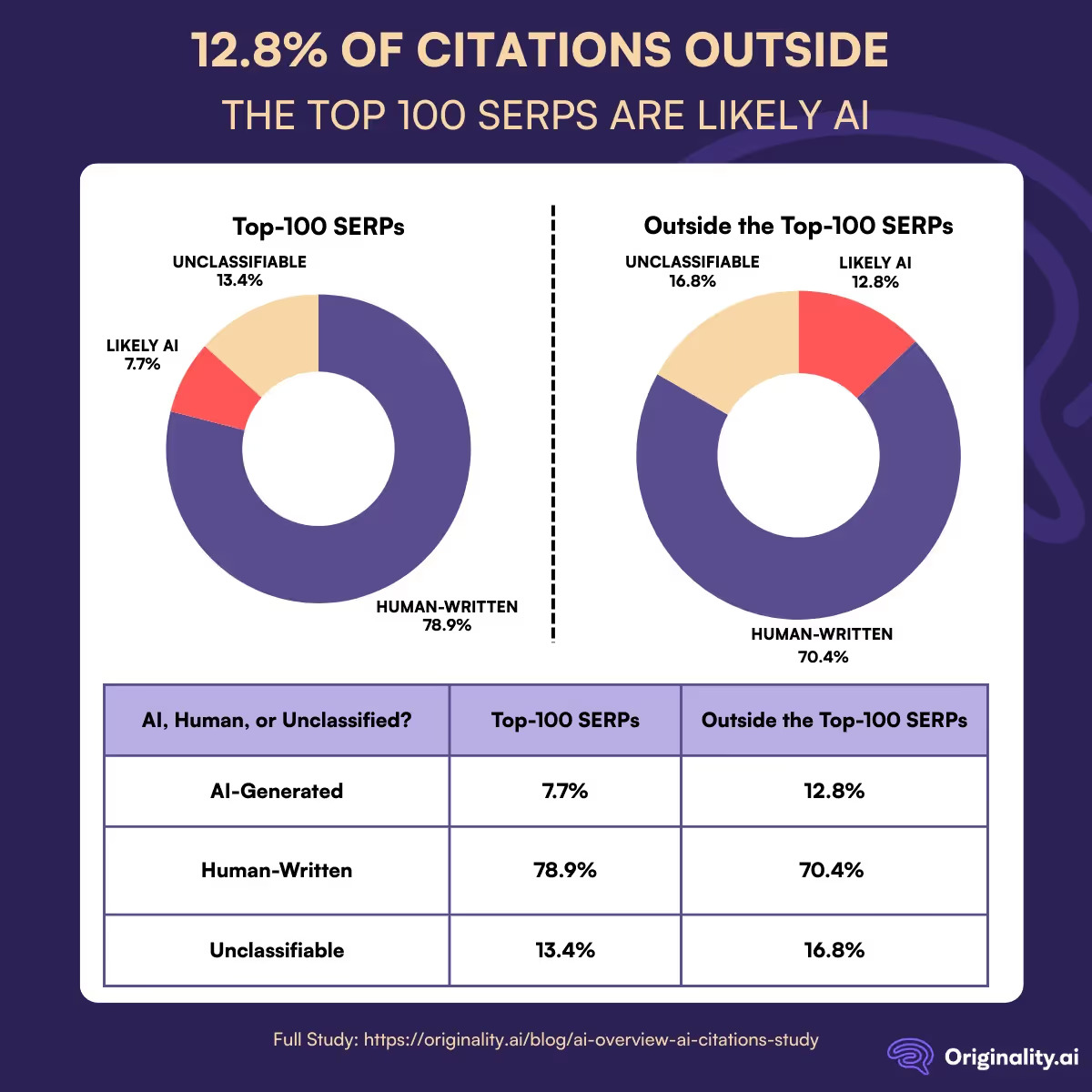

We then compared how many citations appeared among the top-100 organic results with how many fell outside them (that is, were absent from the top 100 for the same query).
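One way to run this comparison for a single query is sketched below. It assumes the citation URLs and the organic result URLs are already available as plain lists, and it matches them on a normalized host and path. The normalization rule (ignore scheme, a leading `www.`, trailing slashes, query strings, and fragments) is a simplification chosen for the example, not the study's documented matching rule.

```python
from urllib.parse import urlsplit


def normalize(url: str) -> str:
    """Reduce a URL to host + path so trivially different forms compare equal."""
    parts = urlsplit(url.strip())
    host = (parts.hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return host + parts.path.rstrip("/")


def split_by_serp(citations, organic, top_n=100):
    """Split citation URLs into those inside and outside the top_n organic results."""
    top = {normalize(u) for u in organic[:top_n]}
    inside = [c for c in citations if normalize(c) in top]
    outside = [c for c in citations if normalize(c) not in top]
    return inside, outside
```

Stricter or looser matching (for example, matching on domain only) would shift the inside/outside split, so the rule is worth stating alongside any reported percentages.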

The next key finding was that citations from outside the top-100 results contained a higher proportion of AI-generated content than citations from within them.
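To compare the two groups, the share of each label can be computed per group, as in the sketch below. The `label_shares` helper is hypothetical, and it divides by all citations in the group, including unclassifiable ones; whether the study used that denominator or only classifiable documents is not stated.

```python
from collections import Counter


def label_shares(labels):
    """Return {label: fraction of the group} for a list of labels.

    Labels are expected to be 'ai', 'human', or 'unclassifiable'.
    An empty group yields an empty dict.
    """
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()} if total else {}
```

Calling `label_shares` once on the labels of citations inside the top 100 and once on those outside gives the two proportions being compared.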

About 1 in 10 citations in Google’s AI Overviews is AI-generated.

More than half of the citations come from outside the top-100 search results, and these sources are more likely to include AI-written material.

Because this study looked only at YMYL queries (health, finance, law, and politics), where inaccurate information can do real harm, the findings carry more weight than they would for everyday searches.

Most citations are still human-written, but even a small share of AI-generated content in these areas raises concerns about reliability and trust.

How could this impact AI models?

Beyond immediate citation quality, there is a longer-term risk of model collapse.

AI Overviews themselves are not part of training data, but by surfacing AI-generated sources, they boost those sources’ visibility and credibility.

This, in turn, increases the likelihood that such material is crawled into future training sets.

Over time, models risk learning from outputs of earlier models rather than from human-authored knowledge. That recursive feedback loop can amplify errors, reduce diversity of perspectives, and ultimately degrade the reliability of online information.

