In recent years, the rapid advancement of artificial intelligence (AI) tools capable of generating human-like text has impacted a number of fields, including scientific publishing.

The emergence of large language models (LLMs), such as ChatGPT, has made it increasingly easy for researchers to draft scientific content, including abstracts.

As these tools become more accessible and integrated into academic workflows, questions arise about their impact on the authenticity, originality, and transparency of scientific communication.

This study measures the rate of AI-generated content in abstracts from journals whose subject is artificial intelligence itself.

Abstracts are often the only portion of a paper read by policymakers, media, and the general public; as a result, their integrity is paramount.

Using a dataset of thousands of journal abstracts from AI-related publications spanning 2018 to 2025, this study examines the prevalence of AI-generated content over time.

The findings of this research will contribute to ongoing discussions about the role of AI in academia, the potential need for transparency in AI-assisted writing, and the ethical implications for scientific authorship.

This study investigates the prevalence and trajectory of AI-generated content in scientific abstracts within the field of artificial intelligence from 2018 to 2025.

Our analysis is guided by these central questions:

Together, these objectives aim to provide a data-driven foundation for understanding how generative AI is reshaping the academic discourse in artificial intelligence, highlighting both the opportunities and risks posed by its integration into scientific writing.

These findings highlight that AI tools are changing how research is communicated, especially in AI scholarship, a field at the forefront of these technological developments.

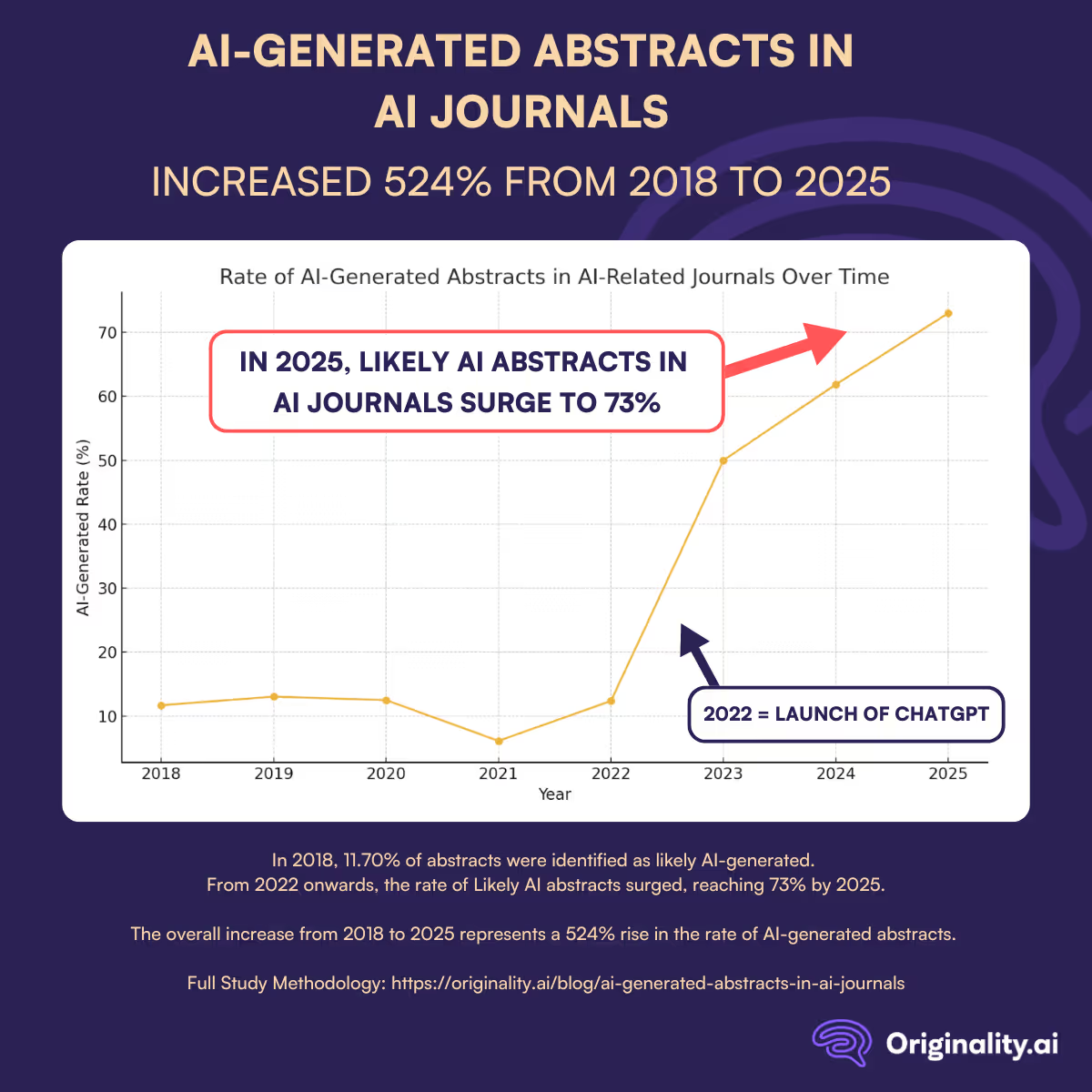

The proportion of AI-generated abstracts in scientific journals focused on artificial intelligence has shown fluctuations over the time period studied, with an overall upward trajectory, especially since 2022.

This trend is notable because it reflects the broader adoption of AI technology within the AI industry and its scholarship.

The year 2022 marked a notable shift, after which the use of AI to write abstracts in AI journals accelerated.

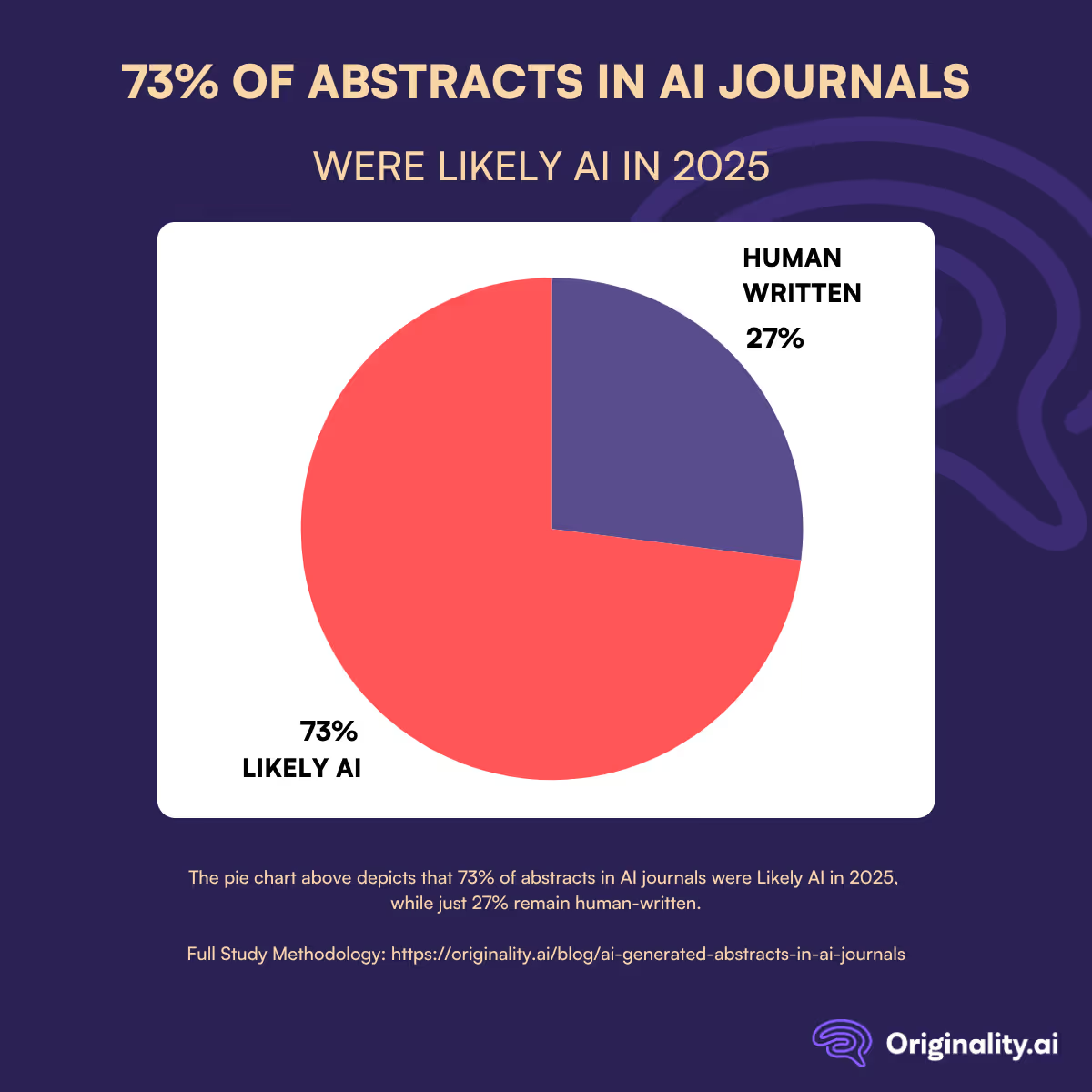

From 2022 onwards, the rate of abstracts classified as likely AI-generated surged, reaching 73% by 2025.

Between 2018 and 2025, this represents a 524% increase in the rate of AI-generated abstracts, a substantial shift in writing practices within the field of artificial intelligence itself.
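A 524% increase is a relative change, so together with the 2025 figure it pins down the implied starting rate: if the 2025 rate equals the 2018 rate multiplied by (1 + 5.24), and the 2025 rate is 73%, then the 2018 rate is roughly 11.7%. The sketch below makes that back-calculation explicit. It assumes the 524% refers to the relative change in the share of flagged abstracts. The resulting 2018 value is derived from the stated numbers and is not a separately reported figure.

```python
def percent_increase(start: float, end: float) -> float:
    """Relative change from start to end, expressed in percent."""
    return (end - start) / start * 100


def implied_start(end: float, pct_increase: float) -> float:
    """Back out the starting value from an end value and a relative increase in percent."""
    return end / (1 + pct_increase / 100)


# Figures stated in the text: 73% flagged in 2025, a 524% increase since 2018.
baseline_2018 = implied_start(73.0, 524.0)
print(f"Implied 2018 rate: {baseline_2018:.1f}%")  # Implied 2018 rate: 11.7%
print(f"Check: {percent_increase(baseline_2018, 73.0):.0f}% increase")  # Check: 524% increase
```

Because the published percentages are rounded, the true 2018 rate could differ slightly from this back-calculated value.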

This trend underscores the accelerating influence of AI on both the content and the medium of scientific discourse in the very field it seeks to transform.

The growing presence of AI-generated abstracts from 2018 to 2025 within the field of artificial intelligence reflects a noteworthy evolution in how scientific content is created and presented.

Unlike other domains where AI-generated content may still be emerging, the field of AI presents a unique case: researchers are not only developing AI technologies but are also among the earliest adopters of these tools in their writing practices.

The data shows a modest and fluctuating pattern between 2018 and 2022.

However, after the launch of popular tools such as ChatGPT in late 2022, the rate of AI-generated abstracts climbed sharply.

By 2025, the rate reached 73%, indicating that the majority of sampled abstracts in AI journals were likely written with generative AI.

This trend raises nuanced questions about the integration of AI tools in academic publishing.

On the positive side, AI-assisted writing tools offer efficiencies in scientific communication, such as helping researchers draft clear, grammatically sound abstracts and reducing the language barrier for non-native English speakers.

However, in a domain so closely tied to the development of these technologies, there are added concerns: how do we ensure accountability when the tools being studied are also influencing the presentation of the research itself?

Questions around disclosure, intellectual authorship, and the validity of peer-review processes must be revisited as AI-generated content becomes more prevalent, even in the field that builds these tools.

This study offers a lens into the evolving landscape of scientific writing within the artificial intelligence community.

These results suggest that AI tools are changing how research is communicated and published, especially in artificial intelligence, a field which is at the forefront of these technological developments.

As the use of generative AI becomes increasingly normalized, academic institutions, journals, and researchers must establish clear frameworks that define appropriate use, ensure transparency, and maintain the integrity of scholarly communication.

In a field where the subject and the medium are becoming increasingly intertwined, maintaining a strong ethical foundation will be essential to fostering trust and accountability in the next generation of academic publishing.



We investigated the prevalence of AI-generated abstracts in AI-related scientific literature from 2018 to 2025 using publicly available academic metadata and the Originality.ai AI detection tool.

Data Collection

Using the OpenAlex API, we retrieved up to 500 works per year that matched the keyword “artificial intelligence” and had a non-empty abstract. OpenAlex stores abstracts as an inverted index, which maps each word to its positions in the text, so a custom function reconstructed the original text from that index. Metadata such as title, journal, and publication date were retained.
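The study's reconstruction function is not shown. The sketch below assumes the OpenAlex `abstract_inverted_index` format, in which each word maps to a list of its zero-based positions in the abstract. `reconstruct_abstract` is a hypothetical name used for illustration.

```python
from typing import Dict, List, Optional


def reconstruct_abstract(inverted_index: Optional[Dict[str, List[int]]]) -> str:
    """Rebuild plain text from an OpenAlex-style abstract_inverted_index.

    Each key is a word and each value lists the positions where that word
    occurs; placing every word at each of its positions restores the text.
    """
    if not inverted_index:
        return ""  # missing or empty index -> treat as no abstract
    positioned = [
        (position, word)
        for word, positions in inverted_index.items()
        for position in positions
    ]
    positioned.sort()  # order words by their position in the original abstract
    return " ".join(word for _, word in positioned)


example = {"Deep": [0], "learning": [1, 4], "models": [2], "improve": [3]}
print(reconstruct_abstract(example))  # Deep learning models improve learning
```

Sorting by position restores the word order, and a word that occurs more than once is placed at each of its positions.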

AI Detection

Reconstructed abstracts of at least 50 words were analyzed using the Originality.ai API. For each abstract, the API returned a confidence score indicating the probability of AI authorship and a binary classification flag (is_ai_generated). If a scan failed, it was retried up to three times.
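The filtering and retry logic described above can be sketched as follows. The Originality.ai request itself is left abstract. `scan_fn` is a hypothetical callable that stands in for whatever client call the study used, and the shape of its result dictionary is an assumption. This sketch reads "retried up to three times" as three retries after the first attempt, for four attempts in total. The fixed delay between attempts is also an assumption.

```python
import time
from typing import Any, Callable, Dict, Optional

MIN_WORDS = 50   # abstracts shorter than this are not scanned
MAX_RETRIES = 3  # retries allowed after the first failed attempt


def scan_with_retries(
    text: str,
    scan_fn: Callable[[str], Dict[str, Any]],
    max_retries: int = MAX_RETRIES,
    delay_s: float = 1.0,
) -> Dict[str, Any]:
    """Scan one abstract, skipping short texts and retrying when the scan raises."""
    if len(text.split()) < MIN_WORDS:
        return {"scan_status": "skipped_too_short"}
    last_error: Optional[Exception] = None
    for attempt in range(max_retries + 1):
        try:
            # Hypothetical result shape, e.g. {"ai_score": 0.93, "is_ai_generated": True}
            result = scan_fn(text)
            return {"scan_status": "ok", **result}
        except Exception as exc:  # any API or network error triggers a retry
            last_error = exc
            if attempt < max_retries:
                time.sleep(delay_s)  # brief pause before the next attempt
    return {"scan_status": "failed", "error": str(last_error)}
```

Passing the scanner in as a function keeps the filtering and retry logic testable without network access.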

Output and Storage

Results were compiled into a master dataset using pandas and exported as a CSV file, including fields like year, title, abstract, journal, AI score, and scan status.
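Below is a minimal pandas sketch of the compilation step and the per-year rate reported in the results. It assumes hypothetical column names (`year`, `title`, `abstract`, `journal`, `ai_score`, `is_ai_generated`, `scan_status`), with `"ok"` marking a completed scan. The study's actual schema and aggregation may differ, and the toy records are illustrative, not study data.

```python
from typing import Optional

import pandas as pd


def compile_dataset(records: list, csv_path: Optional[str] = None) -> pd.DataFrame:
    """Combine per-abstract scan results into one DataFrame, optionally exporting a CSV."""
    df = pd.DataFrame(records)
    if csv_path is not None:
        df.to_csv(csv_path, index=False)
    return df


def yearly_ai_rate(df: pd.DataFrame) -> pd.Series:
    """Percentage of successfully scanned abstracts flagged as AI-generated, per year."""
    scanned = df[df["scan_status"] == "ok"]  # exclude failed or skipped scans
    flags = scanned["is_ai_generated"].astype(float)  # True -> 1.0, False -> 0.0
    return flags.groupby(scanned["year"]).mean().mul(100).round(1)


# Toy records for illustration only.
toy_records = [
    {"year": 2018, "title": "A", "journal": "J1", "ai_score": 0.12, "is_ai_generated": False, "scan_status": "ok"},
    {"year": 2025, "title": "B", "journal": "J2", "ai_score": 0.97, "is_ai_generated": True, "scan_status": "ok"},
    {"year": 2025, "title": "C", "journal": "J2", "ai_score": None, "is_ai_generated": None, "scan_status": "failed"},
]
print(yearly_ai_rate(compile_dataset(toy_records)))
```

Here, failed scans are excluded from the denominator. Counting them as human-written instead would understate the rate.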

This approach enabled consistent, scalable tracking of generative AI use in scientific abstracts over time.
