Over the past year or so, the internet’s content economy has entered a new era, one that content creators, SEOs, and publishers cannot afford to ignore.

Generative AI tools like ChatGPT, Claude, and even Google’s new AI Overviews are reshaping how people consume information online.

Increasingly, users aren’t clicking through to original sources. Instead, they’re trusting AI-generated summaries to deliver the key takeaways upfront.

While this may look like a win for convenience, it is quietly driving a damaging shift beneath the surface.

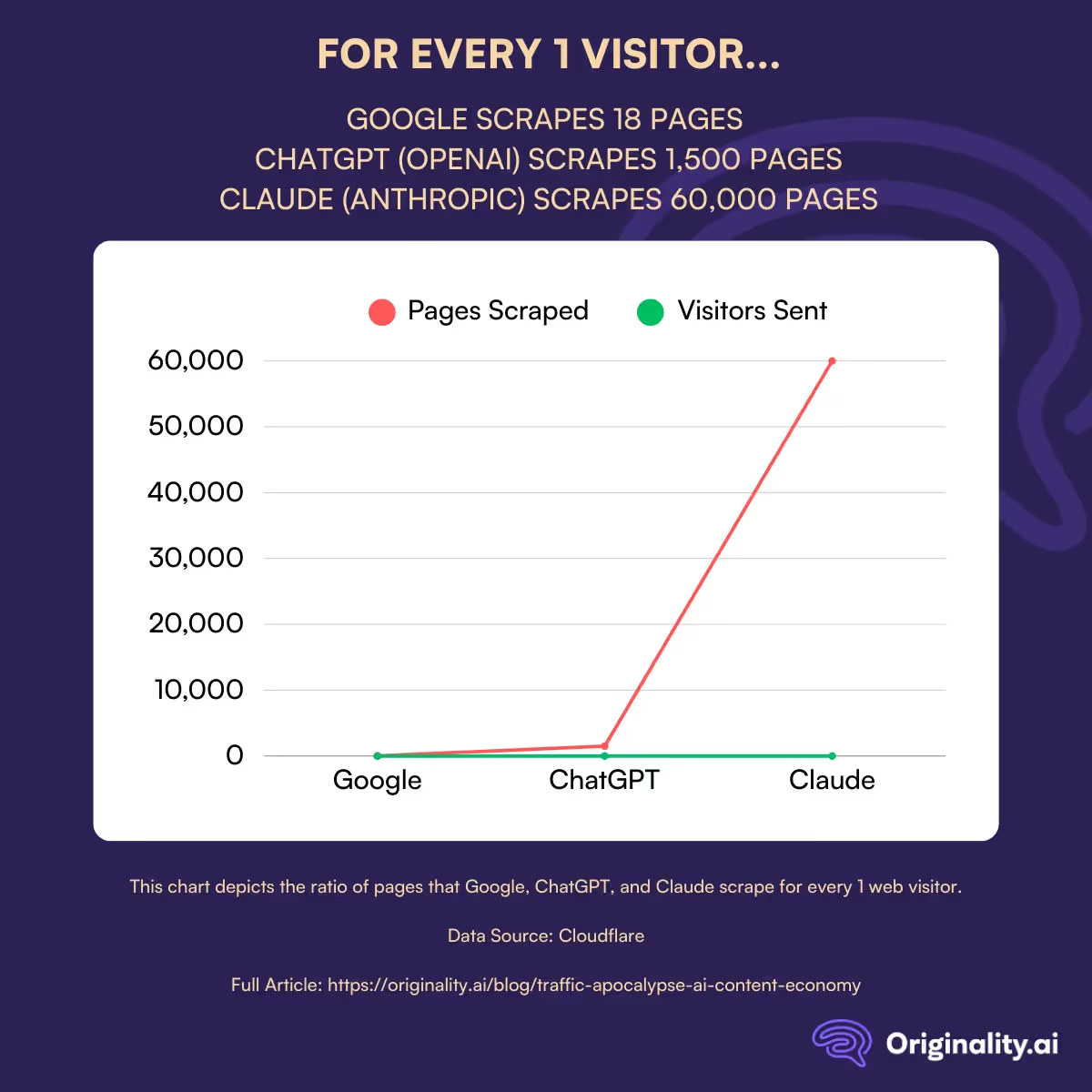

As AI-fueled interfaces increasingly bypass websites in favor of delivering answers directly, Cloudflare’s recent data paints a sobering picture of how much content these models are scraping and how little traffic they send back to creators.

According to Cloudflare, the longstanding economic model of the web, “search engines index content and direct users back to original websites, generating traffic and ad revenue for websites of all sizes,” is dead.

Instead, AI crawlers collect content without sending visitors back to the original source, depriving creators of the revenue, and the satisfaction, that come from readers actually viewing their work.

According to Cloudflare’s research:

All this means AI systems are consuming far more content than they return in value, tilting the web’s original value exchange firmly in favor of the platforms.

In simple terms, creators are subsidizing AI’s rapid growth while receiving less and less traffic in return.

As Cloudflare puts it:

“If the Internet is going to survive the age of AI, we need to give publishers the control they deserve and build a new economic model that works for everyone – creators, consumers, tomorrow’s AI founders, and the future of the web itself.”

But the growing imbalance between traffic and scraping isn’t just a tech issue. In fact, it’s far from it.

It’s a reflection of how user behaviour is evolving as we move firmly into the AI era.

More and more, users are turning to generative AI tools like ChatGPT, Claude, and AI Overviews to answer questions directly, without needing to click through to the original sources.

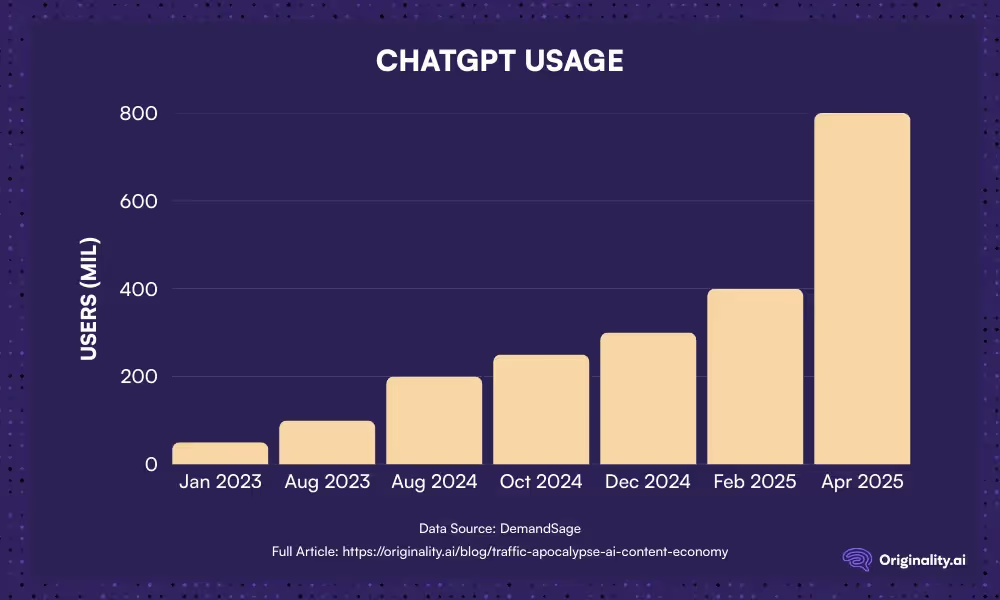

Consider that ChatGPT has approximately 800 million weekly active users, as noted in our LLM Visibility Statistics Guide.

With usage at that scale, it’s easy to see how quickly these tools are becoming part of day-to-day life.

The appeal is obvious. AI answers are fast, neatly packaged, and often ‘good enough’ to satisfy the average query.

But that convenience comes at a cost.

Search used to be a two-step process: find a relevant result, then click through to the original website to read it.

Today, step two is disappearing. The AI answer is the product.

Even on Google, the shift is visible. With the rollout of AI Overviews, users get a generated summary right at the top of the page, reducing the need to visit individual sites.

Fewer clicks, lower traffic, and fewer chances for creators to garner an audience and monetize their work.

When traffic drops, so do ad revenue, affiliate income, newsletter growth, and every other business model built on visibility.

Take this example from 404 Media, an independent tech publication that publishes deeply reported original journalism.

In a recent piece, they revealed that Google sent them over 3 million visitors, whereas ChatGPT sent just 1,600.
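To put that gap in proportion, here is a quick back-of-the-envelope ratio using the two figures above. It treats “over 3 million” as exactly 3 million, so the true ratio is, if anything, higher:

$$
\frac{3{,}000{,}000 \text{ visitors from Google}}{1{,}600 \text{ visitors from ChatGPT}} = 1{,}875
$$

In other words, Google sent 404 Media at least 1,875 visitors for every one that ChatGPT sent.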

This isn’t an isolated case. In reality, it’s closer to the norm.

At a structural level, this means fewer incentives to invest in high-quality content. After all, why would you spend hours (or days) producing original research, reporting, or analysis if an AI system is going to summarize it instantly, without linking to, crediting, or compensating you?

That means fewer independent journalists, fewer niche experts and creators, and a web increasingly filled with derivative content built on uncredited sources.

Now, if you’ve just read that and you’re slipping into a mild existential crisis, let me bring you back. The landscape may be shifting fast, but creators aren’t powerless.

There are tangible steps that publishers, SEOs, and content teams can take to regain control, protect their work, and push the industry forward in the right direction.

To respond effectively, creators first need visibility into where and how their content is being used.

Tools like Originality.ai can help you detect AI-generated content across the web and audit how much of a page was written by an LLM.

Getting a better understanding of the prevalence of AI within your niche is the first step to reclaiming ownership of your content.

But detection alone won’t drive change. It also requires collective pressure: pressure on platforms, pressure on policymakers, and pressure on the wider tech industry.

Creators and publishers should advocate for transparent citation practices, clear licensing models, and clearer ways to opt in to or out of AI crawling.
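Until clearer standards exist, the main opt-out lever publishers control today is robots.txt. The sketch below uses Python’s standard `urllib.robotparser` to check which crawlers a given robots.txt would allow. It assumes an illustrative policy that blocks `GPTBot` (OpenAI’s crawler) and `CCBot` (Common Crawl’s crawler); the rules, the example URL, and the `crawl_permissions` helper are hypothetical, made up for this example. Keep in mind that robots.txt only works if a crawler chooses to respect it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt policy for illustration, not a recommendation.
# Compliance with these rules is voluntary on the crawler's side.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: *
Allow: /
"""


def crawl_permissions(robots_txt: str, agents: list[str], url: str) -> dict[str, bool]:
    """Return, for each user agent, whether the robots.txt rules allow it to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse rules from text instead of fetching them
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    perms = crawl_permissions(
        ROBOTS_TXT,
        ["GPTBot", "CCBot", "Googlebot"],
        "https://example.com/articles/original-research",  # placeholder URL
    )
    for agent, allowed in perms.items():
        print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

Run as a script, this reports GPTBot and CCBot as blocked and Googlebot as allowed, since Googlebot falls through to the catch-all `*` rule. Blocking this way is all-or-nothing per crawler; it says nothing about licensing or payment.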

Cloudflare’s Pay-Per-Crawl is certainly a step in the right direction, signalling that platforms should pay to access publisher content, but more action is needed.

As LLM use continues to grow, it’s essential for creators to keep track of where their content is referenced or paraphrased, whether those references are getting credited, and how competitors are also responding to these shifts.

A practical way to do this is to use alerts, analytics, and search monitoring tools to keep tabs on how your work is being surfaced.
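As one example of that kind of monitoring, your own server logs can show how often AI crawlers fetch your pages. The Python sketch below counts requests per crawler in access-log lines, assuming the common Combined Log Format, where the User-Agent is the last quoted field. The crawler names in `AI_CRAWLER_TOKENS`, the `count_ai_crawler_hits` helper, and the sample log lines are illustrative assumptions; check each AI company’s documentation for the user agents it currently uses.

```python
import re
from collections import Counter

# Illustrative substrings found in some AI crawlers' User-Agent headers.
# This list is an assumption for the example, not an exhaustive registry.
AI_CRAWLER_TOKENS = ("GPTBot", "ClaudeBot", "CCBot", "PerplexityBot")

# Combined Log Format ends with: "request" status bytes "referer" "user-agent"
LOG_PATTERN = re.compile(r'"[^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"$')

# Made-up sample lines for demonstration only.
SAMPLE_LOG = [
    '203.0.113.5 - - [10/Jul/2025:12:00:00 +0000] "GET /article HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.1)"',
    '198.51.100.7 - - [10/Jul/2025:12:01:00 +0000] "GET /research HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; ClaudeBot/1.0)"',
    '192.0.2.44 - - [10/Jul/2025:12:02:00 +0000] "GET /article HTTP/1.1" 200 5120 "https://www.google.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    '203.0.113.9 - - [10/Jul/2025:12:03:00 +0000] "GET /guide HTTP/1.1" 200 4096 "-" "Mozilla/5.0 (compatible; GPTBot/1.1)"',
]


def count_ai_crawler_hits(lines):
    """Count requests per AI crawler token found in access-log User-Agent fields."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.search(line.strip())
        if match is None:
            continue  # skip lines that don't look like Combined Log Format
        agent = match.group("agent")
        for token in AI_CRAWLER_TOKENS:
            if token in agent:
                counts[token] += 1
                break  # attribute each request to at most one crawler
    return counts


if __name__ == "__main__":
    for crawler, hits in count_ai_crawler_hits(SAMPLE_LOG).most_common():
        print(f"{crawler}: {hits} request(s)")
```

Pointed at a real log file (for example, by passing an open file object instead of `SAMPLE_LOG`), the same function gives a rough picture of crawl volume that you can set against the referral traffic those platforms actually send back.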

The more aware you are, the better you can adapt your strategy, update your licensing policies, or call out misuse.

Let’s be real. AI isn’t going away.

But the way it interacts with content (and compensates the people who create it) can still be shaped.

If you’re a publisher, marketer, SEO, or even a journalist, now is the time to take a stand. The future of the open web depends on transparency, attribution, and a fairer exchange between creators and platforms.

Want to know if your content is being used by AI without attribution? Try Originality.ai to scan your work and detect how LLMs may be leveraging it.