It’s no secret that AI and LLMs are sharper than ever.

That leaves one major question unanswered, though. Can AI content ever truly pass as human?

Assignment AI Humanizer claims it can, by reshaping AI content and attempting to make it sound more ‘human’.

In this review, we dig into how the Assignment AI Humanizer performs, whether its output is detectable, and why tools like this are so popular.

The Assignment AI Humanizer is a rewriting tool built to turn AI-generated content into content that reads more like it was written by a human.

The main pitch? Helping text slip past AI detectors.

The tool is easy to use: within moments, users receive a rewritten version of their content that is meant to sound more natural and avoid being flagged by AI detectors.

For several years now, we have been tracking the volume of AI-written content making its way onto the internet.

Whether it's essays, blog posts, or business copy, the rise in generated content has also raised the importance of (and demand for) detection tools.

Teachers, editors, and companies increasingly rely on platforms like Originality.ai to separate human work from machine output.

But they aren’t the only tools that have seen an increase in demand. AI “humanizers” have also increased in popularity, promising to smooth out the mechanical edge of AI writing and make it harder for detectors to catch.

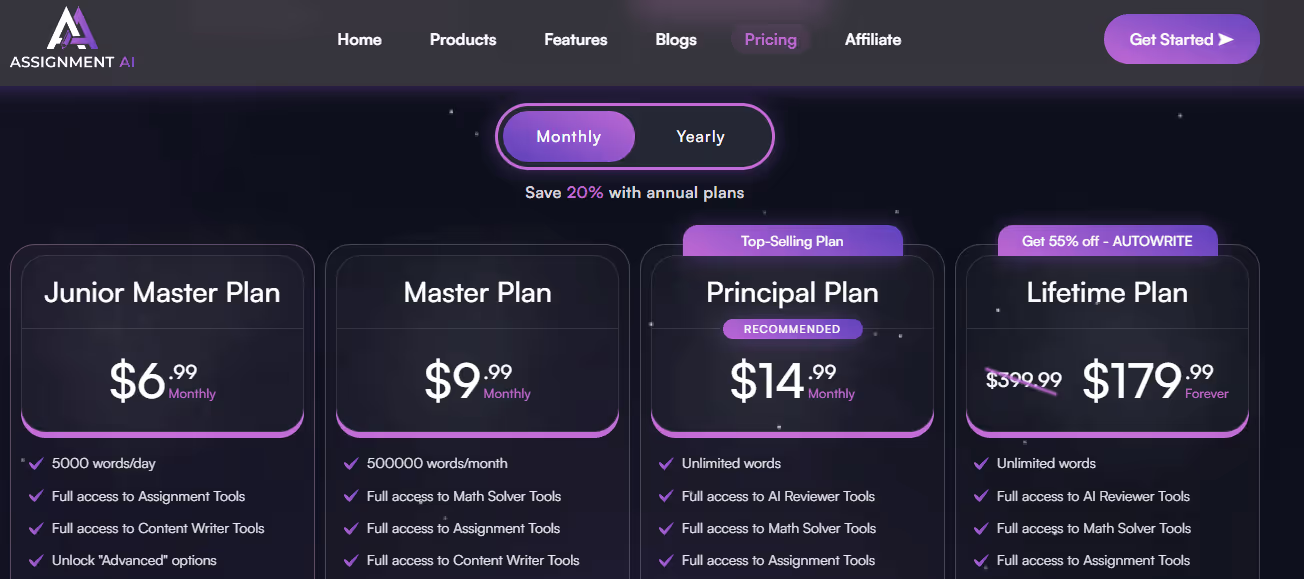

The Assignment AI Humanizer advertises four pricing tiers: Junior Master, Master, Principal, and Lifetime. You can also choose between a monthly or annual account.

There is also a free account, which is hidden behind the left arrow below the plans.

The free version is very generous, letting users ‘humanize’ up to 800 words a day.

To see if the Assignment AI Humanizer actually delivers on its promise, we put it through a series of tests against three of the most popular AI detectors: Originality.ai, Writer, and ZeroGPT.

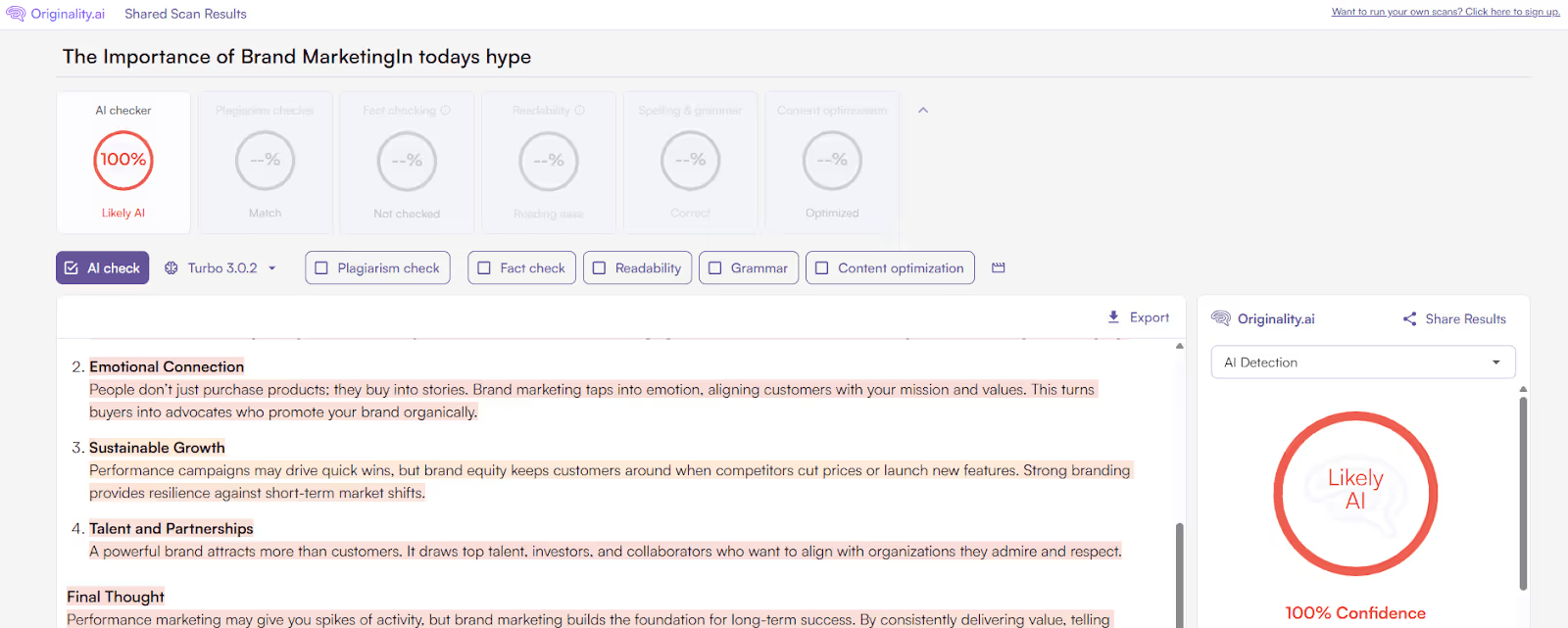

Step 1: We began by using ChatGPT to generate a brand-new article on the importance of brand marketing. This served as our base AI sample, which every detector should flag as 100% AI.

Step 2: We then ran that draft through each detector, copied word for word with no edits, to establish a baseline for each tool.

Step 3: We then fed the same draft into the Assignment AI Humanizer, letting it rewrite the text into a supposedly more “natural” tone.

Step 4: Finally, we took the humanized version word-for-word and pasted it into the same three detectors to see if the tool’s tweaks were enough to get past them.

Originality.ai, original content (AI-generated): 100% confidence that the text is Likely AI

Originality.ai, Assignment AI Humanizer version: 100% confidence that the text is Likely AI

Writer, original content (AI-generated): 90% human-generated (10% AI-generated)

Writer, Assignment AI Humanizer version: 92% human-generated (8% AI-generated)

ZeroGPT, original content (AI-generated): 96.64% AI-generated

ZeroGPT, Assignment AI Humanizer version: 95.06% AI-generated

As the chart above illustrates, the Assignment AI Humanizer text struggled to consistently bypass detection.

Originality.ai flagged both the ChatGPT draft and the Assignment AI Humanizer rewrite as Likely AI with 100% confidence.

Writer was much less effective, identifying both versions as likely human-written, while ZeroGPT, like Originality.ai, scored both pieces as likely AI-generated.

In short, text rewritten by the Assignment AI Humanizer is still detectable by the best-in-class Originality.ai AI detection tool.
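To put a number on how little the scores moved, here is a minimal Python sketch that computes the before-and-after shift for each detector from the figures reported above. The names `scores` and `ai_score_shift` are our own illustrative choices, not part of any detector's API, and the Writer values assume its AI share is simply the complement of its human score (10% and 8%).

```python
# Reported AI scores in percent: (original ChatGPT draft, humanized version).
# Assumption: Writer's AI share is taken as 100 minus its human score.
# The third label follows the detector list given in the test setup.
scores = {
    "Originality.ai": (100.0, 100.0),
    "Writer": (10.0, 8.0),
    "ZeroGPT": (96.64, 95.06),
}


def ai_score_shift(before: float, after: float) -> float:
    """Return the change in AI score in percentage points.

    A negative value means the humanized text looked less AI-like.
    """
    return round(after - before, 2)


# Compute the shift for every detector.
shifts = {name: ai_score_shift(before, after) for name, (before, after) in scores.items()}

for name, shift in shifts.items():
    print(f"{name}: {shift:+.2f} percentage points")
```

On these numbers, no detector's AI score moved by more than 2 percentage points, and no verdict changed.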


Because AI detectors are now widely used, AI humanizers have grown in popularity too: they aim to rewrite AI-generated text so that it bypasses AI detection.

Humanized output does not reliably get past detection: advanced platforms like Originality.ai still catch it. Read more in our AI detector accuracy study.

Humanizing does not always make text read better. Many users notice that while the text looks more “varied,” it can lose clarity, flow, or natural structure, sometimes making it harder to read than the original AI draft.

Traditional spinners focus on swapping words. The Assignment AI Humanizer tries to adjust sentence rhythm, tone, and complexity to mimic how people actually write.