The rise of artificial intelligence (AI) tools has begun to reshape many facets of academic research and communication.

As these tools are further integrated into scholarly workflows, questions arise about the extent to which AI-generated content is influencing academic literature.

Neuroscience, with its multidisciplinary overlap across biology, psychology, computer science, and medicine, is a compelling and linguistically complex area of academic literature.

Within neuroscience journal publications, abstracts play a critical role in sharing findings with a wide academic and clinical audience.

This makes neuroscience abstracts a key focal point for understanding shifts in scientific communication.

In this study, we analyze the prevalence of neuroscience-related abstracts classified as Likely AI among those published between 2018 and 2025.

By leveraging our proprietary Originality.ai AI detection tool to evaluate abstracts, we aim to quantify shifts in the use of generative AI tools within academic publishing.

This study focuses specifically on the impact of AI in neuroscience abstracts published between 2018 and 2025.

Our analysis is shaped by these central research questions:

By addressing these questions, this study sheds light on how generative AI is reshaping scholarly communication in the field of neuroscience.

Interested in learning about how AI is impacting other areas of academia? Read our analysis on abstracts in AI journals and AI climate change paper abstracts.

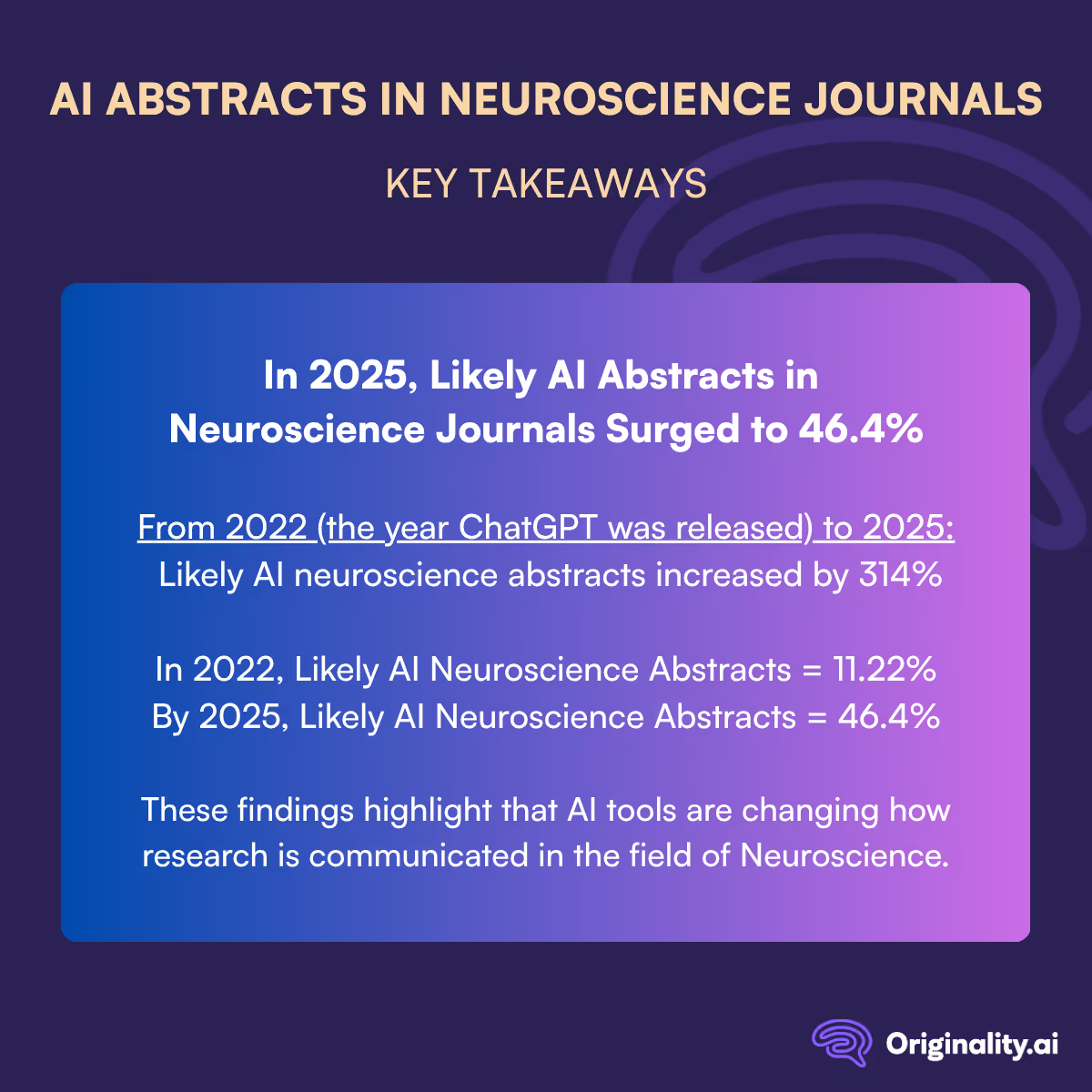

The prevalence of AI-generated abstracts in neuroscience-related journals has shown a clear upward trend over the period studied, from 2018 to 2025.

From 2022 to 2025, Likely AI neuroscience abstracts increased by 314%.

Why look at the timeframe of 2022 to 2025? ChatGPT launched in November 2022, dramatically increasing the popularity of AI and LLMs.

AI levels from 2022 to 2025 stand in notable contrast to the years studied before ChatGPT was launched (2018 to 2021), when Likely AI abstracts were consistently below 10%.

This exceptional growth highlights a transformative shift in scientific communication within the field of neuroscience, suggesting that AI-generated content is becoming normalized in academic publishing.

In 2025, just under half (46.4%) of the neuroscience abstracts analyzed were flagged as Likely AI.

This sharp rise is likely tied to the growing accessibility and performance of large language models and other generative technologies.
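To make the growth figure concrete, here is a minimal sketch of the arithmetic. It assumes the 314% refers to relative growth in the share of Likely AI abstracts, which the study does not spell out. The 2022 value it derives is implied by the two reported figures (314% and 46.4%), not a number reported in this study, and the function names are illustrative.

```python
def percent_increase(old: float, new: float) -> float:
    """Relative change from old to new, expressed in percent."""
    if old == 0:
        raise ValueError("percent increase is undefined for a zero baseline")
    return (new - old) / old * 100.0


def implied_baseline(new: float, increase_pct: float) -> float:
    """Baseline value that would yield `new` after growing by `increase_pct` percent."""
    return new / (1.0 + increase_pct / 100.0)


share_2025 = 46.4          # reported: % of 2025 abstracts flagged as Likely AI
reported_increase = 314.0  # reported: % increase from 2022 to 2025

# Implied (not reported) 2022 share, under the assumption stated above.
implied_2022 = implied_baseline(share_2025, reported_increase)
print(f"Implied 2022 share: {implied_2022:.1f}%")
print(f"Check: {percent_increase(implied_2022, share_2025):.0f}% increase")
```

Put another way, a 314% increase means the 2025 share is roughly 4.14 times the 2022 share.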

Neuroscience research requires high levels of precision, clarity, and domain-specific knowledge.

While AI tools can reduce linguistic barriers and improve efficiency, they also raise concerns, because AI can and does hallucinate, producing plausible-sounding but inaccurate statements.

Further, questions around the disclosure of AI use, the incorporation of AI detection into peer-review processes, and updates to editorial standards must be addressed as AI tools become increasingly integrated across industries.

This study offers a data-driven view of the evolving role of AI in scientific communication within neuroscience.

The findings reveal a 314% increase in AI-generated abstracts from 2022 to 2025, corresponding to the release of popular AI tools like ChatGPT and underscoring the rapid normalization of AI-assisted writing in this field.

Such a significant shift cannot be overlooked.

It calls for the development of clear policies around disclosure of AI use, tools for distinguishing between human- and AI-generated content, and new frameworks for understanding authorship and accountability in an era of hybrid scientific writing.

Wondering whether a post, abstract, or review you’re reading might be AI-generated? Use the Originality.ai AI detector to find out.


We examined the prevalence of AI-generated abstracts in neuroscience-related scientific literature from 2018 to 2025. Using academic metadata from OpenAlex and the Originality.ai detection tool, we collected and analyzed abstracts over multiple years.

Data Collection

Abstracts were retrieved via the OpenAlex API for each year from 2018 to 2025, filtered by the keyword “artificial intelligence.” Up to 500 non-empty abstracts were collected per year. A custom function reconstructed the original text from OpenAlex’s inverted index. Metadata such as title, journal, and publication date was preserved.
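The reconstruction step can be sketched as follows. OpenAlex stores each abstract as an inverted index, a mapping from each word to the positions where it occurs, so rebuilding the text means sorting words by position. This is a minimal sketch, not the study's actual function: the names `reconstruct_abstract` and `collect_abstracts` are illustrative, and the record fields used (`abstract_inverted_index`, `primary_location`, `publication_date`) follow the OpenAlex work schema as we understand it.

```python
from typing import Dict, Iterable, List, Optional


def reconstruct_abstract(inverted_index: Optional[Dict[str, List[int]]]) -> str:
    """Rebuild plain text from a {word: [positions]} inverted index."""
    if not inverted_index:
        return ""
    positioned = []
    for word, positions in inverted_index.items():
        for position in positions:
            positioned.append((position, word))
    positioned.sort()  # order words by their position in the abstract
    return " ".join(word for _, word in positioned)


def collect_abstracts(works: Iterable[dict], limit: int = 500) -> List[dict]:
    """Keep up to `limit` works with a non-empty abstract, preserving metadata."""
    collected = []
    for work in works:
        text = reconstruct_abstract(work.get("abstract_inverted_index"))
        if not text.strip():
            continue  # skip works without a usable abstract
        source = (work.get("primary_location") or {}).get("source") or {}
        collected.append({
            "title": work.get("title"),
            "journal": source.get("display_name"),
            "publication_date": work.get("publication_date"),
            "abstract": text,
        })
        if len(collected) >= limit:
            break
    return collected


# Toy record (invented for illustration, not real data).
example_index = {"AI": [0, 3], "detects": [1], "text": [2]}
print(reconstruct_abstract(example_index))
```

In a full pipeline, `works` would be the per-year results returned by the OpenAlex API; the network call is left out here so the sketch stays self-contained.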

AI Detection

Each abstract (min. 50 words) was scanned using Originality.ai, which returned a confidence score and binary classification for AI authorship. Scans were repeated (up to three times) in case of errors, with handling for timeouts and rate limits.
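The retry behavior described above might look something like the sketch below. It deliberately does not call the Originality.ai API: `scan_fn` is a stand-in for whatever client function performs the scan, `RateLimitError` is a hypothetical exception, and the status strings are invented for illustration. It also assumes "up to three times" means three attempts in total and uses exponential backoff, neither of which the methodology specifies.

```python
import time
from typing import Callable, Tuple

MIN_WORDS = 50      # minimum abstract length stated in the methodology
MAX_ATTEMPTS = 3    # assumption: three attempts in total


class RateLimitError(Exception):
    """Hypothetical error a detection client might raise when throttled."""


def scan_with_retries(
    text: str,
    scan_fn: Callable[[str], Tuple[float, bool]],
    max_attempts: int = MAX_ATTEMPTS,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Scan one abstract, retrying on timeouts and rate limits."""
    if len(text.split()) < MIN_WORDS:
        return {"ai_likely_score": None, "is_ai_generated": None,
                "scan_status": "skipped_too_short"}

    for attempt in range(1, max_attempts + 1):
        try:
            score, is_ai = scan_fn(text)
            return {"ai_likely_score": score, "is_ai_generated": is_ai,
                    "scan_status": "success"}
        except (TimeoutError, RateLimitError):
            if attempt < max_attempts:
                # Exponential backoff between attempts: 1s, then 2s.
                sleep(base_delay * 2 ** (attempt - 1))

    return {"ai_likely_score": None, "is_ai_generated": None,
            "scan_status": "failed"}


# Usage with a fake scanner that times out twice, then succeeds.
attempts = {"count": 0}


def fake_scan(text: str) -> Tuple[float, bool]:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TimeoutError("simulated timeout")
    return 0.9, True


sample = " ".join(["word"] * 60)
result = scan_with_retries(sample, fake_scan, sleep=lambda seconds: None)
print(result["scan_status"], attempts["count"])
```

Passing `sleep` as a parameter keeps the example fast to run and easy to test; a real run would use the default `time.sleep`.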

Output and Storage

Results were compiled into a master dataset using pandas and exported as a CSV. Fields included: year, title, abstract, publication_date, journal, ai_likely_score, is_ai_generated, and scan_status.
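As a rough illustration of the output step, the sketch below writes rows with the listed fields to CSV. The study used pandas; this example swaps in Python's standard `csv` module so it runs without third-party packages, and the sample row is an invented placeholder, not data from the study.

```python
import csv
import io
from typing import Iterable

# Column order follows the fields listed in the methodology.
FIELDS = [
    "year", "title", "abstract", "publication_date", "journal",
    "ai_likely_score", "is_ai_generated", "scan_status",
]


def rows_to_csv(rows: Iterable[dict]) -> str:
    """Serialize result rows to CSV text, one column per field."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS,
                            extrasaction="ignore", lineterminator="\n")
    writer.writeheader()
    for row in rows:
        # Missing fields are written as empty cells.
        writer.writerow({field: row.get(field, "") for field in FIELDS})
    return buffer.getvalue()


# Placeholder row for illustration only.
example_rows = [{
    "year": 2024,
    "title": "Example title",
    "abstract": "Example abstract text, with a comma.",
    "publication_date": "2024-01-15",
    "journal": "Example Journal",
    "ai_likely_score": 0.87,
    "is_ai_generated": True,
    "scan_status": "success",
}]

csv_text = rows_to_csv(example_rows)
print(csv_text)
```

With pandas, the equivalent would be building a `DataFrame` from the rows and calling `to_csv`.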

This approach enabled scalable tracking of AI-generated content in scientific abstracts, supporting further analysis and trend visualization.
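For the trend analysis, one plausible aggregation is the share of successfully scanned abstracts flagged as AI-generated in each year. The sketch below assumes rows shaped like the dataset fields above and a `scan_status` value of `"success"` for completed scans; that status string and the toy rows are assumptions made for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable


def yearly_ai_share(rows: Iterable[dict]) -> Dict[int, float]:
    """Percent of successfully scanned abstracts flagged as AI, per year."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for row in rows:
        if row.get("scan_status") != "success":
            continue  # failed or skipped scans do not count toward the share
        year = row["year"]
        totals[year] += 1
        if row["is_ai_generated"]:
            flagged[year] += 1
    return {year: 100.0 * flagged[year] / totals[year] for year in sorted(totals)}


# Toy rows (invented, not study data).
toy_rows = [
    {"year": 2021, "is_ai_generated": False, "scan_status": "success"},
    {"year": 2021, "is_ai_generated": False, "scan_status": "success"},
    {"year": 2025, "is_ai_generated": True, "scan_status": "success"},
    {"year": 2025, "is_ai_generated": False, "scan_status": "success"},
    {"year": 2025, "is_ai_generated": None, "scan_status": "failed"},
]

shares = yearly_ai_share(toy_rows)
print(shares)
```

Excluding failed scans from the denominator keeps errors from deflating a year's share; whether the study did the same is not stated.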

According to the 2024 study, “Students are using large language models and AI detectors can often detect their use,” Originality.ai is highly effective at identifying AI-generated and AI-modified text.