OpenAI released GPT-5 on Aug 7, 2025 and this study looks to understand if AI detectors remain accurate on GPT-5 generated content.

Additionally, we examine what makes GPT-5 unique in terms of writing. Not just is it still detectable but what are its unique features, does it overuse delve, em-dash etc?

Yes, GPT-5 content is able to be detected by Originality.ai at a 96.5% accuracy rate. Currently, GPT-5 content is less detectable than GPT-4o; however, that gap will close quickly as Originality.ai trains on more content from GPT-5.

99% Accurate for Rewritten Content based on a quick test of 100 samples

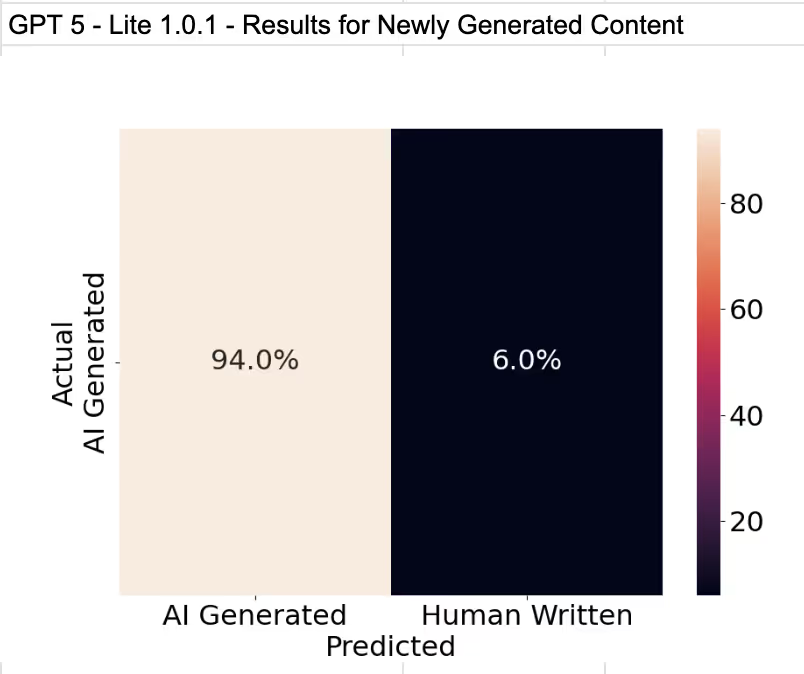

94% Accurate for Newly Generated GPT-5 Content based on a quick test of 100 samples

Method:

Results:

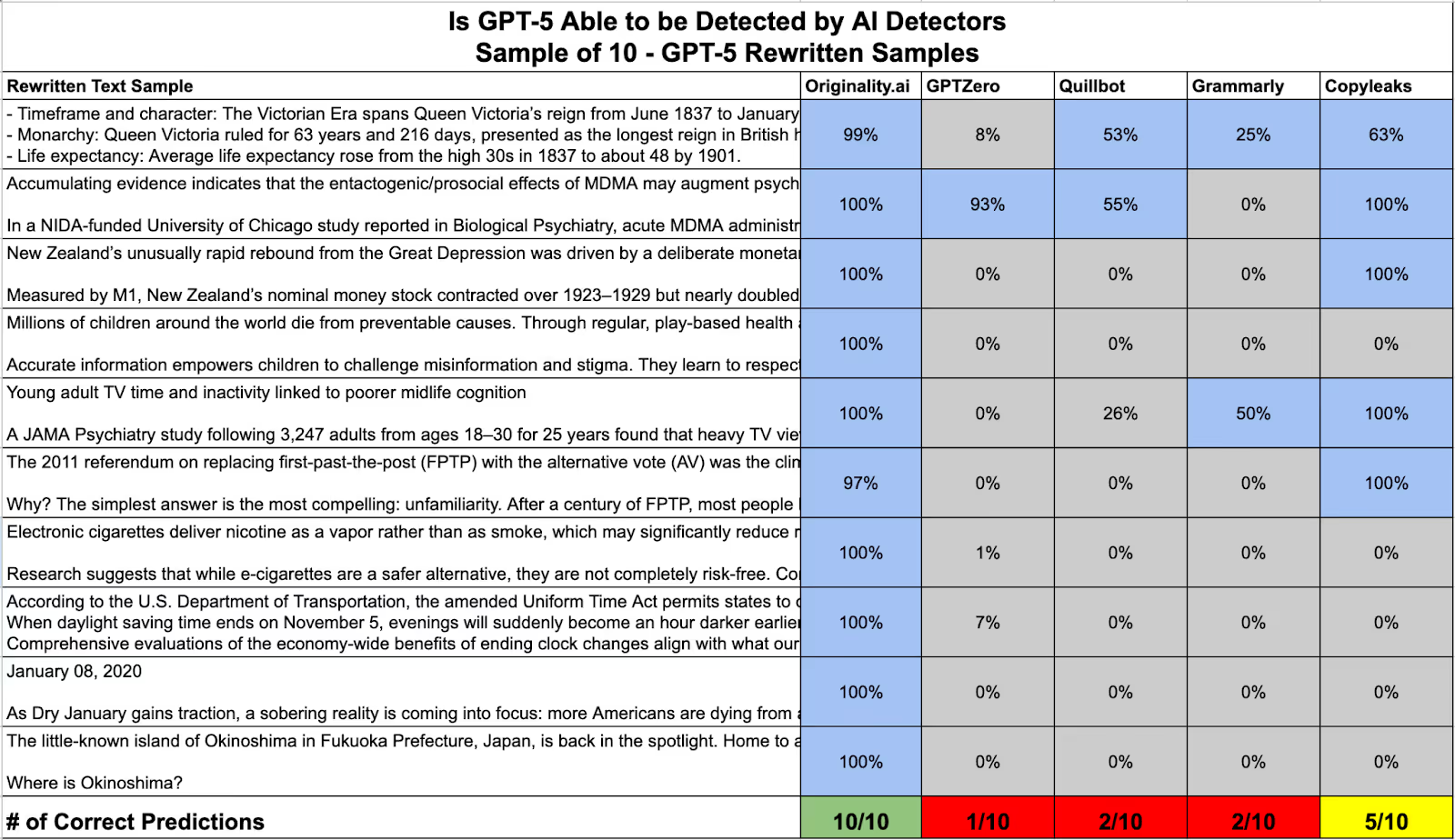

Can other detectors such as GPTZero, TurnitIn, CopyLeaks, Quillbot and Grammarly detect GPT-5 generated content?

Method:

The first 10 samples from the GPT-5 rewritten content are tested against multiple detectors.

Results:

Note - I expect the performance of all AI detectors to increase significantly over the coming weeks/months as models are updated.

Open AI says that GPT-5 is the “most capable writing collaborator yet”

Basically, what this means is that OpenAI says GPT-5 can…

Out of the 100 human-AI rewrites, none of the 100 human samples used any em-dash’s. However, the GPT-5 rewritten content included the em-dash in 67 of the articles.

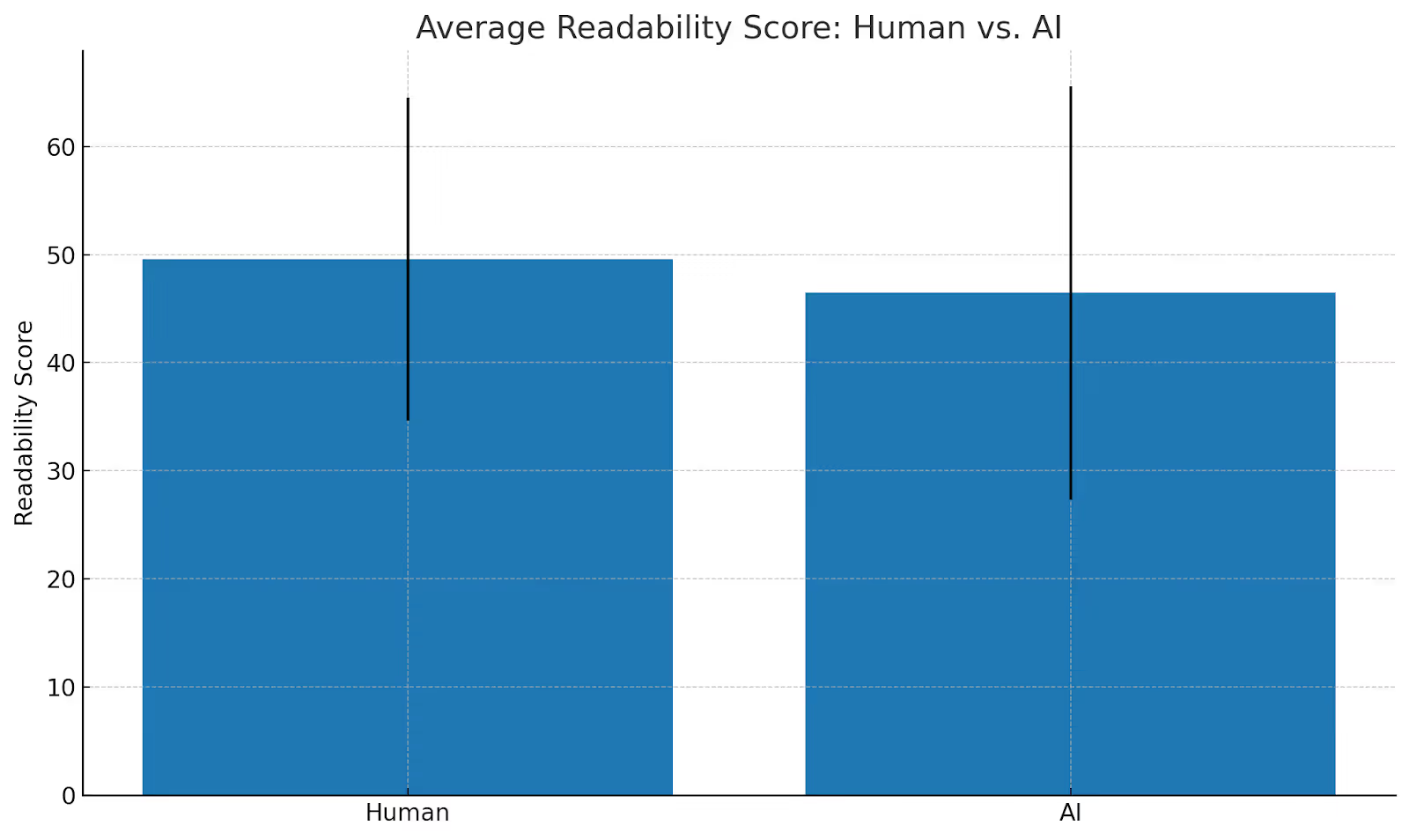

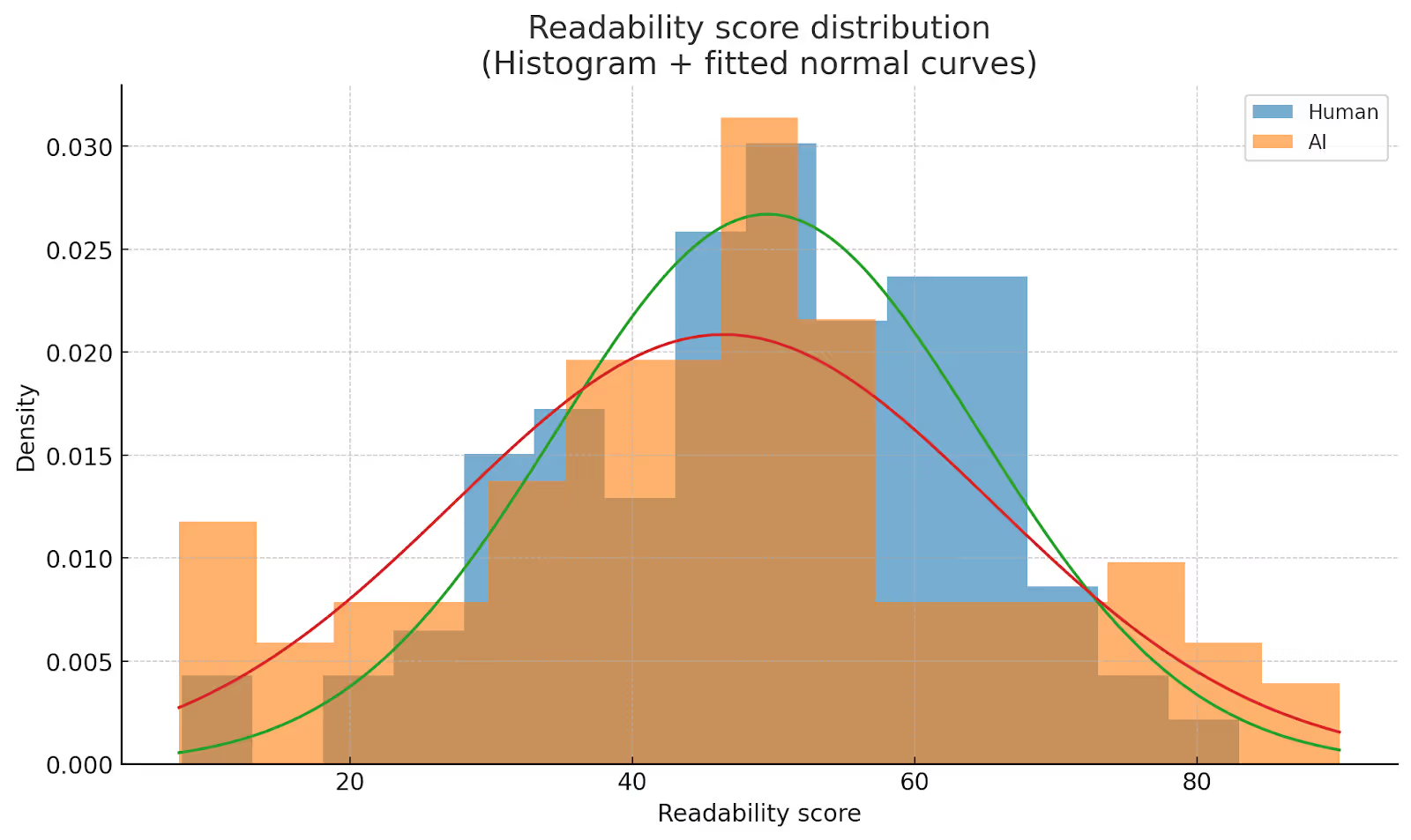

Previous models GPT-4o etc often rewrote content and the output of the content often clustered around similar Flesch Kincaid Readability scores.

GPT-5 is being positioned as a superior writing tool that is able to follow a specific style more consistently.

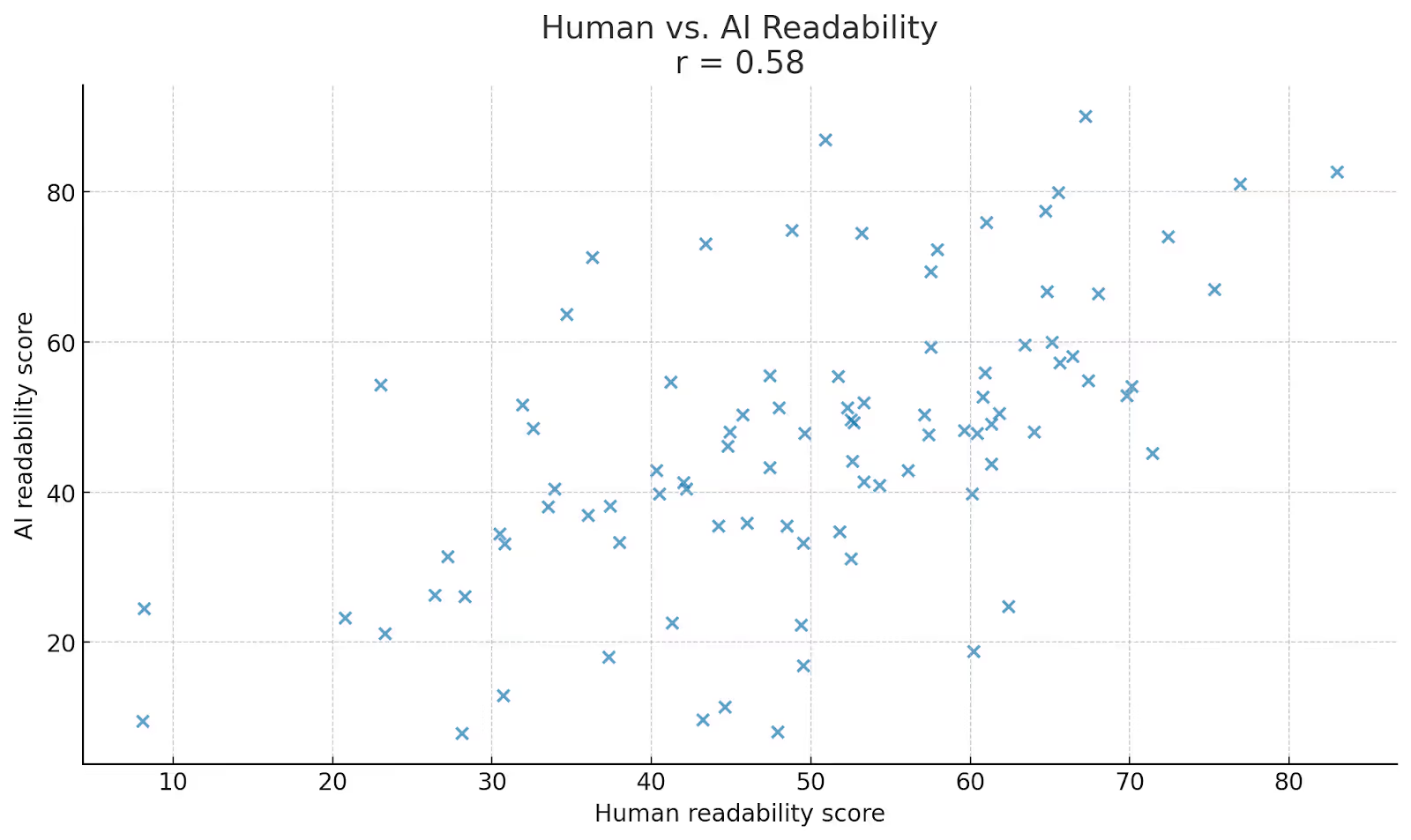

If that is the case it should show up in readability scores staying consistent with the human text it is being asked to re-write.

To test this we looked at dataset of Human content and AI content to see how consistent the readability scores were between the input and output using our Readability Checker.

Results:

GPT-5 mirrored the readability score of the human content

Average Flesch Kincaid Readability Score:

With more spread for AI written content (19.1 vs 14.9 for human written)

There is a clear, moderately strong positive relationship. Higher-readability human originals tend to yield higher-readability AI rewrites.

GPT-5 rewrites generally mirror the clarity of their human source material. In our 100-sample test, human passages averaged a Flesch–Kincaid score of 49.6, while GPT-5’s versions landed at 46.5—only about three points lower and still in the same readability band. The two sets of scores are moderately correlated (r ≈ 0.58), so a well-written original usually stays well-written after the AI pass. The main difference is consistency: AI outputs show a wider spread in scores (standard deviation 19 vs 15), meaning they sometimes swing from ultra-concise to slightly dense. Bottom line: GPT-5 tends to keep the readability level you give it.

GPT-5 content is detectable by Originality.ai but for now it makes AI detectors less accurate. We would expect all leading AI detectors to get more accurate in the coming weeks/months on the latest model release from GPT-5.

Yes - GPT-5 still loves to use the Em-Dash

GPT-5 mirrors the style/readability score of human writing more than previous models.

According to the 2024 study, “Students are using large language models and AI detectors can often detect their use,” Originality.ai is highly effective at identifying AI-generated and AI-modified text.