AI detection scores — what are they and what do they mean?

In this guide, we’ll look at one aspect of how AI detectors work: the AI score, and what that score means.

In brief, an AI detection score (also known as an AI score or AI confidence score) is the score an AI checker returns after scanning text. It indicates how confident the detector is that the text was AI-generated or human-written.

Keep in mind that how AI detection scores are determined and presented can vary from one brand, AI detector, or type of AI detection software to another.

Here, we’ll focus on the industry-leading Originality.ai AI Checker and what the Originality.ai AI detector score means.

Maintain transparency in the age of AI. We offer a free AI Checker so you can try out the most accurate AI detector.

After text is entered into the checker, our AI detector returns the probability that the content is AI-generated or Original (human-written).

This returns an AI confidence score.

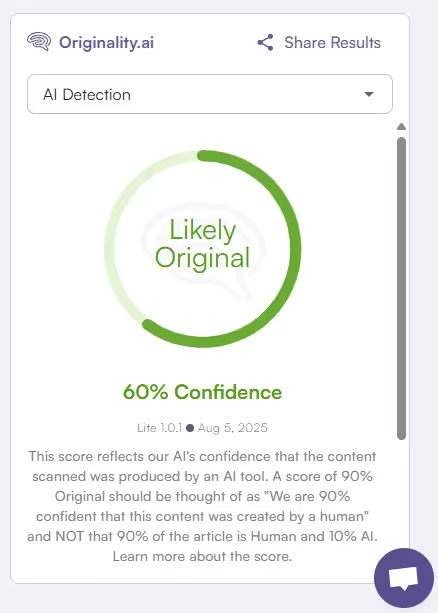

Let’s break down what that could look like:

60% Original and 40% AI means the model thinks the content is Original (human-written) and is 60% confident in its prediction.

In other words, the AI score is a prediction paired with a confidence level.

This AI score DOES NOT mean that 60% of it was Original and 40% was AI-generated.

If you wrote 100% of the copy yourself and you receive a 60% Original score, that is not a false positive.

Originality.ai’s AI detector has correctly predicted that the content was human-generated with 60% confidence.
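The reading rule above can be written down as a small Python sketch. It assumes the checker reports two percentages for the same document that sum to 100. The function name `interpret_score` and the `ScoreReading` type are hypothetical and are not part of any Originality.ai API.

```python
from dataclasses import dataclass


@dataclass
class ScoreReading:
    label: str         # the class the model predicts: "Original" or "AI"
    confidence: float  # how confident the model is in that prediction (0.0 to 1.0)


def interpret_score(original_pct: float, ai_pct: float) -> ScoreReading:
    """Turn a two-part score (e.g. 60% Original / 40% AI) into a prediction.

    The percentages are treated as the model's confidence in each class for the
    whole document. They do NOT say how much of the text was AI-written.
    """
    if abs(original_pct + ai_pct - 100.0) > 1e-6:
        raise ValueError("expected the two percentages to sum to 100")
    # Ties are reported as Original here, which is an arbitrary choice.
    if original_pct >= ai_pct:
        return ScoreReading("Original", original_pct / 100.0)
    return ScoreReading("AI", ai_pct / 100.0)


reading = interpret_score(60, 40)
print(reading.label, reading.confidence)  # Original 0.6
```

For a fully human-written article, this 60/40 reading is therefore a correct prediction made with moderate confidence, not a partial AI finding.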

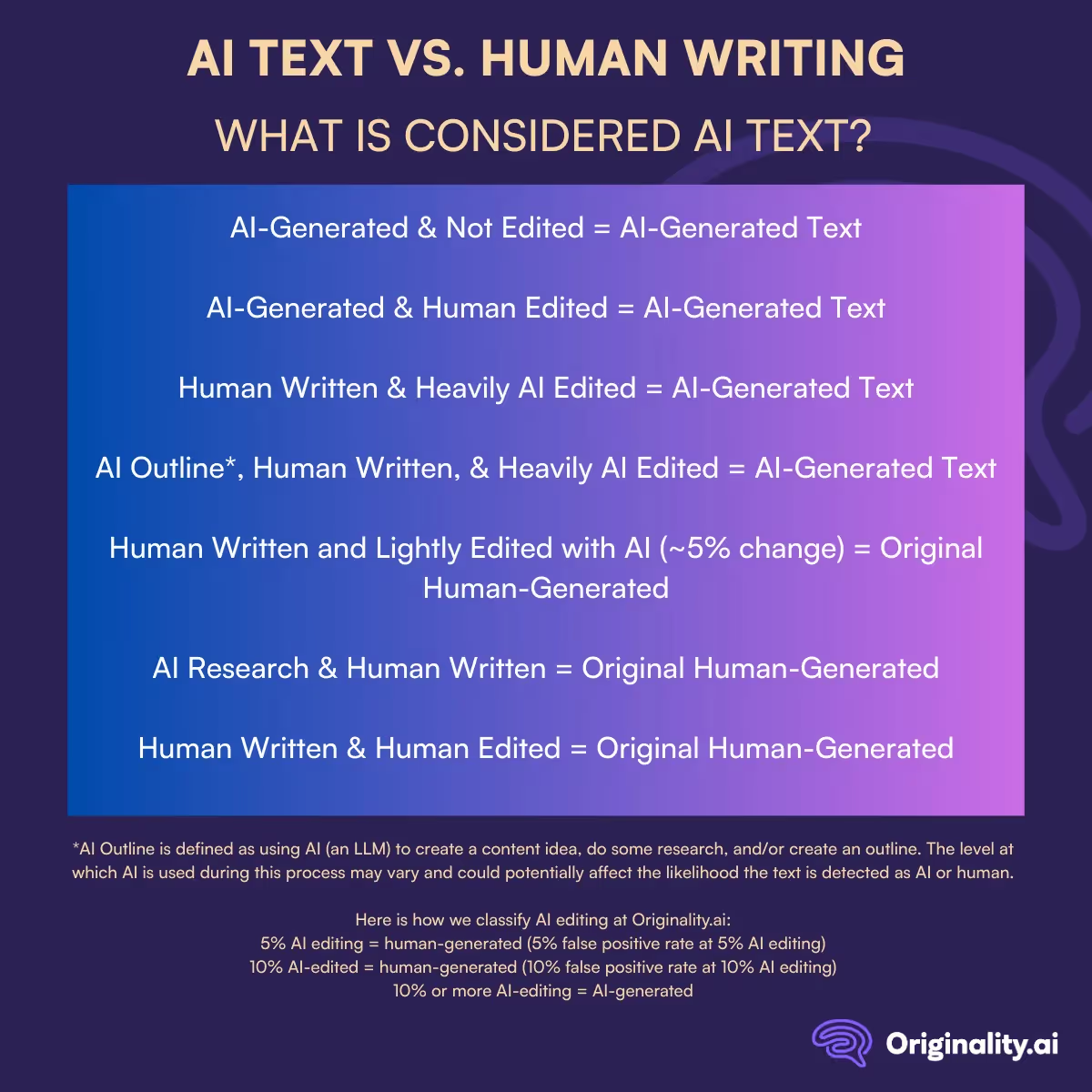

Here is what we think should and should not be classified as AI-generated content:

*AI Outline refers to using AI (an LLM) to come up with a content idea, do some research, and/or create an outline. The extent to which AI is used during this process varies and may affect whether the text is detected as AI or human.

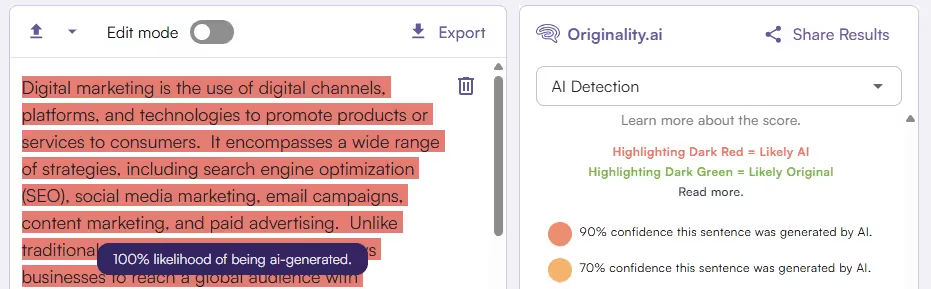

Once the Originality scan is complete, you can dig deeper beyond the AI score.

We provide sentence-level highlighting that shows which parts of the text the Originality.ai AI Detector found most likely to be AI-generated.

To demonstrate the in-text highlighting, let’s take a look at an AI-generated sample of text.

For example, we prompted ChatGPT to generate 100 words on digital marketing:

When we ran that text through the Originality.ai AI Detector, the ChatGPT-generated text was highlighted in dark red, indicating that it is Likely AI.
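As a rough illustration of how sentence-level highlighting could work, the Python sketch below splits text into sentences and maps a per-sentence AI probability to a highlight band. Only the pairing of dark red with Likely AI comes from this guide. The band thresholds, the other labels, the naive sentence splitter, and the example probabilities are all assumptions, not Originality.ai's actual settings.

```python
import re
from typing import List, Tuple

# Hypothetical bands: (lower bound of AI probability, label). Only the
# "Likely AI" / dark red pairing comes from the article; the rest is assumed.
BANDS: List[Tuple[float, str]] = [
    (0.80, "Likely AI (dark red)"),
    (0.50, "Possibly AI (light red)"),
    (0.00, "Likely Original (no highlight)"),
]


def split_sentences(text: str) -> List[str]:
    # Naive splitter: break after ".", "!" or "?" when followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def band_for(ai_probability: float) -> str:
    # Return the first band whose lower bound the probability reaches.
    for lower_bound, label in BANDS:
        if ai_probability >= lower_bound:
            return label
    return BANDS[-1][1]


def highlight(sentences: List[str], ai_probs: List[float]) -> List[Tuple[str, str]]:
    if len(sentences) != len(ai_probs):
        raise ValueError("need one probability per sentence")
    return [(s, band_for(p)) for s, p in zip(sentences, ai_probs)]


text = "Digital marketing is evolving fast. I wrote this line myself!"
sentences = split_sentences(text)
# In practice the per-sentence probabilities would come from the detector;
# these values are placeholders for illustration only.
for sentence, band in highlight(sentences, [0.93, 0.12]):
    print(f"{band}: {sentence}")
```

Keeping the per-sentence bands separate from the document-level score reflects the point above: the highlighting shows where the model sees AI patterns, while the overall score is a single prediction about the whole text.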

Note: The patterns that our AI picks up on cannot be translated into a simple list of ‘here are x reasons why your content is identified as AI-generated’.

Wondering how AI detection works? Our AI detector takes a more holistic and far more intensive approach to determining whether an article was AI-generated than any other tool we have seen.

For more insights, read our in-depth article: How Does AI Content Detection Work?

Our AI was trained on text from a large number of the most popular LLMs, including the GPT models behind ChatGPT (such as GPT-4o and GPT-5), Claude (Claude Sonnet 4 and Claude Opus 4), and Llama. It can accurately identify patterns in AI content across an entire article.

Our approach analyzes an article far more intensively and accurately than other free AI detection tools, which rely on one of the following:

A linear probabilistic model: These tools use one of OpenAI’s language models to predict what the next word should be. If enough of the words in a piece of content match what the model would predict, the content is flagged as likely AI. This approach is easy to fool by changing a few words.

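Burstiness and Perplexity: Tools based on “burstiness” and “perplexity” rely on the observation that AI content tends to be consistent, without much variation. Perplexity measures how predictable a text is to a language model, and burstiness measures how much that predictability varies from sentence to sentence. These tools are easy to trick, and they can also produce many false positives.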
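To make the two simpler approaches above concrete, here is a toy Python sketch of both. It is not how Originality.ai's detector works. It assumes a tiny bigram model trained on a few words as a stand-in for the large language model a real tool would use, and it measures burstiness as the standard deviation of per-sentence perplexity, which is one common proxy rather than a fixed definition. All class and function names are hypothetical.

```python
import math
import statistics
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def tokenize(text: str) -> List[str]:
    # Lowercase and split on whitespace; real tools use subword tokenizers.
    return text.lower().split()


class BigramModel:
    """Toy next-word model standing in for a real LLM (an assumption for illustration)."""

    def __init__(self, corpus: str) -> None:
        tokens = tokenize(corpus)
        self.vocab_size = len(set(tokens))
        self.counts: Dict[str, Counter] = defaultdict(Counter)
        for prev, nxt in zip(tokens, tokens[1:]):
            self.counts[prev][nxt] += 1

    def most_likely_next(self, prev: str) -> Optional[str]:
        following = self.counts.get(prev)
        return following.most_common(1)[0][0] if following else None

    def prob(self, prev: str, nxt: str) -> float:
        # Add-one smoothing; the extra +1 reserves probability for unseen words.
        following = self.counts.get(prev, Counter())
        return (following[nxt] + 1) / (sum(following.values()) + self.vocab_size + 1)


def top1_match_rate(model: BigramModel, text: str) -> float:
    """Approach 1: share of words that equal the model's single most likely next word."""
    tokens = tokenize(text)
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        return 0.0
    hits = sum(1 for prev, nxt in pairs if model.most_likely_next(prev) == nxt)
    return hits / len(pairs)


def perplexity(model: BigramModel, sentence: str) -> float:
    """exp of the mean negative log-probability of each word given the one before it."""
    tokens = tokenize(sentence)
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        raise ValueError("need at least two words to score a sentence")
    nll = -sum(math.log(model.prob(p, n)) for p, n in pairs) / len(pairs)
    return math.exp(nll)


def burstiness(model: BigramModel, sentences: List[str]) -> float:
    """Approach 2 (one common proxy): spread of perplexity across sentences."""
    scores = [perplexity(model, s) for s in sentences if len(tokenize(s)) >= 2]
    return statistics.pstdev(scores) if scores else 0.0


corpus = "the cat sat on the mat . the cat ate the fish ."
model = BigramModel(corpus)
print(top1_match_rate(model, "the cat sat on the mat"))
print(perplexity(model, "the cat sat on the mat") < perplexity(model, "fish on mat the sat cat"))
print(burstiness(model, ["the cat sat on the mat", "fish on mat the sat cat"]))
```

Both scores depend only on surface predictability. That is why swapping a few words can shift them noticeably, and why uniformly written human text can score as "AI-like."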

Our AI detector has demonstrated exceptional accuracy in both internal tests and multiple third-party studies:

Is the score perfect?

At Originality.ai, our machine learning engineers are always working to improve model accuracy and running tests on the latest AI models (such as GPT-5).

However, no AI detection tool is perfect. The tool can make incorrect predictions, such as classifying human-written content as AI-generated. This is known as a false positive (more on that later in this guide).

Originality.ai provides distinct models that are tuned to meet the needs of specific use cases.

The AI detection models that are currently available include:

Lite

Turbo

Academic

Multi Language

Read more about each model here

False positives are painful when they occur, and we are continually working to improve our models and release new ones that drive down our false positive rate.

A false positive occurs when an AI detector thinks something was AI-generated when, in fact, it was written by a human.
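As a formula, a detector's false positive rate is the number of human-written documents it flags as AI divided by the total number of human-written documents it checks: FP / (FP + TN). The Python sketch below computes this rate from labeled results. The function name and the sample labels are hypothetical placeholders, not real benchmark data.

```python
from typing import Iterable, Tuple


def false_positive_rate(results: Iterable[Tuple[bool, bool]]) -> float:
    """Share of human-written documents that were flagged as AI.

    Each item is (actually_ai, predicted_ai). Only documents that are truly
    human-written (actually_ai is False) can produce false positives.
    """
    false_pos = true_neg = 0
    for actually_ai, predicted_ai in results:
        if not actually_ai:
            if predicted_ai:
                false_pos += 1
            else:
                true_neg += 1
    human_total = false_pos + true_neg
    if human_total == 0:
        raise ValueError("no human-written documents in the sample")
    return false_pos / human_total


# Placeholder labels for illustration only, not real results.
sample = [(False, False), (False, True), (False, False), (False, False), (True, True)]
print(false_positive_rate(sample))  # 0.25
```

AI-generated documents are excluded from the denominator. A detector's false positive rate therefore depends only on how it treats genuinely human-written text.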

We offer a free Chrome extension that transparently shows the writing process behind a document, so you can see whether it was truly human-written or AI-generated.

We appreciate the valid question, “Why was my article identified as AI?” Below are resources on how to prevent and reduce the risk of false positives.

False positive rates vary by model and by company.

AI detection and plagiarism checking are not the same thing.

A Plagiarism Checker provides hard proof that plagiarism occurred, while an AI Detector provides the probability that a piece of content was AI or human-generated.

Here’s a quick look at some of the differences between plagiarism checking and AI detection:

Plagiarism checking and AI detection should not be approached the same way. For instance, a text might be AI-generated but not plagiarized. Conversely, a plagiarized article might be human-written.
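To illustrate why a plagiarism match counts as hard proof while an AI score is only a probability, the Python sketch below finds word sequences that appear verbatim in both a candidate text and a source. The five-word window and the function names are assumptions for illustration. Real plagiarism checkers compare against large indexes of published content and use more robust matching.

```python
from typing import List, Set, Tuple


def word_ngrams(text: str, n: int = 5) -> Set[Tuple[str, ...]]:
    # All runs of n consecutive lowercase words; empty if the text is shorter than n.
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def shared_passages(candidate: str, source: str, n: int = 5) -> List[str]:
    """Return every n-word sequence that appears verbatim in both texts."""
    overlap = word_ngrams(candidate, n) & word_ngrams(source, n)
    return sorted(" ".join(gram) for gram in overlap)


source = "Content marketing builds trust with your audience over time"
candidate = "We think content marketing builds trust with your audience quickly"
print(shared_passages(candidate, source))
```

Each returned passage can be shown side by side with its source, which is the kind of evidence an AI score cannot provide.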

In the example scan below, the article was both human-written and plagiarism-free:

Read more about the Originality.ai Plagiarism Checker in our Plagiarism Accuracy Study.
