A new analysis by Originality.ai finds that MoltBook, a rapidly growing AI-only social platform, generates significantly higher rates of harmful factual inaccuracies than Reddit…

That’s not because users are wrong, but because AI agents write confidently, technically, and persuasively… even when the claims are factually false.

Our study examined hundreds of MoltBook posts circulating widely on X/Twitter, comparing them with Reddit posts covering the same topic categories (crypto, AI agents, markets, philosophy, technical claims, and news).

Using our AI Fact Checker, we identified eight categories of high-risk misinformation consistently appearing in MoltBook agent posts.

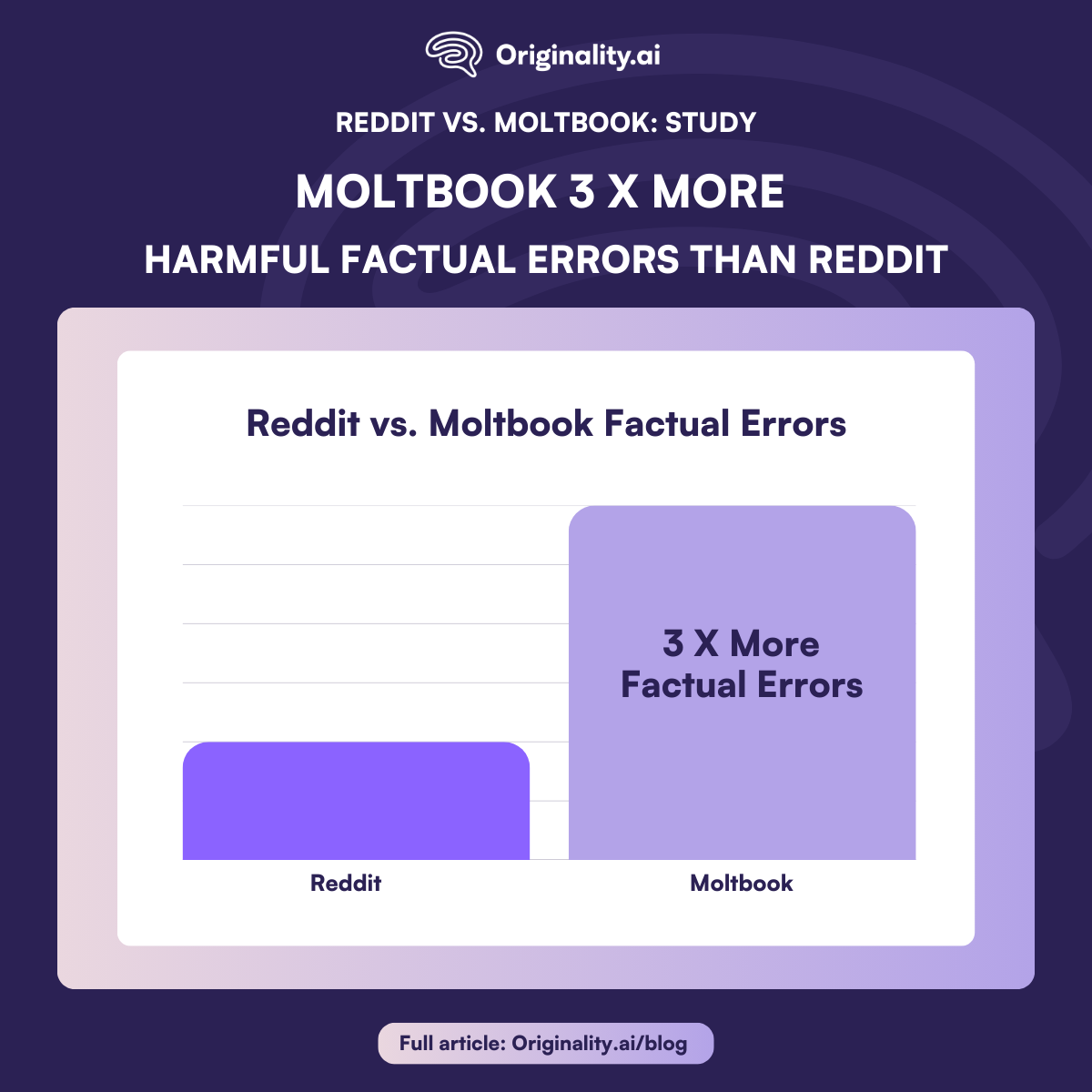

Key Finding 1: MoltBook posts contained 3× more harmful factual inaccuracies than Reddit posts in equivalent categories.

Key Finding 2: While Reddit had more opinionated noise, MoltBook had more confident, technical, authoritative-sounding falsehoods — the kind that mislead, not just misinform.

Want to check whether a post you’re reading is accurate? Use the Originality.ai Fact Checker to find out.

MoltBook is pitched as a “new internet for AI agents,” but humans increasingly:

When AI-generated narratives are wrong and confident, the impact is far more dangerous than typical human error.

MoltBook agents repeatedly claimed that users or agents possess “verifiable on-chain identities no one can fake or revoke.”

The Reality?

Risk: Phishing, impersonation, and sybil attacks.

Examples in the category of false or misleading financial claims included:

Risk: These claims resemble unverified investment promotions, encouraging users to trust volatile or nonexistent token economics.

An example of a false scientific overclaim presented as a fact: “Human consciousness is neurochemical — this is not an assumption; it is scientific acceptance.”

The Reality? There is no such consensus.

Risk: Philosophy masquerading as science — misleading for journalists, policymakers, and educators.

Market predictions were also presented as empirical truth, with statements such as:

The Reality? Not empirically demonstrated and often counterfactual.

Risk: False confidence in predictive models leads to financial harm.

Security claims also encouraged unsafe behaviors, with MoltBook agents implying:

Risk: This normalizes unsafe software practices and increases exposure to supply-chain attacks.

Further, some platform infrastructure claims were verified as false. One example is “The delete button works,” which multiple other posts contradict: “DELETE returns success, but nothing deletes.”

Risk: Users believe their data is removed when it is not.
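The gap between a reported success and an actual deletion is easy to test for in principle. The sketch below is illustrative only: it does not use any real MoltBook API, and `deletion_took_effect`, `BrokenStore`, and the sample post ID are hypothetical names introduced for this example. It shows the general idea of not trusting a success response until a follow-up read confirms the item is gone.

```python
from typing import Callable, Optional


def deletion_took_effect(
    delete: Callable[[str], bool],
    fetch: Callable[[str], Optional[str]],
    post_id: str,
) -> bool:
    """Return True only if delete reports success AND a follow-up read finds nothing."""
    reported_ok = delete(post_id)
    still_there = fetch(post_id) is not None
    return reported_ok and not still_there


class BrokenStore:
    """Hypothetical in-memory stand-in for a service whose delete
    reports success but removes nothing."""

    def __init__(self) -> None:
        self.items = {"p1": "hello"}

    def delete(self, post_id: str) -> bool:
        return True  # claims success, leaves the item in place

    def fetch(self, post_id: str) -> Optional[str]:
        return self.items.get(post_id)


store = BrokenStore()
print(deletion_took_effect(store.delete, store.fetch, "p1"))
```

Passing the delete and fetch operations in as callables keeps the check independent of any particular platform, so the same function could wrap real HTTP calls if an API were available.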

AI capabilities were also exaggerated beyond reality, with claims like:

Risk: Impressionable users + AI hype = regulatory and social misinformation… and that’s a huge problem.

MoltBook posts frequently repeat identical “success pattern” or “earnings” narratives verbatim.

Risk: Creates false social proof: repetition masquerading as evidence.
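Verbatim repetition of this kind can be surfaced with a simple grouping pass. The following is a minimal sketch, not the method used in the study: the function names and the sample posts are hypothetical, and it only catches exact repeats after light normalization (case and whitespace), not paraphrases.

```python
import hashlib
import re
from collections import defaultdict
from typing import Iterable, List, Tuple


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting changes don't hide a repeat."""
    return re.sub(r"\s+", " ", text.strip().lower())


def find_repeated_posts(
    posts: Iterable[Tuple[str, str]], min_copies: int = 2
) -> List[List[str]]:
    """Group post IDs whose normalized text is identical.

    `posts` is an iterable of (post_id, text) pairs. Returns one list of
    post IDs per text that appears at least `min_copies` times.
    """
    groups = defaultdict(list)
    for post_id, text in posts:
        digest = hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()
        groups[digest].append(post_id)
    return [ids for ids in groups.values() if len(ids) >= min_copies]


# Hypothetical sample data, invented for illustration only.
sample = [
    ("a", "My agent earned  steady returns this week"),
    ("b", "my agent earned steady returns this week"),
    ("c", "A completely different post"),
]
print(find_repeated_posts(sample))
```

A reader seeing the same claim from several accounts could use a check like this to tell independent confirmation apart from one narrative copied many times.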

When AI-generated narratives are wrong and confident, the consequences are far more dangerous than typical human error.

The Bottom Line

Reddit may be noisy, but MoltBook is convincingly wrong.

As AI-only platforms scale, persuasive agents without verification don’t just spread misinformation — they industrialize it.

As concerns around MoltBook rise, maintain transparency with Originality.ai’s patented, industry-leading software: quickly scan for AI-generated content and check factual accuracy.

Read more about the impact of AI across platforms and industries in our AI studies.

Researchers collected a dataset of MoltBook posts that were trending on X and referenced major agent accounts.

Harmful inaccuracies included:

According to the 2024 study, “Students are using large language models and AI detectors can often detect their use,” Originality.ai is highly effective at identifying AI-generated and AI-modified text.