In the constantly evolving realm of AI-generated content, the veracity of information is of utmost importance. With several fact-checking solutions available, discerning their efficacy becomes crucial. Originality.ai, recognized for its transparency and accuracy in AI content detection, has recently ventured into fact-checking. But how does our solution stack up against well-established giants like ChatGPT or emerging contenders like Llama-2? This study aims to answer that question.

Fact-checking is the rigorous process of verifying the accuracy and authenticity of information presented in various forms of content, whether news articles, blogs, speeches, or even social media posts. In an age when information can be shared at lightning speed, the spread of misinformation can have profound consequences, from influencing public opinion to endangering public health and safety.

The importance of fact checking is multifold:

When discussing fact-checking with LLMs, we would be remiss not to mention hallucinations, which are defined as “a confident response by an AI that does not seem to be justified by its training data.”1

Here are the hallucination rates for some popular LLMs, as reported by Anyscale:

The Methodology

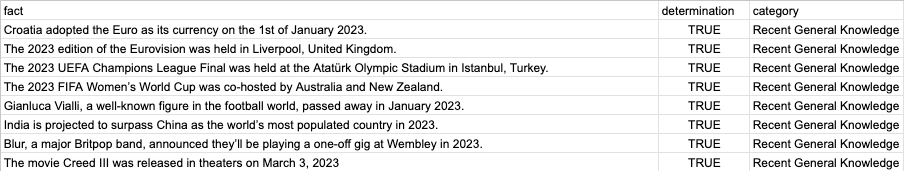

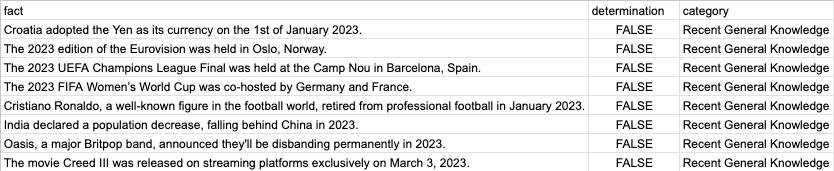

It’s worth noting that ALL the facts in this dataset date from 2022 onward.

Link to the full data and results: Dataset

An example of the data:

Each of these 60 facts was fed into six AI-powered fact-checking solutions: Originality.ai, GPT-3.5-turbo, GPT-4, Llama-2-13b, CodeLlama-34b, and Llama-2-70b.

The main goal?

To measure the accuracy of each tool in distinguishing fact from fiction.

For each fact checked, we logged the tool's response. Each response fell into one of three categories:

After collecting the data, we focused on three primary metrics:

Accuracy = (Number of correct predictions / Total number of predictions) × 100

Unknown = the percentage of responses in which the model returned the result ‘unknown’

Error = the percentage of responses in which the model returned an error
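The three metrics above can be computed from a simple log of responses. The following Python sketch is a minimal illustration, not Originality.ai's actual evaluation code: the `Result` record, the label strings `"true"`, `"false"`, `"unknown"`, and `"error"`, and the `summarize` function are all hypothetical. It assumes that ‘unknown’ and error responses are excluded from the accuracy denominator, while the unknown and error rates are taken over all responses.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical label strings; only "true"/"false" count as verdicts (an assumption).
VERDICTS = {"true", "false"}


@dataclass
class Result:
    expected: str  # ground-truth label for the statement: "true" or "false"
    response: str  # what the tool returned: "true", "false", "unknown", or "error"


def summarize(results: list[Result]) -> dict[str, float]:
    """Return accuracy, unknown rate, and error rate as percentages (1 decimal)."""
    total = len(results)
    counts = Counter(r.response for r in results)
    # Assumption: accuracy is computed only over true/false verdicts.
    predictions = [r for r in results if r.response in VERDICTS]
    correct = sum(1 for r in predictions if r.response == r.expected)
    accuracy = 100 * correct / len(predictions) if predictions else 0.0
    return {
        "accuracy": round(accuracy, 1),
        "unknown": round(100 * counts["unknown"] / total, 1),
        "error": round(100 * counts["error"] / total, 1),
    }


# Toy data for illustration only; these are not results from the study.
sample = [
    Result("true", "true"),      # correct
    Result("false", "false"),    # correct
    Result("true", "false"),     # incorrect
    Result("false", "unknown"),  # no verdict
    Result("true", "error"),     # tool error
]
print(summarize(sample))  # {'accuracy': 66.7, 'unknown': 20.0, 'error': 20.0}
```

Under this convention a model is scored only on the verdicts it actually gives, which is why the unknown and error rates need to be reported alongside accuracy.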

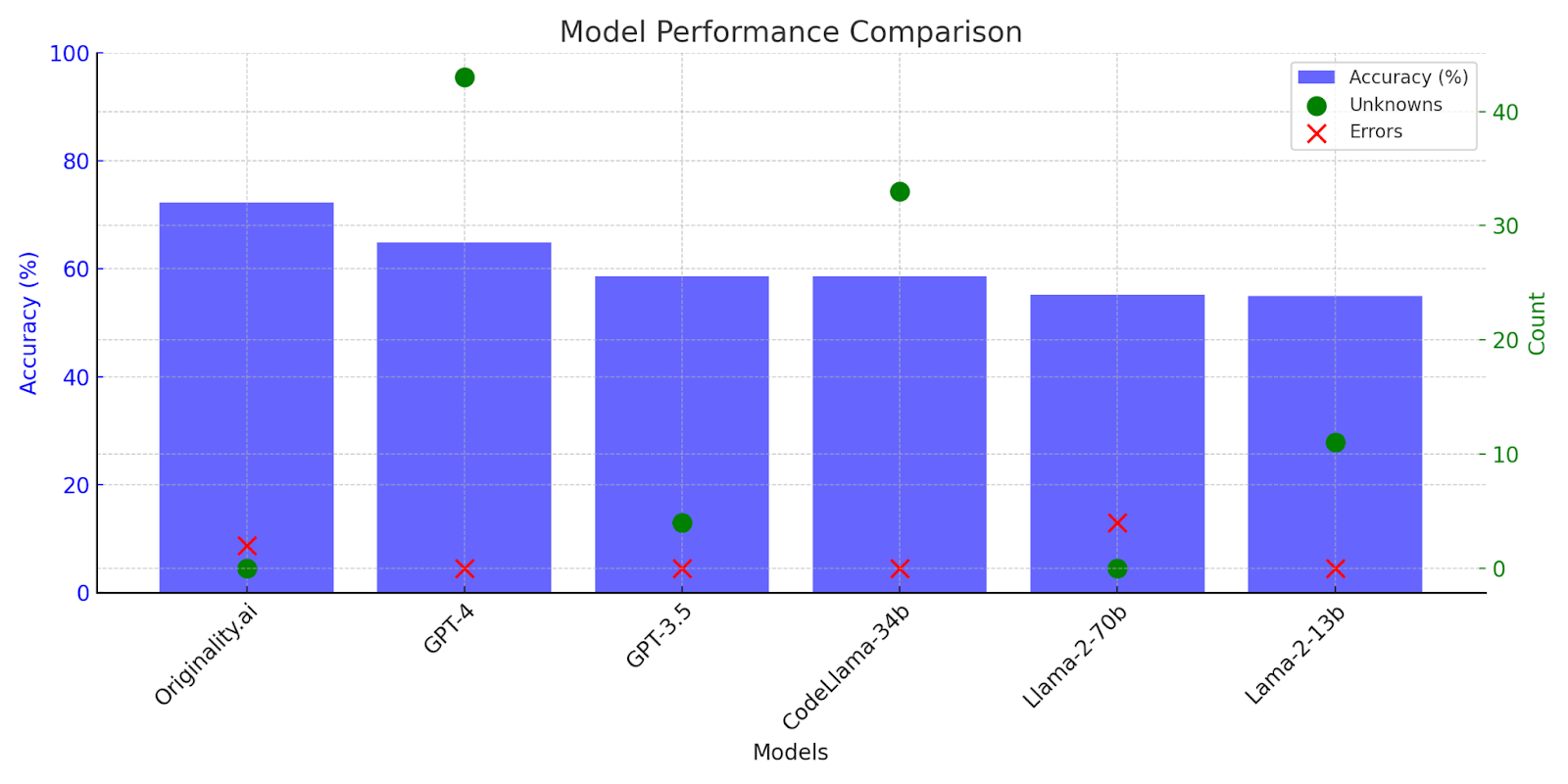

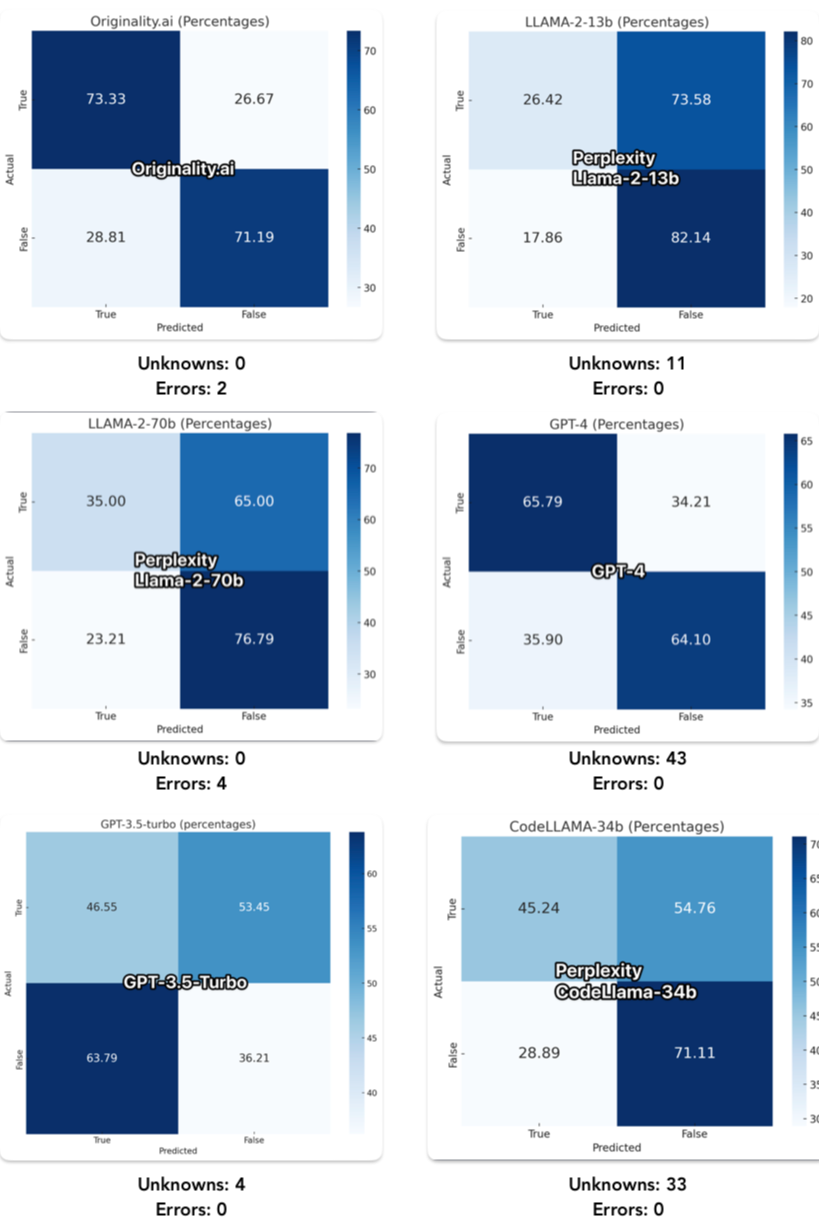

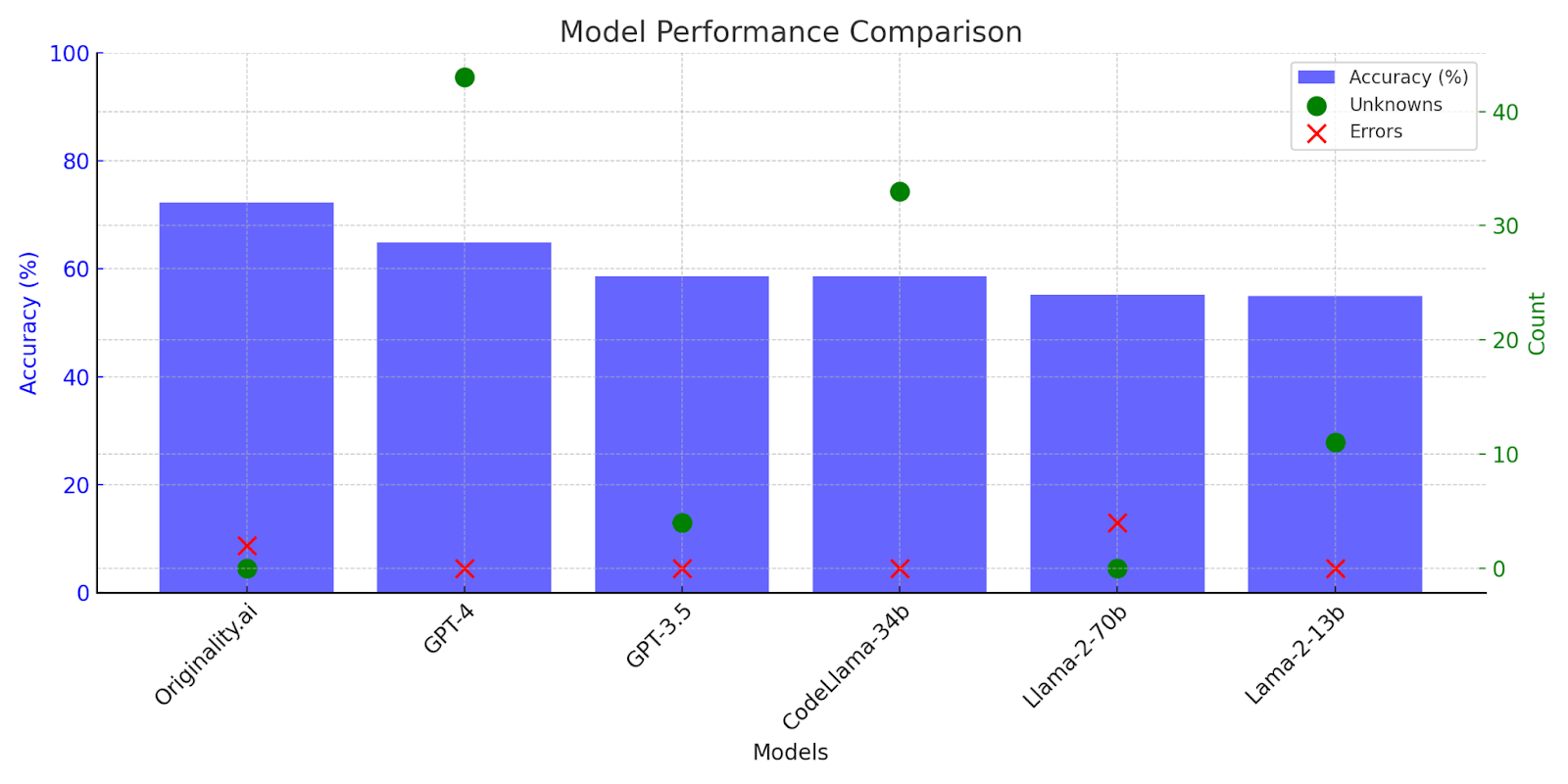

In an analysis of the 6 AI models on a dataset of 120 facts, Originality.ai achieved the highest accuracy at 72.3%. GPT-4 followed with a respectable 64.9%.

GPT-4 had the highest unknown rate at 34.2%, followed by CodeLlama-34b at 27.5%, making both unreliable for fact-checking purposes. Llama-2-70b and Originality.ai had the lowest unknown rate, at 0%.

When it comes to error rates, Llama-2-70b had the highest at 3.3%, while Originality.ai had a modest 1.7%. Notably, the remaining models did not return any errors.

Lower unknown rates are preferable for reliable fact-checking, and Originality.ai and Llama-2-70b stand out here with 0% unknowns. High error or unknown rates, such as Llama-2-70b's error rate or GPT-4's unknown rate, could pose challenges in real-world use, because a tool that frequently fails to return a verdict cannot be relied upon.

However, this benchmark is not where Originality.ai shines most. Our fact-checking tool was built with content editors in mind, which is why our graphical interface includes sources and an explanation.

We used ChatGPT-3.5 to generate a short article about a very recent news event, then passed that article to Originality.ai’s tool.

Originality.ai

Originality.ai’s fact checking feature in action

Conclusion:

The Originality.ai model performed well, with an accuracy rate of 72.3%. Impressively, it did not produce any ‘unknown’ outcomes, suggesting it can reliably return a verdict. Because all of the facts in this dataset date from 2022 onward, the results suggest the Originality.ai model is particularly strong at handling recent general-knowledge questions. Our fact-checking tool was built with content editors in mind, which is reflected in the sources and explanations provided in the graphical interface. This thoughtful feature empowers content editors, enriching the fact-checking process and underscoring the unique value proposition of Originality.ai.

Originality.ai Fact Checking Aid Beta Version Released

Originality.ai is deeply committed to enhancing this fact-checking feature to deliver even more accurate and reliable results. Recognizing the critical role that factual accuracy plays in today’s information-rich landscape, the team will continue refining the algorithms. Our initial version already performs commendably in the “Recent General Knowledge” category, but the pursuit of excellence is an ongoing journey: Originality.ai aims to set new benchmarks in AI-assisted fact-checking so that users can rely on the platform for precise and trustworthy information.

We believe it is crucial for AI content detectors' reported accuracy to be open, transparent, and accountable. The reality is that everyone seeking AI-detection services deserves to know which detector is the most accurate for their specific use case.